- April 11 2023

What Are Test Specifications: With Examples And Best Practices

A complete tutorial that explores test specifications, their types and components, and how to create them.

OVERVIEW

A test specification, also known as a test case specification, is a holistic summary that includes information such as the scenarios to be tested, how they are to be tested, the frequency of such testing, and other pertinent information for each specific feature of the software application.

Test specifications are ‘written guidelines’ instructing the testers to run specific test suites and skip some. They consist of sections such as the goal of the particular test, the group of essential inputs, the group of expected results, methods to implement the test, and benchmarks to decide the acceptance of the specific feature.

The test specification is different for each unit that requires testing. Working from the approach in the test plan, the team decides which feature to test. It then refines the overall approach in the test plan into particular test techniques for the unit and the criteria for evaluating it. Using all this information, the team creates the test specifications.

What is Test Specification?

The test specification is a detailed summary of what scenarios one needs to test, how to and how often to test them, etc., for a specific feature.

Test runners utilize test specification strings to determine which tests to execute. These specifications are written instructions on which test suites to include or exclude. A comprehensive specification must incorporate the purpose of the test, a list of necessary inputs and expected outputs, techniques for executing the test, and benchmarks to verify whether the test passes or fails.
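As a rough illustration of how a runner might act on such include and exclude instructions, the sketch below filters a list of suite names with glob patterns. The function `select_suites` and the suite names are assumptions made for this example; real test runners each have their own specification syntax.

```python
from fnmatch import fnmatchcase


def select_suites(suites, include=("*",), exclude=()):
    """Return suite names that match any include pattern and no exclude pattern."""
    selected = []
    for name in suites:
        included = any(fnmatchcase(name, pattern) for pattern in include)
        excluded = any(fnmatchcase(name, pattern) for pattern in exclude)
        if included and not excluded:
            selected.append(name)
    return selected


# Hypothetical suite names, for illustration only.
suites = ["login/unit", "login/e2e", "checkout/unit", "checkout/e2e"]
chosen = select_suites(suites, include=["*/unit", "login/*"], exclude=["*/e2e"])
print(chosen)  # ['login/unit', 'checkout/unit']
```

Exclusion wins over inclusion here, so `login/e2e` is skipped even though it matches `login/*`. That precedence is a design choice of this sketch, not a rule stated in the article.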

Efficient software testing depends on correct, detailed, and precise test specifications. The QA team writes a test specification for every test case at the unit level. These specifications let testers understand the goal of a test case and implement it precisely. Test developers can also reuse earlier test specifications when they write new versions of a test, and use them as a baseline for comparing the old and new versions.

When a test specification is written correctly, the team can compare it with the test assertion to determine why a test failed. The specification is therefore crucial for diagnosing failures, and the precision of the test case and its specification has a considerable effect on testing efficiency. Documenting test cases in a test specification document also lets the team confirm that it has covered all the original requirements of the test and missed nothing.

Testers can also use test specification documents to simplify maintenance during the test phase. Their most significant benefit is that they support efficient testing and, consequently, software with fewer defects. Higher-quality software in turn improves customer satisfaction, which supports the organization's profits.

Why is Test Specification important?

Test specification helps ensure software testing is carried out effectively. It is essential for the following reasons:

- The testing process has many limitations. The exact nature of the test case considerably impacts testing effectiveness. Therefore, the correctness of the test specification decides the effectiveness of testing.

- Creating proper test specifications that expose application errors is a creative activity. For this, an organization has to rely on the skill of the software testers. The crux is that the set of test specifications should be of superior quality. Therefore, its quality decides the ability to expose errors.

The testing team develops the test specifications during the planning phase of software development. In software testing, the blueprint of the complete test design is termed test specification. The QA team takes the test plan as the foundation for creating the test specification and selects the software feature to undergo testing, refines the details mentioned in the test plan, and develops the precise test specification.

Drafting accurate test specifications is a significant part of the Software Testing Life Cycle. Even well-designed test cases will not produce the expected outcome if the testers misunderstand them, and testers come to understand the test cases by reading the test specifications.


Identifiers of Test Specifications

The testers assign the test specification identifiers to individual test specifications for their unique identification. These identifiers have a link to the pertinent test plan. Such specification identifiers enable uniquely identifying every test case and offer an overall view of the functionality to be tested by implementing the test case.

Each identified test specification records the following elements.

- Test case objectives: These are the reasons or goals for implementing the specific test case.

- Test items: These are the documents essential for the execution of a test case. This list of documents includes the code, user manual, Software Design Document (SDD), and Software Requirement Specifications (SRS), among others. They define the requirements or features that should be met before, during, and after testing.

- Test procedure specification: It consists of the step-by-step procedure to run the test case.

- Input specifications: This collection of inputs is essential to run a specific test case. Here, the testers must specify the precise values of the inputs and not generalized values.

- Output specifications: This is a description of what the result of running the test case should look like. The testing team compares the output specifications to the actual outputs to determine the success or failure of a test case. As with input specifications, the testers must specify the precise values of the outputs.

- Environmental requirements: The testers mention special environment requirements such as specific interfaces, software applications, and hardware tools.

- Special procedural needs: These describe the special conditions or constraints essential for fulfilling the test case implementation.

- Intercase dependencies: In some instances, two or more test cases depend on one another for correct implementation. For such instances, the testing team should include these intercase dependencies.
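The elements listed above can be captured in a small record type. The sketch below is one possible shape, not a standard schema: the class `CaseSpecification`, its field names, and the `TC-001`/`TC-002` identifiers are all assumptions for illustration. It also shows how recorded intercase dependencies could be used to derive a safe execution order with the standard-library `graphlib` module (Python 3.9+).

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class CaseSpecification:
    """One test case specification; the field names are illustrative."""
    identifier: str
    objective: str
    inputs: dict = field(default_factory=dict)            # precise input values
    expected_outputs: dict = field(default_factory=dict)  # precise output values
    procedure: list = field(default_factory=list)         # step-by-step actions
    test_items: list = field(default_factory=list)        # e.g. SRS, SDD, code
    environment: list = field(default_factory=list)       # hardware, software, interfaces
    special_procedures: list = field(default_factory=list)
    depends_on: list = field(default_factory=list)        # intercase dependencies


def execution_order(specs):
    """Order identifiers so each test case runs after the cases it depends on."""
    graph = {spec.identifier: set(spec.depends_on) for spec in specs}
    return list(TopologicalSorter(graph).static_order())


create_account = CaseSpecification(
    identifier="TC-001",
    objective="Create a user account",
    inputs={"email": "user@example.com", "password": "s3cret!"},
    expected_outputs={"status": 201},
)
log_in = CaseSpecification(
    identifier="TC-002",
    objective="Log in with the account created by TC-001",
    inputs={"email": "user@example.com", "password": "s3cret!"},
    expected_outputs={"status": 200},
    depends_on=["TC-001"],
)

print(execution_order([log_in, create_account]))  # ['TC-001', 'TC-002']
```

`TopologicalSorter` raises `graphlib.CycleError` if two test cases depend on each other, which is a useful signal that the dependencies need to be untangled.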

Components of a Test Specification

A test specification outlines the testing requirements and procedures for a software application. It typically includes the following components:

- Revision history: This records who created the test specification, when it was created, and what changed in each later revision.

- Feature description: This includes information on the feature of the software application that the testing team is about to test.

- Test scenarios: These include information on the scenarios the testing team wants to test.

- Omissions in testing: This includes information on the software application features that the testers will skip, either because of test limitations or because other people are assigned to test them.

- Nightly test cases: This includes the list of test cases, with high-level descriptions of the areas the testers will test whenever a new build becomes available.

- An alignment of the main test areas: The QA team arranges the test cases sequentially based on the sections the team is about to test. This is a crucial part of the test specification.

- Tests to verify functionality: The testing team conducts tests to ensure that the specific feature is functioning per the design specifications and verifies the error conditions.

- Security tests: This includes information about the tests pertinent to security.

- Accessibility tests: These include information about the tests pertinent to accessibility.

- Performance tests: These include information about confirming performance requirements for specific features.

- Globalization or Localization tests: This includes information about the tests the testers should implement to ensure that the software application meets local and international requirements.

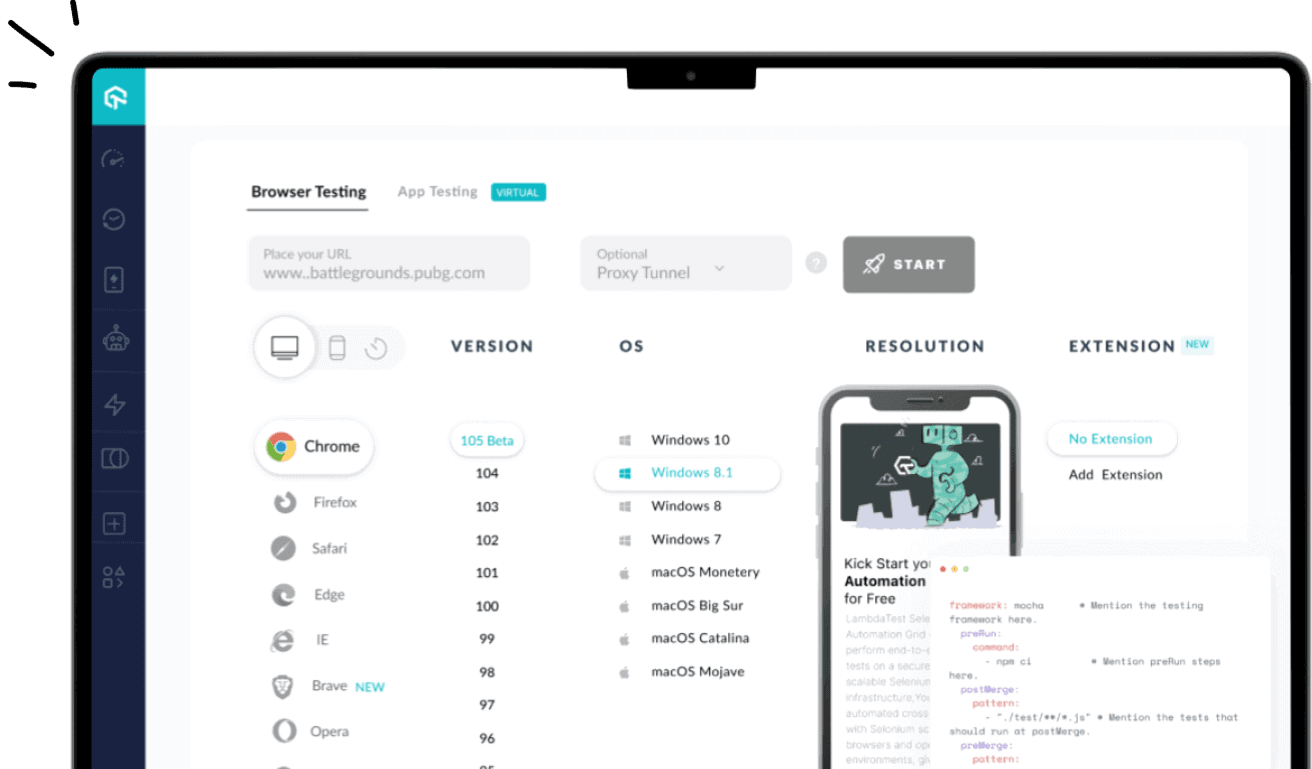

For your testing requirements, it is important to run test cases on real browsers, devices, and OS combinations. Digital experience testing platforms like LambdaTest can cater to all your testing requirements, like manual testing, automation testing, accessibility testing, and more.


This cloud-based platform lets you test web and mobile applications on 3000+ real browsers and platform combinations. You can also perform geolocation testing and localization testing of your software applications across 50+ geographies. To get started with automation testing, visit LambdaTest documentation.

Types of Test Specifications

Test specifications are of two types: developer-level and high-level test specifications. Following is a brief description of these two types.

Developer-Level Test Specification

Developers write these test specifications, usually while they build the software application and unit-test it. Developer-level test specifications are low-level and correspondingly detailed. They are used mainly by developers, and people without a development background may find them hard to follow. Their primary use is to let future developers check the unit tests.

High-Level Test Specification

Testers run end-to-end tests on the software application, which exercise its high-level functions. They write the high-level test specifications at the same level of detail and accuracy as those functions. Their descriptive wording lets non-technical people understand them. Their primary use is to let project managers confirm quality assurance and to serve as a reference in the future.

Process of Writing Test Specifications

The writer of the test specifications needs to implement the following steps while drafting the test specifications:

- Select a test case or a function to write the test specification.

- Read the test plan and comprehend the intention of the test.

- Make a list of features to be tested and a list of features to be skipped from testing.

- Ensure the test specifications are brief, accurate, and up to 150 characters.

- Confirm that the test specifications are in the present tense.

- If you think the original test plan should be refined, describe the modifications and the reasons they are necessary.

- Make a list of the input criteria.

- List the inputs the testing team will use during the test implementation.

- Note down the expected output for each input. Be as specific as possible so that no bugs are missed. The pattern of the test specification should be the following: “The output is _____ when the input is ______.”

- Exclude any filler words in the entire test specification. Ensure that you are concise.

- Ensure that the test specification has a unique identifier.

- Add descriptions of the environmental requirements, such as software, hardware, and interface essential to run the test.
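Some of the wording rules in these steps are mechanical enough to check automatically. The sketch below is a hypothetical helper, `review_specification`, that flags a one-line specification when it exceeds 150 characters, contains a filler word, or lacks the "output when input" shape. The filler-word list is an assumption limited to 'will' and 'shall', and the pattern check only looks for the word "when", so treat it as a starting point rather than a complete linter.

```python
import re

MAX_LENGTH = 150
FILLER_WORDS = {"will", "shall"}  # assumed list; extend as your team sees fit


def review_specification(text):
    """Return a list of problems found in a one-line test specification."""
    problems = []
    if len(text) > MAX_LENGTH:
        problems.append(f"longer than {MAX_LENGTH} characters")
    words = set(re.findall(r"[a-z']+", text.lower()))
    found = sorted(words & FILLER_WORDS)
    if found:
        problems.append("contains filler words: " + ", ".join(found))
    # Crude check for the 'output when input' pattern.
    if " when " not in f" {text.lower()} ":
        problems.append("does not follow the 'output when input' pattern")
    return problems


print(review_specification("calls sumModal when the button is clicked"))
# []
print(review_specification("will call sumModal when the button is clicked"))
# ['contains filler words: will']
```

A check like this could run in a pre-commit hook or CI step, leaving reviewers to judge the parts that cannot be automated, such as whether the expected output is specific enough.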

Test Specification vs. Test Plan

In this section, let’s explore the difference between test specification and test plan.

| Test Specification | Test Plan |
|---|---|
| It is a detailed summary of the scenarios to be tested, the ways of testing these scenarios, and the frequency of testing them, for a specific feature. | It is a detailed document that includes the areas and activities of software testing. It outlines the test strategy, testing aims, timeline, essential resources (software, hardware, and human), estimation, and, eventually, test deliverables. It is the foundation of software testing. |
| It depends on the test plan because the test specification draft is based on it. | It covers all test specifications for a specific feature area and gives a high-level overview of the testing carried out in that area. It does not depend on the individual test specifications. |
| It is derived from test cases. | It is derived from the Software Requirement Specifications (SRS) document. |
| Software testers write it. | The Test Lead or the Quality Assurance Manager writes it. |

The Goldilocks Rule for Test Specifications

This rule applies to writing test specifications. According to it, an effective test specification has inputs and expected outputs that are neither too specific nor too general. The tester should strike a balance between the two.

Example 1: If you write, “does what I expect,” this is very general. The reader cannot understand what the test was checking or the reason for the failure of the test.

Example 2: If you write “adds the cache-control none header and the vary Lang header,” this is very specific. As per this rule, you can write, “adds correct headers.”
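One way to read the rule is that the specification sentence stays at the "just right" level while the precise values live in the test body. The sketch below assumes a hypothetical function `add_response_headers`; the header values `no-store` and `Accept-Language` are illustrative stand-ins, since the example above only names the headers loosely.

```python
def add_response_headers(headers):
    """Return a copy of `headers` with caching and language headers added."""
    updated = dict(headers)
    updated["Cache-Control"] = "no-store"   # illustrative value
    updated["Vary"] = "Accept-Language"     # illustrative value
    return updated


def test_adds_correct_headers():
    # The name says "adds correct headers"; the assertions say which ones.
    result = add_response_headers({"Content-Type": "text/html"})
    assert result["Cache-Control"] == "no-store"
    assert result["Vary"] == "Accept-Language"
    assert result["Content-Type"] == "text/html"  # existing headers are kept


test_adds_correct_headers()
```

If the set of headers changes later, only the assertions need to be updated and the specification sentence remains accurate.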

Best Practices for Writing Test Specifications

Here are some best practices you need to follow while writing test specifications:

- You should write test specifications in the present tense. Do not write, “will call sumModal when the button is clicked.” Instead of this, write “calls sumModal when the button is clicked.” Test specifications are statements about the functionality of the feature. When you write them in the present tense, they are easier to read.

- Write specifications as briefly as possible. As a rule of thumb, they should be at most 150 characters. When your test specification exceeds 150 characters, rewrite it more briefly or break the functionality into two or more smaller sentences. Moreover, you should not add filler words such as ‘will’ or ‘shall.’

- You must focus on the input and the output. The pattern of the specification should be ‘output when input.’ An example is ‘returns true when you type a number’ or ‘calls sumModal when you click a button.’

- Several JavaScript testing libraries have a feature known as ‘describe’ blocks. You can use these describe blocks to define a test suite in a file. However, you should never nest describe blocks within each other to organize tests. In a file with twenty or more tests in nested describe blocks, you waste time choosing the block where a new test belongs, and you might accidentally delete a curly brace.

- For end-to-end tests, write high-level test specifications. For unit tests, write developer-level specifications.
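The practices above are phrased for JavaScript, but they carry over to other languages. The sketch below uses Python's standard `unittest` module as a rough analogue: a single flat test class plays the role of one un-nested describe block, and each method name is a present-tense "output when input" specification. The function `is_number` is a made-up example subject.

```python
import unittest


def is_number(text):
    """Return True when `text` parses as a number."""
    try:
        float(text)
    except ValueError:
        return False
    return True


class IsNumberSpec(unittest.TestCase):
    # One flat suite; each test name reads as "output when input".
    def test_returns_true_when_input_is_a_number(self):
        self.assertTrue(is_number("42"))

    def test_returns_false_when_input_is_not_a_number(self):
        self.assertFalse(is_number("forty-two"))


suite = unittest.defaultTestLoader.loadTestsFromTestCase(IsNumberSpec)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

With `verbosity=2`, the runner prints each method name next to its result, so the report itself reads as a list of specifications.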

Sample: Test Case Specification Document

Here is a sample test case specification document:

<Project name/Acronym>

TEST CASE SPECIFICATION

Version x.x

mm/dd/yyyy

(Page 1)

Table of Contents

(Page 2)

1. Introduction

You must include the complete information for the software application for which you are preparing the Test Case Specification. You must mention one or more identification numbers, names or titles, part numbers, acronyms and abbreviations, release numbers, and version numbers. This is to be followed by the purpose of this document, the intended audience, the scope of activities that resulted in the development of this document, and the evolution that can be expected from this document. The final point concerns any privacy or security considerations pertinent to using the Test Case Specification.

1.1 Overview

You must include the context and the purpose of the software application or situation. You have to mention the history of the development of this situation. After this, you should have one or more high-level context diagrams for the software application and scenarios. You can refer to the System Design Document (SDD), Requirements Document, and High-level Technical Design Concept for these diagrams. You should update one or more diagrams based on the present understanding or information. Regarding updates to these diagrams, you must mention the reasons for each update.

2. Test Case Summary

You have to mention an overview of the test scripts or the test cases scheduled for execution. Identifying each test case or script by a project-unique identifier and title is essential. You can group the test cases or scripts by the test function, such as Regression testing, System testing, and User Acceptance testing. Sometimes, information on test cases or scripts is stored in an automated tool. Therefore, you can export this information from the tool and add it as an appendix to this document.

Test Case Summary Table:

| Test Case or Script Identifier | Test Case or Script Title | Execution Priority |
|---|---|---|

3. Test Case-To-Requirements Traceability Matrix

You must create a table that maps all the requirements in the Requirements Document to the corresponding test cases or scripts. If the test case or script information is stored in an automated tool, you have to export or print the matrix from the tool to be included in this document.

4. Test Case Details

You must provide the details for every test case or script mentioned in the Test Case Summary section. You need to have a separate detail section for every test case or script. If the test case or script information is stored in an automated tool, you have to gather the information mentioned in the following subsections for every test case or script from the automated tool and include this information as an appendix to this document. You can also print the test case or script details in a table to group the test cases or scripts with identical features. This minimizes the volume of the reported information, which facilitates a review of the content.

4.1 Test Case or Script Identifier

You have to mention the project-unique identifier for the test case or script along with a descriptive title. This is followed by the test case or script date, number, version, and any modifications related to the test case or script specification. The number of the test case or script indicates the level of the test case or script relative to the corresponding software level. This helps coordinate the software development and test versions concerning configuration management.

4.1.1 Test Objective

You have to write the purpose of the test case or script and follow it with a short description. The next step is to identify whether multiple test functions can use the test case or script.

4.1.2 Intercase Dependencies

In this section, you must note the prerequisite test cases or scripts that can generate the test environment or input data that enables running this test case or script.

Moreover, you also have to note the post-requisite test cases or scripts for which the test environment or input data is created when this test case or script runs.

4.1.3 Test Items

You must describe the features or items the test case or script will test. Some examples are code, design specifications, and requirements, among others. While describing these features or items, you need to know the level for which the test case or script is written. Based on the test case or script level, you can refer to the item description and definition from one of many sources. Another useful suggestion is to refer to the source document, such as the Installation Instructions from the Version Description Document, Operations & Maintenance Manual, User Manual, System Design Document, and Requirements Document, among others.

4.1.4 Pre-requisite Conditions

You need to identify if any pre-requisite conditions are mandatory before implementing the test case or script. Here, it is essential to discuss the following considerations as per applicability. This includes environmental needs like training, hardware configurations, system software (tools and operating systems), and other software applications. The next considerations are the control parameters, pointers, initial breakpoints, flags, drivers, stubs, or initial data you must set or reset before starting the test. Then, you must discuss the preset hardware states or conditions essential for running the test case or script. The next is the initial conditions you will use to make timing measurements. Then, you have to describe the conditioning of the simulated environment. The last considerations are other special conditions related to the test case or script.

4.1.5 Input Specifications

You have to note down all the inputs essential for the execution of the test case or script. While doing this task, you must remember the level for which the test case or script has been written.

You should ascertain that you have included all essential inputs. Some examples of these are human actions, tables, data (values, ranges, and sets), relationships (timing), files (transaction files, control files, and databases), and conditions (states), among others. You need to describe the input as text, an interface to another system, a file identifier, or a picture of a properly completed screen. You can use tables for data elements and values to simplify the documentation. As applicable, you can include the following. For each test input, you must mention the name, purpose, and description (such as accuracy and range of values, among others). This is to be followed by the source of the test input and the method you use to choose the test input. You have to indicate the type of the test input, that is, real or simulated. This is followed by the event sequence or time of the test input. The last point is about the mode in which you will control the input data to do the following: permit retesting if essential; test one or more items with a reasonable or minimum count of data types and values; handle one or more items with a gamut of valid data types and values that test conditions such as saturation, overload, or other worst-case situations; and handle the one or more items with invalid data types and values that test the correct handling of abnormal inputs.

4.1.6 Expected Test Results

You must include all expected test results, including intermediate and final results for the test case or script. You need to examine the specific files, reports, and screens, among others, after the test case or script is run to describe the system's appearance. You should be able to identify all outputs essential for verifying the test case or script. You should consider the level for which the team has written the test case or script and describe the outputs accordingly.

You must make it a point to identify all outputs, such as the following: timing (duration, response times), relationships, files (transaction files, control files, databases), conditions (states), human actions, tables, and data (sets, values), among others. You can simplify the output description using tables. To simplify the documentation, you can include the input and the associated output in the same table. This method improves the usefulness of documentation.

4.1.7 Pass/Fail Criteria

You should identify the criteria the team can use to evaluate the intermediate and final results of the test case or script and conclude whether the test case or script is a success or a failure. Every test result must be accompanied by the following information as per applicability. The output is bound to vary. You have to specify the acceptable precision or range for such variations. It is essential to note down the minimum count of alternatives or combinations of the input and output values considered acceptable test results. You should specify the minimum and maximum permissible test duration regarding the count of events or time. The maximum count of system breaks, halts, and interrupts that may occur should be mentioned. The permissible severity of processing errors is crucial. You need to specify the conditions that indicate an inconclusive result, due to which it would become essential to conduct re-testing. You should know the conditions for which you must interpret the output as indicative of abnormalities in the input test data, test procedures, or test data files or database. The final point is to note the permissible indications for the test's results, status, control, and readiness to conduct the next test case or script. This is to be coupled with other pertinent criteria about the test case or script.

4.1.8 Test Procedure

You have to describe the series of numbered steps that the team must complete in a sequence for the execution of the test procedure of the test case or script. These test procedures are to be a part of the appendix regarding this paragraph. This is for document maintenance. You need to consider the type of software being tested to determine the correct level of detail in the test procedure. Every step in the test procedure includes a series of keystrokes that have a logical relation with one another. This is a better method than considering each keystroke as a separate step of the test procedure. The apt level of detail is the level at which the details can specify the expected results and compare these expected results with the actual results. The test procedure should include the following. You must mention the relevant test operator actions and equipment operations for each step. This includes commands that are applicable to the initiation of the test case or script, application of test inputs, an inspection of test conditions, implementation of interim evaluations of test results, recording data, interrupting or halting the test case or script, sending a request for diagnostic aids, modification of the data files or the database, repetition of the test case if it is unsuccessful, application of alternate modes as is essential for the test case or script, and termination of the test case or script. You need to note the evaluation criteria for every step and the expected outcome. Sometimes, the test case or script might deal with multiple needs. You should be able to identify the correlation between each procedural step and the related needs. You need to include the actions that should be executed when an indicated error or a program stop occurs.

Some such actions are gathering system and operator records of test results, pausing or halting time-sensitive test-support software and test apparatus, and recording critical data based on indicators for usage as a reference. The final point is to include the actions that the team can take for analysis of test results for the fulfillment of the following aims: evaluation of the test output against the expected output, evaluation of the output as a foundation to continue the test sequence, identification of the media and location of the generated data due to the test case or script, and detection of whether the output is generated.

Test Procedure Steps for the Given Test Case or Script Identifier:

| Step No. | Action | Expected Results or Evaluation Criteria | Requirements Tested |
|---|---|---|---|

4.1.9 Assumptions and Constraints

You should identify if there are any limitations, constraints, or assumptions in the description of the test case originating from the test or system conditions. An example is the limitations on data files, databases, personnel, equipment, interfaces, or timing. If the team has approved exceptions or waivers to particular parameters and limits, you should identify them and describe their impact on the test case or script.

<You must repeat all sections from 4.1 to 4.1.9 for every test case or script.>

Appendix A: Test Case-to-Requirements Traceability Matrix

Following is an example of this matrix type. You can amend it aptly to provide a view of the actual identification and mapping of test cases to requirements for the particular system or project.

| Requirement | Test Case 1 | Test Case 2 | Test Case 3 |
|---|---|---|---|
| Requirement 1 | <identify traceability> | | |

Appendix B: Record of Changes

You must render information about the method the team will use to control and track the development and distribution of the Test Case Specification. Following is an example of a relevant table.

| Version number | Date | Author or Owner | Description of change |
|---|---|---|---|

Appendix C: Glossary

You should provide brief but accurate definitions of the terms in this document with which the readers might need to be more conversant. You need to arrange such terms in alphabetical order.

| Term | Acronym | Definition |
|---|---|---|

Appendix D: Referenced Documents

You have to display a summary of the relationship of this document to other pertinent documents. These include relevant technical documentation, pre-requisite documents, and companion documents.

| Document name | Document location or URL | Issuance date |
|---|---|---|

Appendix E: Approvals

This is a list of those persons who have reviewed the Test Case Specification and have approved the information in this document. If any changes are made to this document, they should be shared with these persons or their representatives for further approval.

| Document Approved By | Date of Approval |
|---|---|
| Name: <Name> <Designation> <Company name> | |
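The traceability matrix described in section 3 and Appendix A of the sample document can also be generated from data rather than maintained by hand. The sketch below is one possible approach under assumed inputs: a list of requirement identifiers and a mapping from each test case to the requirements it covers. The function names and the `REQ-`/`TC-` identifiers are hypothetical.

```python
def build_traceability_matrix(requirements, coverage):
    """Map each requirement to the sorted test cases that cover it."""
    matrix = {requirement: [] for requirement in requirements}
    for test_case, covered in coverage.items():
        for requirement in covered:
            if requirement not in matrix:
                raise KeyError(
                    f"{test_case} references unknown requirement {requirement}"
                )
            matrix[requirement].append(test_case)
    return {req: sorted(cases) for req, cases in matrix.items()}


def uncovered(matrix):
    """Return the requirements that no test case covers."""
    return [req for req, cases in matrix.items() if not cases]


# Hypothetical identifiers, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
coverage = {"TC-001": ["REQ-1"], "TC-002": ["REQ-1", "REQ-2"]}

matrix = build_traceability_matrix(requirements, coverage)
print(matrix)
# {'REQ-1': ['TC-001', 'TC-002'], 'REQ-2': ['TC-002'], 'REQ-3': []}
print(uncovered(matrix))  # ['REQ-3']
```

Listing uncovered requirements makes gaps visible before testing starts, which is the main reason for keeping the matrix at all.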

Conclusion

Test specifications are a crucial component of the Software Testing Life Cycle (STLC). When QA writes well-crafted test specifications, the chances of developing high-quality products increase significantly. Moreover, it's important to structure your test specification document to prioritize test cases, such as nightly test cases, weekly test cases, and complete test passes.

About the Author

Irshad Ahamed is a versatile software professional and technical writer with around four years of working experience in various companies. He adapts readily to changing technology and keeps the skills his profession requires up to date.
