December 18, 2023

What Are Test Reports: With Examples And Best Practices

A complete tutorial that explores test reports, their types and sections, and how to create an effective test report.

OVERVIEW

Test reports are documents that summarize the testing of a software project: the environments in which the QA team validated the code, who performed the tests, and when and how they were performed. A test report serves as a record of what code was tested, in which configuration, and which bugs were found.

Every software development organization aims to retain its customers, and doing so requires delivering high-quality software products and services. This is where the significance of software testing becomes clear.

Test reporting is a critical phase of the software testing process. If the team carries out this phase diligently and on schedule, the resulting test analysis and feedback are useful throughout the Software Development Life Cycle (SDLC).

What are Test Reports?

A software test report summarizes the tests that were run, what they were meant to achieve, and what happened during the testing project. It shows how well testing went and helps explain why some tests did not behave as expected.

After the software testing team completes its testing work, it generates a test report containing the details of the testing procedures, the overall quality of the application, the test results, and the identified defects. The report is a consolidated summary of all testing activities.

A test reporting document is also called a test closure report, and it is read by the project's various stakeholders. An effective test report has the following qualities:

- Detailed information: Include all the details of the testing activities. Keep them concise, but do not reduce them to an abstract. A detailed yet concise test report gives stakeholders a clear picture of the testing activities.

- Clarity: Present every aspect of the testing process clearly.

- Format: Follow a standard template for the test report. A standard format lets stakeholders review and understand the report quickly.

- Specifications: Include all test result specifications, stated briefly and to the point.

When to Create a Test Summary Report?

The Test Summary Report documents the entire testing process, so it must capture all vital information and outcomes from each phase of testing. The ideal time to compile it is after all testing activities are complete. This ensures that the report is a thorough and accurate record of the entire testing effort, capturing every significant event and result.

Why are Test Summary Reports Important?

Test reports enable stakeholders to assess the efficiency of the testing and to identify the causes of failed tests. Stakeholders can evaluate the testing process and the quality of a specific feature or of the entire application, and they can see how the team handled and resolved defects.

Using this information, stakeholders can make product release decisions, which is why the data in a test report is vital to the business.

Various stakeholders, such as product managers, analysts, developers, and testers, read the test report to determine the origin of each issue and the stage at which it surfaced. Using the report, they can locate the causes of negative test results and analyze them thoroughly, whether the cause is weak implementation, unstable infrastructure, a mismanaged back end, or defective automation scripts.

After reading a test report, one should have enough information to answer the following questions:

- Has the team avoided unnecessary testing?

- How stable are the tests?

- Do the testers in the team have adequate skills to identify the issues in an advanced stage?

- What value has the testing team accomplished after all the testing activities?

Test reports are essential because they make it possible to monitor testing activities, contribute to improving the quality of the application, and make earlier product releases feasible.

Benefits of Test Reports

Test reports are helpful in the following ways:

- The relevant stakeholders can get all the updates about the project's status and the software application's quality.

- Based on these updates, the stakeholders can direct corrective measures and amendments.

- Test reports help stakeholders decide whether to ship the software application to the customer.

- The report documents the efforts of the testing team. By reviewing it, the team can learn lessons and apply them to make future testing even more effective.

- Test reports help ensure that only software applications of adequate quality are released.

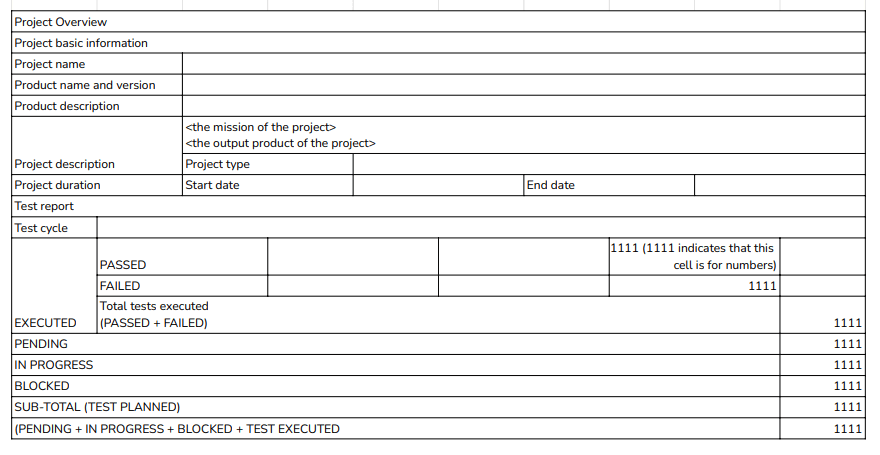

Templates of a Test Report

Here are some possible templates for a Test Report.

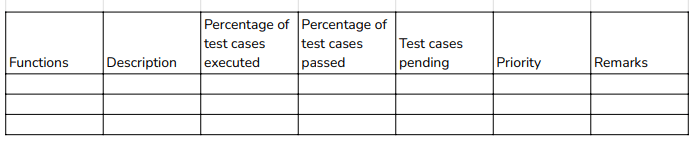

Template 1:

Template 2:

Sections of a Test Report

There are various templates for test summary reports. Choose the template that matches your requirements and customize its sections to suit your project.

The sections of a report broadly fall into the following categories.

Document Control

This section consists of two points: the Revision History and the Distribution List.

- The Revision History records information such as the date on which the team created the test report, the date of the most recent update, and the sections that were updated.

- The Distribution List names the people involved in the testing process, such as test engineers, test leads, and test managers, as well as those who have reviewed the test report, such as product managers and other stakeholders.

Project Overview

Describe the project, including details such as the project's name, type, and duration, the product version, and a description of the product.

Test Objective

Describe the purpose of the software testing. This includes the objectives of each type of testing performed, such as performance testing, security testing, regression testing, UI testing, and functional testing.

You can also list the activities the testing team carried out as part of the testing. This section shows that the QA team clearly understands the test object and its requirements.

Test Summary

Include information such as the number of test cases executed, the numbers of passed and failed tests, the pass/fail percentage, and comments. The team can present this information more clearly using visual representations such as tables, charts, graphs, and color coding.
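To make the idea concrete, here is a minimal Python sketch that lays out per-module results as a plain-text table. It assumes results are already available as (passed, failed) counts per module; the function name `summary_table` and the module names are illustrative only, and a real report would more likely use a spreadsheet or a reporting tool.

```python
def summary_table(rows: dict[str, tuple[int, int]]) -> str:
    """Render per-module (passed, failed) counts as a fixed-width text table."""
    header = f"{'Module':<14}{'Executed':>9}{'Passed':>8}{'Failed':>8}{'Pass %':>8}"
    lines = [header]
    for module, (passed, failed) in rows.items():
        executed = passed + failed
        # Guard against modules with no executed tests.
        pass_pct = 100 * passed / executed if executed else 0.0
        lines.append(f"{module:<14}{executed:>9}{passed:>8}{failed:>8}{pass_pct:>8.1f}")
    return "\n".join(lines)


print(summary_table({"Registration": (40, 2), "Booking": (55, 5), "Payment": (30, 0)}))
```

The same numbers could just as easily feed a chart; a fixed-width table is used here only because it needs no extra libraries.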

Included Areas

This section describes the areas of the software application that were tested, along with their functionality. There is no need to describe every test scenario in detail; cover all the tested areas at a high level.

Excluded Areas

This section lists the areas of the software application that the testing team did not test. Mention every such area along with the specific reason for its exclusion, such as limited access to the required devices. If customers learn about excluded areas only later, they may raise concerns. This is the main reason to record which areas the QA team has not tested, along with the expectations relevant to each area.

Testing Approach

Describe what the testing team tested and how it tested each area. Also describe the team's testing approaches and the details of the various steps.

Defect Reports

Defects are usually recorded in the bug report. However, including defect information in the test report as well makes the report more useful. This section includes the total number of bugs the team handled during testing and their current status (such as open, closed, or resolved).

Other details include bugs marked ‘Deferred’ or ‘Not a Bug,’ reopened bugs, open bugs from the previous release, newly found bugs, and the total number of open bugs. This is a crucial part of the test report because these metrics support correct decision-making and product improvement.
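As an illustration, the defect figures above could be tallied from an export of the bug tracker. This Python sketch assumes each defect has an ID, a status, and the release in which it was found; the `Defect` class, the status strings, and the choice to treat only ‘Open’ and ‘Reopened’ as open are assumptions, since every tracker has its own workflow.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Defect:
    bug_id: str
    status: str    # hypothetical values: "Open", "Reopened", "Resolved", "Closed", "Deferred", "Not a Bug"
    found_in: str  # release in which the defect was first reported


# Statuses counted as still open (an assumption; adapt to your tracker's workflow).
OPEN_STATUSES = {"Open", "Reopened"}


def defect_summary(defects: list[Defect], current_release: str) -> dict:
    """Tally defects for the Defect Reports section of a test report."""
    open_defects = [d for d in defects if d.status in OPEN_STATUSES]
    return {
        "total": len(defects),
        "by_status": dict(Counter(d.status for d in defects)),
        "new_in_release": sum(d.found_in == current_release for d in defects),
        "open_from_previous": sum(d.found_in != current_release for d in open_defects),
        "total_open": len(open_defects),
    }


bugs = [
    Defect("BUG-1", "Closed", "2.0"),
    Defect("BUG-2", "Open", "1.9"),
    Defect("BUG-3", "Reopened", "2.0"),
    Defect("BUG-4", "Deferred", "2.0"),
]
print(defect_summary(bugs, current_release="2.0"))
```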

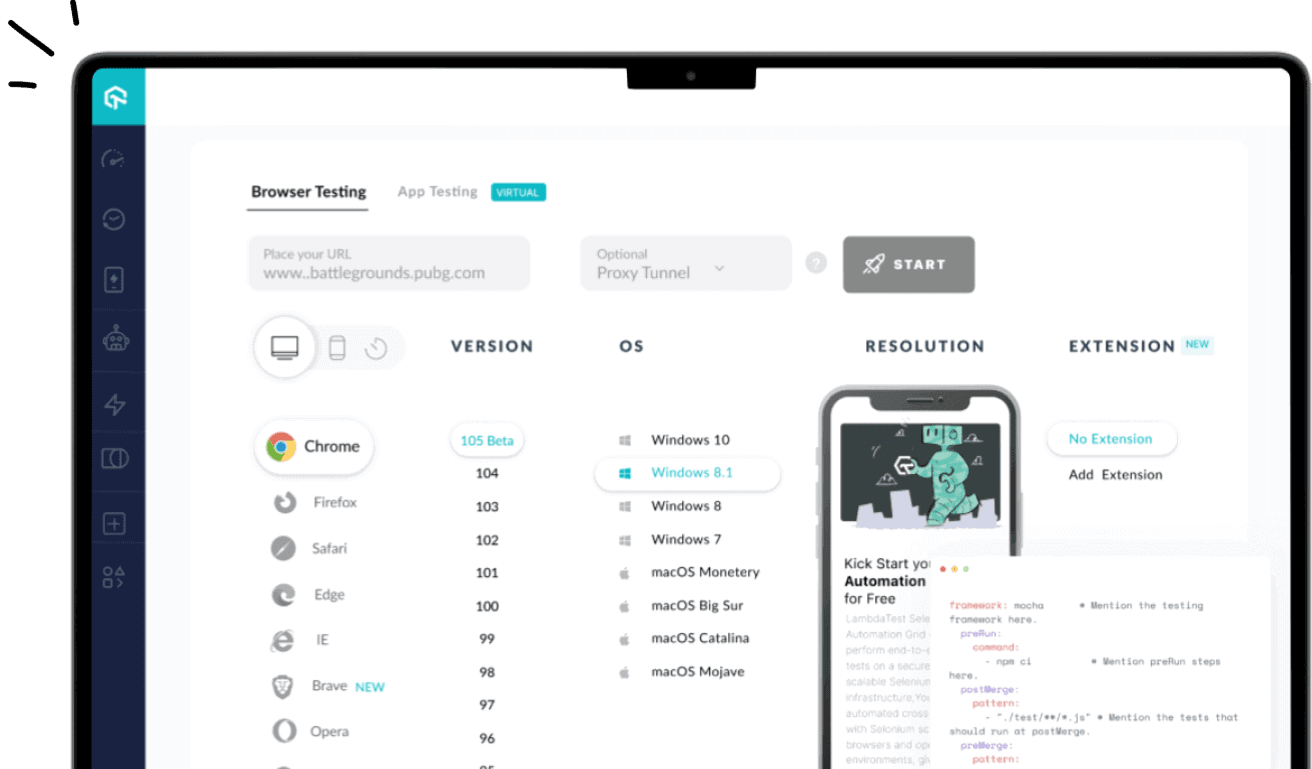

Platform Details

Testing teams now validate software applications across multiple platforms, which means testing on various browsers, devices, and operating systems. List all the platforms and environments used to test the application.

Knowledge Management

This section covers the lessons the team learned during the current testing cycle and a list of issues that need particular attention, followed by information about the testing team's work in ensuring the application's quality. It also lists the enhancements the company should implement in future testing cycles. Lastly, it offers suggestions or remarks for the various stakeholders.

Gross Summary

In this section, include the testing team's feedback, that is, its overall opinion of the application under test. The summary informs the customer about critical issues and any issues that are still open. After reading it, the customer can set expectations about the application's shipping date.

For larger organizations, the sections above may not be sufficient. The report should also include supporting data such as video recordings, screenshots, network traffic, and logs, which enable data-driven decision-making.

Ideally, the testing team should generate the test report at the end of the testing cycle so that it can include the results of the regression tests.

However, the team should leave enough time between submitting the test report and shipping the application to the customer. This interval lets the customer and other stakeholders understand the overall health of the application and the testing cycle, so that the relevant teams can make any necessary changes.

After generating the test report, the testing team should share it with the stakeholders, the customer, and all team members. This gives everyone an overview of the testing cycle and helps them identify ways to improve. The report also serves as a useful reference for newer members of the team.

Types of Test Reports

Test reports are of the following three types.

Test Incident Report

Whenever the team encounters a defect during the testing cycle, it logs an entry in the defect repository. This entry is called a Test Incident Report. Each defect is assigned a unique ID so that the incident can be identified, and the team highlights high-impact incidents in the test reports.
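A minimal Python sketch of incident records with unique IDs. It assumes a simple in-memory counter in place of the defect repository, which would normally assign IDs itself; the `Incident` class, the `INC-` prefix, and the severity scale are hypothetical.

```python
import itertools
from dataclasses import dataclass, field

# Simple in-memory ID source (an assumption); a real defect repository assigns its own IDs.
_id_counter = itertools.count(1)


@dataclass
class Incident:
    summary: str
    severity: str  # hypothetical scale: "critical", "major", "minor"
    incident_id: str = field(default_factory=lambda: f"INC-{next(_id_counter):04d}")


HIGH_IMPACT = {"critical", "major"}


def high_impact(incidents: list[Incident]) -> list[Incident]:
    """Select the incidents to highlight in the test report."""
    return [i for i in incidents if i.severity in HIGH_IMPACT]


log = [
    Incident("Payment page times out", "critical"),
    Incident("Typo in footer text", "minor"),
    Incident("Hotel search ignores selected dates", "major"),
]
for incident in high_impact(log):
    print(incident.incident_id, incident.summary)
```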

Test Cycle Report

In every test cycle, the team plans and runs specific test cases, using a different build of the application for each cycle. The expectation is that the application stabilizes as it passes through successive test cycles.

The team creates the Test Cycle Report, which consists of the following information:

- A summary of all the steps the team has implemented in the test cycle.

- The defects the team detected during the test cycle, classified by impact and severity.

- The progress of the team in terms of the fixed defects from the previous cycle to the current cycle.

- The defects that the team has not fixed in the cycle.

- The observed variations in the schedule or efforts.
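The cycle-to-cycle progress in this list can be approximated by comparing the sets of open defect IDs at the end of two consecutive cycles. This Python sketch rests on a simplifying assumption: a defect that is no longer open is counted as closed, although in practice it may have been deferred or rejected rather than fixed, so the tracker's status should be checked before reporting it as fixed. The function name is hypothetical.

```python
def cycle_progress(previous_open: set[str], current_open: set[str]) -> dict[str, list[str]]:
    """Compare the open defect IDs recorded at the end of two consecutive test cycles."""
    return {
        # No longer open; possibly fixed, but check the tracker (it may be deferred).
        "closed_since_previous": sorted(previous_open - current_open),
        # Open in both cycles: defects not fixed in this cycle.
        "still_open": sorted(previous_open & current_open),
        # Open now but not before: newly found or reopened.
        "newly_open": sorted(current_open - previous_open),
    }


cycle_1 = {"BUG-101", "BUG-102", "BUG-103"}
cycle_2 = {"BUG-102", "BUG-110"}
print(cycle_progress(cycle_1, cycle_2))
```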

Test Summary Report

In the last step of the test cycle, the team recommends whether the product is suitable for release. The team creates the Test Summary Report, which consists of the summary of the outcome of a test cycle. This report includes the following sections:

- The Test Summary Report ID.

- Identification of the test items included in the report with the Test ID.

- The deviations from the test plans and the test procedures, if any.

- A result summary covering all the results, together with the resolved incidents and their solutions.

- An overall assessment of whether the product is fit for release, along with release recommendations.

The Test Summary report is of two types:

- The Phase-wise Test Summary, generated at the end of every phase.

- The final Test Summary Report.

How to write a good Test Summary Report?

To see how to write a good test summary report, suppose an organization is developing a software application called ABC for AB, an online travel agency. The software testing team does the following while generating the test summary report.

The team records all the activities it performed while testing the ABC application and then documents an overview of the application.

The ABC application provides services for booking bus tickets, railway tickets, hotel reservations, domestic and international holiday packages, and airline tickets. To provide these services, the application has modules such as Registration, Booking, and Payment. The team includes all of this information in the test report.

Now let’s see the steps to create a test summary report for an online travel agency.

Step 1: Create a Testing Scope

The team lists the modules or areas that are in scope, out of scope, and untested owing to dependencies or constraints.

- In scope: We completed the functional testing of the following modules:

  - User registration

  - Registration confirmation

  - Ticket booking

  - Hotel package booking

  - Payment

- Out of scope:

  - Multi-tenant user testing

  - Concurrency

- Untested modules:

  - The User Registration page with mixed-case field values

Step 2: Test Metrics

Test metrics include the following:

- The count of planned test cases

- The count of executed test cases

- The count of passed test cases

- The count of failed test cases

Test metrics are used to analyze test execution results, the status of test cases, and the status of defects, among other things. The testing team can also generate charts or graphs that show the distribution of defects by function, severity, or module.
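The four counts listed above are often turned into percentages, and defects are often grouped before charting. A small Python sketch, assuming defects are plain dictionaries with hypothetical `module` and `severity` fields:

```python
from collections import Counter


def execution_metrics(planned: int, executed: int, passed: int, failed: int) -> dict[str, float]:
    """Turn the four basic counts into percentages."""
    if executed > planned or passed + failed > executed:
        raise ValueError("inconsistent counts")
    return {
        "executed_pct": round(100 * executed / planned, 1) if planned else 0.0,
        "pass_pct": round(100 * passed / executed, 1) if executed else 0.0,
        "fail_pct": round(100 * failed / executed, 1) if executed else 0.0,
    }


def distribution(defects: list[dict[str, str]], key: str) -> Counter:
    """Count defects by a field such as "module" or "severity" (hypothetical field names)."""
    return Counter(d[key] for d in defects)


print(execution_metrics(planned=120, executed=110, passed=98, failed=12))
defects = [
    {"module": "Payment", "severity": "critical"},
    {"module": "Booking", "severity": "minor"},
    {"module": "Payment", "severity": "major"},
]
print(distribution(defects, "module"))
print(distribution(defects, "severity"))
```

The resulting `Counter` objects map directly onto the bars of a module-wise or severity-wise chart.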

Step 3: Implemented Testing Type

The team lists all the types of testing it performed on the ABC application. This shows readers that the application has been tested properly.

- Smoke testing

When the QA team receives a build, it performs smoke testing to confirm that the crucial functionalities work as expected. If the build passes smoke testing, the team accepts it and proceeds with the next type of testing.

- Regression testing

Rather than testing a particular feature or defect fix, the team tests the entire software application, including its defect fixes and new enhancements. This testing confirms that the application still functions as expected after these fixes and enhancements have been added. The team also adds and executes new test cases for the new features.

- System Integration testing

The team performs system integration testing to verify that the integrated modules and systems work together as per the requirements.

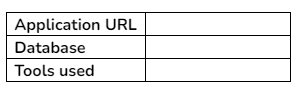

Step 4: Test Environment and Tools

The team notes all the details of the test environment used for the testing activities (such as Application URL, Database version, and the tools used).

The team can create tables in the following format.

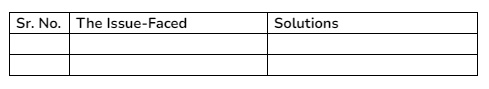

Step 5: Learnings during the Testing Process

The team records the critical issues it faced while testing the application and the solutions it devised to overcome them, so that it can draw on this information in future testing activities.

The team can represent this information in the following format.

Step 6: Suggestions or Recommendations

The team notes suggestions or recommendations while keeping the pertinent stakeholders in mind. These suggestions and recommendations serve as guidance during the next testing cycle.

Step 7: Exit Criteria

Exit criteria define the conditions under which testing is considered complete, such as the following:

- The team has successfully executed all its planned test cases.

- The team has closed all the critical issues.

- The team has planned the actions for all open issues, which it will address in the next release cycle.
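The example exit criteria above can be expressed as a simple check. The following Python sketch is illustrative only: the `Issue` fields, the severity labels, and reading "executed all planned test cases" as a count comparison are assumptions, and real exit criteria come from the project's test plan.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    issue_id: str
    severity: str                  # hypothetical scale: "critical", "major", "minor"
    is_open: bool
    has_action_plan: bool = False  # e.g. scheduled for the next release cycle


def exit_criteria_met(planned: int, executed: int, issues: list[Issue]) -> tuple[bool, list[str]]:
    """Check the three example exit criteria; return (met, reasons they are not met)."""
    reasons = []
    if executed < planned:
        reasons.append(f"{planned - executed} planned test case(s) not executed")
    open_critical = [i.issue_id for i in issues if i.is_open and i.severity == "critical"]
    if open_critical:
        reasons.append("open critical issues: " + ", ".join(open_critical))
    unplanned = [i.issue_id for i in issues if i.is_open and not i.has_action_plan]
    if unplanned:
        reasons.append("open issues without an action plan: " + ", ".join(unplanned))
    return (not reasons, reasons)


ok, why = exit_criteria_met(
    planned=120,
    executed=120,
    issues=[
        Issue("BUG-7", "minor", is_open=True, has_action_plan=True),
        Issue("BUG-9", "critical", is_open=False),
    ],
)
print(ok, why)  # True []
```

When the criteria are not met, the returned reasons are one way to capture the specific areas of concern that the team is expected to highlight at sign-off.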

Step 8: Sign-off

If the team has fulfilled the exit criteria, it can give the go-ahead for the application to ‘go live.’ If not, the team should highlight the specific areas of concern and leave the decision about taking the application live to senior management and other top-level stakeholders.

Challenges Faced in Creating Test Reports

In the past, organizations followed the Waterfall model of software development, under which creating a test report was relatively simple. Today, organizations use Agile, DevOps, and CI/CD, which have changed the requirements of an efficient test report.

The software testing team faces the following challenges while generating a test report.

Quick release rhythms

In the Waterfall development cycle, the QA team compiled and summarized the test report in spreadsheets during the final stages of development. There was ample time between two releases, which the team could use to compile results, generate reports, and make decisions.

With the advent of Agile development and DevOps, this has changed considerably. The team must complete testing much faster, and organizations have to make quality decisions not in months but often within weeks, days, or even hours.

If stakeholders do not get feedback within such a timeframe, the organization must either postpone the release or ship it with questionable quality. The challenge is to deliver feedback on the application's quality at a pace that matches these quick release rhythms.

High-volume, irrelevant data

In today's software testing landscape, test automation enables the QA team to perform more testing. With the proliferation of browsers and devices, the team can test multiple versions of the application on more device and browser combinations.

The combined effect of test automation and device proliferation is an enormous volume of test data. Actionable data is useful; the non-actionable portion is waste, also called ‘noise.’

Organizations now have colossal amounts of testing data, so they must separate valuable data from noise. Noise is caused by environmental instability, flaky test cases, and other factors that lead to false negatives whose root cause the team cannot determine.

Because every failure in the test report must be investigated, dealing with a high volume of irrelevant testing data is a major challenge when generating the report.
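One common heuristic for separating noise from genuine failures is to re-run tests on the same build: a test that both passes and fails on an unchanged build is likely flaky. This Python sketch assumes results arrive as (build, test name, passed) tuples; it is a rough filter rather than a root-cause analysis, since a consistent failure may still be environmental.

```python
from collections import defaultdict


def classify_failures(runs: list[tuple[str, str, bool]]) -> dict[str, set[str]]:
    """Split failing tests into consistent failures and likely-flaky tests.

    `runs` holds (build, test_name, passed) tuples, for example from re-running
    the suite on the same build. A test that both passed and failed on one build
    is treated as likely flaky (noise); a test that failed on every run of a
    build is a consistent failure that deserves investigation first.
    """
    outcomes = defaultdict(set)
    for build, test, passed in runs:
        outcomes[(build, test)].add(passed)

    result: dict[str, set[str]] = {"consistent_failures": set(), "likely_flaky": set()}
    for (_build, test), seen in outcomes.items():
        if seen == {False}:
            result["consistent_failures"].add(test)
        elif seen == {True, False}:
            result["likely_flaky"].add(test)
    return result


runs = [
    ("build-42", "test_login", True),
    ("build-42", "test_login", False),
    ("build-42", "test_payment", False),
    ("build-42", "test_payment", False),
    ("build-42", "test_search", True),
]
print(classify_failures(runs))
```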

Data from disparate sources

Organizations, especially large ones, have a colossal volume of testing data spread across many frameworks, tools, and teams, so the data comes from disparate sources.

This data may come from frameworks such as Appium and Selenium, and generating a test report from such disparate data is a challenge. The solution is to adopt a consistent method to capture and sort this data.
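One way to get that consistency is to convert every source into a single record type before building the report. The Python sketch below reads JUnit-style XML, which many test runners can produce (element and attribute details vary somewhat between tools), and a hypothetical in-house JSON format; the `Result` class, the source labels, and the JSON layout are all assumptions made for illustration.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    source: str  # label for the suite or team that produced the result
    name: str
    status: str  # normalized to "passed", "failed", or "skipped"


def from_junit_xml(xml_text: str, source: str) -> list[Result]:
    """Read JUnit-style XML, a report format that many test runners can emit."""
    results = []
    for case in ET.fromstring(xml_text).iter("testcase"):
        if case.find("failure") is not None or case.find("error") is not None:
            status = "failed"
        elif case.find("skipped") is not None:
            status = "skipped"
        else:
            status = "passed"
        name = f"{case.get('classname')}.{case.get('name')}"
        results.append(Result(source, name, status))
    return results


def from_custom_json(json_text: str, source: str) -> list[Result]:
    """Read a hypothetical in-house format: a list of {"test": ..., "outcome": ...} objects."""
    mapping = {"PASS": "passed", "FAIL": "failed", "SKIP": "skipped"}
    return [Result(source, item["test"], mapping[item["outcome"]]) for item in json.loads(json_text)]


junit = """<testsuite name="web">
  <testcase classname="LoginTest" name="valid_user"/>
  <testcase classname="LoginTest" name="bad_password"><failure message="assertion failed"/></testcase>
</testsuite>"""
mobile = '[{"test": "BookingFlow.search", "outcome": "SKIP"}]'

combined = from_junit_xml(junit, "web-suite") + from_custom_json(mobile, "mobile-suite")
for r in combined:
    print(r.source, r.name, r.status)
```

Once every source yields the same `Result` records, the summary, defect, and flakiness calculations can run over the combined list regardless of which framework produced the data.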

Despite the evolution from the Waterfall model to Agile, the end goal of a test report remains the same: to provide actionable feedback. The key is to filter out false negatives and noise and concentrate on genuine issues, which helps achieve a short mean time to resolution (MTTR).


Best Practices for Writing Effective Test Reports

Here are some best practices to follow while creating test reports.

- Close each test execution cycle and then publish the test report.

- Adhere to a standard test report template.

- Include information about all testing cycles to ensure that the stakeholders get a proper and clear picture of the efforts of the testing team.

- Ensure that the information is to the point and can be easily digested.

- Include evaluation metrics, such as schedule slippage, test efficiency, effort ratio, schedule variance, expenses of defect identification, test case adequacy, test case effectiveness, etc.
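Definitions of these metrics vary between organizations. As a hedged example, this Python sketch computes schedule variance as the percentage difference between actual and planned testing duration, and test case effectiveness as defects found per 100 executed test cases; both are common forms rather than universal standards, and the function names are hypothetical.

```python
def schedule_variance_pct(planned_days: float, actual_days: float) -> float:
    """Percentage by which actual testing time differed from the plan (positive = overrun)."""
    if planned_days <= 0:
        raise ValueError("planned_days must be positive")
    return round(100 * (actual_days - planned_days) / planned_days, 1)


def effectiveness_pct(defects_found: int, test_cases_executed: int) -> float:
    """Test case effectiveness: defects found per 100 executed test cases (one common definition)."""
    if test_cases_executed <= 0:
        raise ValueError("test_cases_executed must be positive")
    return round(100 * defects_found / test_cases_executed, 1)


print(schedule_variance_pct(planned_days=20, actual_days=23))  # 15.0
print(effectiveness_pct(defects_found=18, test_cases_executed=150))  # 12.0
```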

- Avoid complex technical jargon, since many different stakeholders need to understand the report.

- Save the test report in a document repository system to ensure its proper maintenance.

Once you generate the test reports, share them with stakeholders, customers, and the team so that everyone gets an overview of the entire test execution cycle and can learn from it and improve.

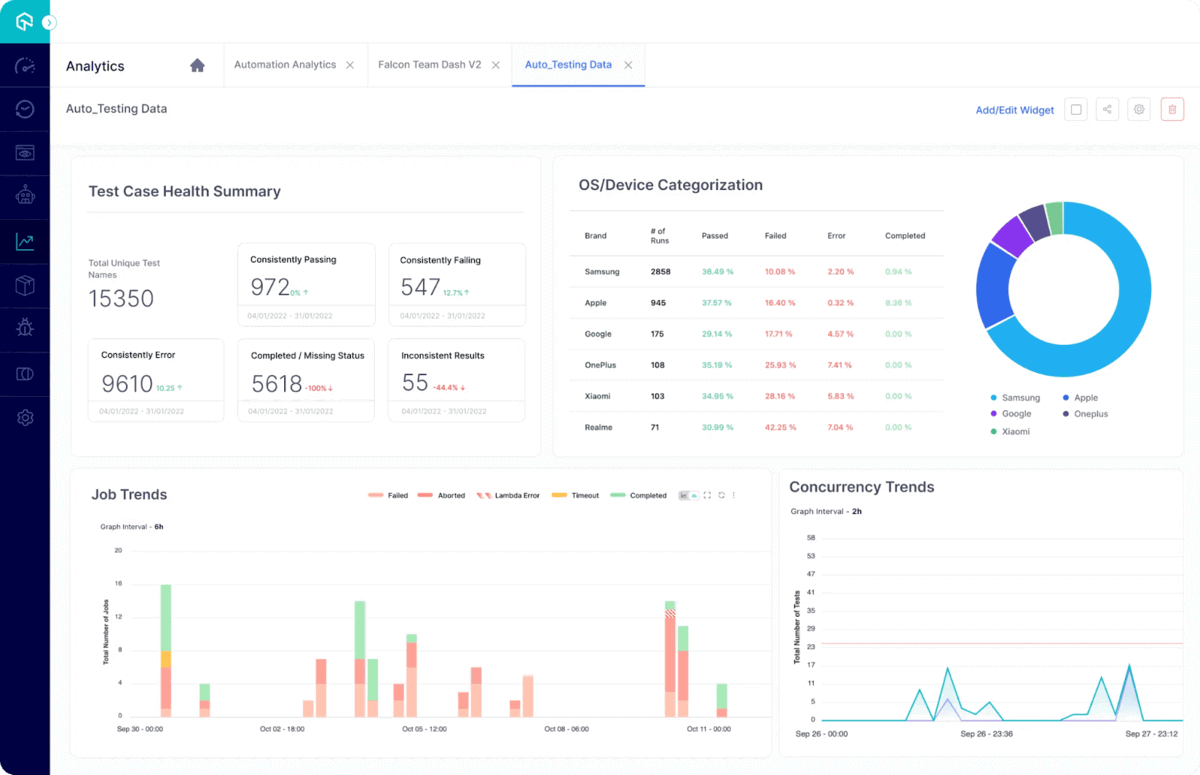

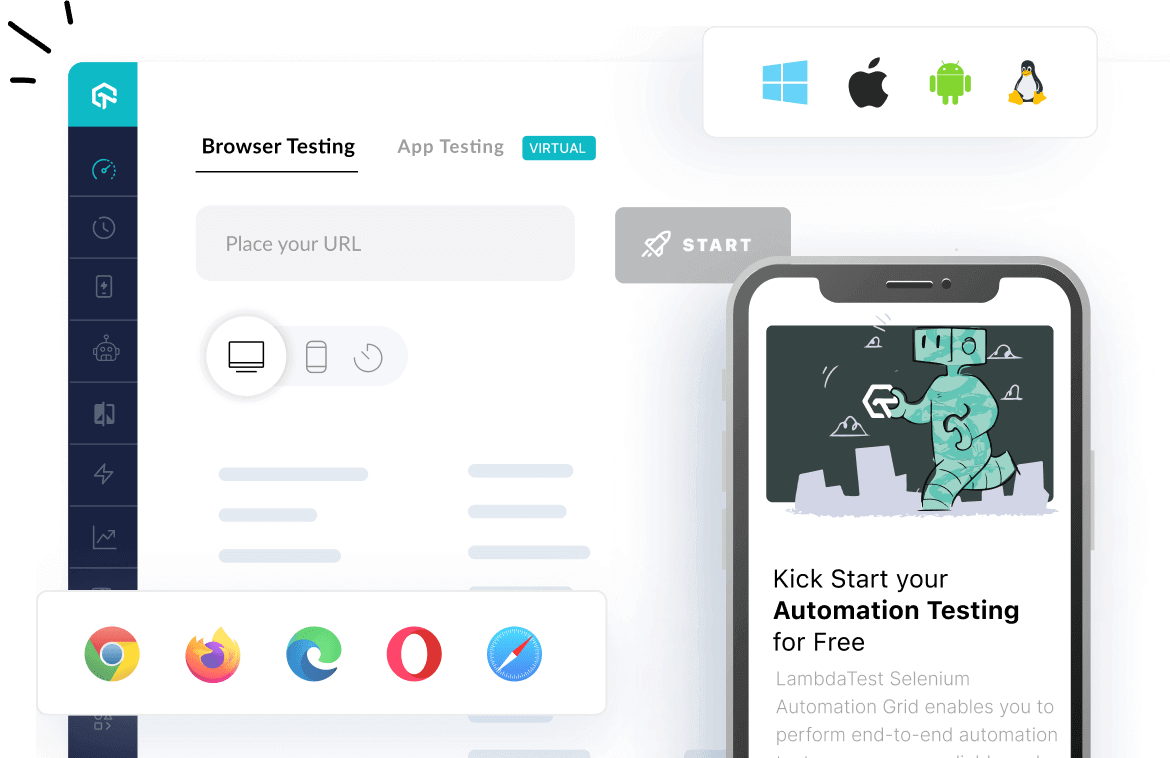

Continuous quality cloud testing platforms like LambdaTest have made test reporting much easier. With the LambdaTest Test Analytics platform, all your test execution data can be displayed on one dashboard, allowing you to make informed decisions. By building custom dashboards, you can share metrics specific to your stakeholders, customers, and team and make your dashboards more useful with widgets and modules.

The LambdaTest Test Analytics and Observability platform allows users to customize their analytics dashboards according to their testing needs. Users can also share real-time test execution data with their team at the click of a button. This helps close the gap between data, insight, and action, enabling better and faster decisions.

Conclusion

Test reports show what the tester thinks of a product. The test analysis report informs stakeholders about the product's current status and possible risks. It enables teams to identify ways to improve the product through valuable insights and feedback.

Removing irrelevant, noisy data is necessary to find bugs quickly and get quality results from the test report. This helps your team focus on and resolve the critical issues that need attention.

About the Author

Irshad Ahamed is an optimistic and versatile software professional and technical writer with around four years of solid working experience at various companies. Irshad strives to deliver excellence at work, applying expertise and skills wherever they are required, and adapts to changing technology by upgrading the skills the profession demands.
