- October 17 2023

Python Visual Regression Testing: A Complete Tutorial

This tutorial on Python visual regression testing explores visual regression testing in depth and offers guidance on implementing it with the pytest framework.

OVERVIEW

In today's software landscape, every detail on a screen holds significance. A misaligned pixel or color shade can dramatically alter a user's experience and perception. Given the dynamic nature of modern applications, minute alterations in code can unintentionally wreak havoc on visual elements. For teams committed to impeccable user interface designs, navigating this terrain can be tricky and demanding. How can Quality Assurance Engineers be certain that developers' code changes won't disturb the visual equilibrium of their platform?

This is where visual regression testing steps into the spotlight. Python's combination of robust libraries, automation capabilities, and a supportive community makes it a compelling choice for teams looking to ensure the visual consistency and quality of their web applications.

Leveraging Python's vast suite of tools and libraries offers a streamlined method to automate identifying unplanned visual changes. By juxtaposing an application's appearance before and after modifications, any misalignments are instantly highlighted. In an era where test automation dominates, this level of visual assurance is paramount.

As the digital domain grows more centered around visual content, the quest for visual precision parallels the need for operational correctness. With the capabilities of Python visual regression testing, not only is this quest feasible, but it's also remarkably streamlined.

In this pytest tutorial on Python visual regression testing, we will discuss visual regression testing in detail and how to perform it with the pytest framework.

Introduction to Visual Regression Testing

In the ever-expanding digital landscape, filled with dynamic web elements, adaptive designs, and a plethora of animations, it becomes crucial to ensure the visual integrity of online applications. This is where visual testing comes into play. But what exactly does this concept entail, and why is it so fundamental in upholding the quality of software solutions? Let's delve deeper into this topic.

Visual testing, also known as visual checks, is a unique Quality Assurance technique that focuses on examining the visual presentation of a website or application across different iterations to identify any unintended changes. This methodology guarantees that the software's visual display remains faithful to its original design blueprint, even when it undergoes revisions or upgrades.

Here's a snapshot of how it works:

Establishing the Gold Standard

During the initial test run, the application's key UI components are captured as snapshots. These form the “gold standard” against which later versions are compared.

Snapping Updated Images

Once revisions are made to the app (like after an upgrade), fresh snapshots, termed "updated images", are procured.

Image Analysis

The gold standard and the updated images are juxtaposed using specialized image analysis tools. These applications meticulously pinpoint differences, often on a pixel level.

Highlighting Discrepancies

Variances between the images, if found, are flagged for the design or coding team to rectify.
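The four steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a production workflow: the function name `check_against_baseline` and the file names are hypothetical, and a byte-for-byte file comparison stands in for real image analysis.

```python
import shutil
import tempfile
from pathlib import Path


def check_against_baseline(screenshot: Path, baseline_dir: Path) -> str:
    """Return 'baseline_created', 'match', or 'mismatch' for one screenshot."""
    baseline_dir.mkdir(parents=True, exist_ok=True)
    baseline = baseline_dir / screenshot.name
    if not baseline.exists():
        # First run: the snapshot becomes the gold standard.
        shutil.copyfile(screenshot, baseline)
        return "baseline_created"
    # Later runs: compare the updated image with the gold standard.
    # (Assumption: a byte comparison stands in for pixel-level analysis.)
    same = baseline.read_bytes() == screenshot.read_bytes()
    return "match" if same else "mismatch"


# Demo with placeholder files instead of real screenshots
workdir = Path(tempfile.mkdtemp())
shot = workdir / "home.png"

shot.write_bytes(b"version-1 pixels")
first = check_against_baseline(shot, workdir / "baselines")

second = check_against_baseline(shot, workdir / "baselines")

shot.write_bytes(b"version-2 pixels")
third = check_against_baseline(shot, workdir / "baselines")

print(first, second, third)
```

The first call stores the baseline, the second finds no change, and the third flags the modified snapshot for review.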

Quality in software has many facets. Beyond its core functionality, the visual component is a cornerstone, especially from the vantage point of the end-user. Here’s why visual testing is non-negotiable:

- User Engagement (UX): A software's aesthetic design significantly sways user contentment. Unanticipated visual tweaks can destabilize user interaction and dilute their confidence.

- Rapid Insights: Identifying and rectifying visual glitches early in development saves time and cost. The key is to catch a defect before it reaches the live environment.

- Holistic Review: Relying solely on manual checks might miss nuanced visual deviations, especially across diverse devices, screen dimensions, or browsers. Automated visual checks ensure thorough scrutiny.

- Trust in Deployments: With frequent software updates, teams can release newer iterations with confidence, given that visual consistency has been verified.

- Uniform Brand Image: For corporate entities, consistent brand representation is crucial. Visual tests confirm that design elements like logos and color schemes remain unchanged through iterations.

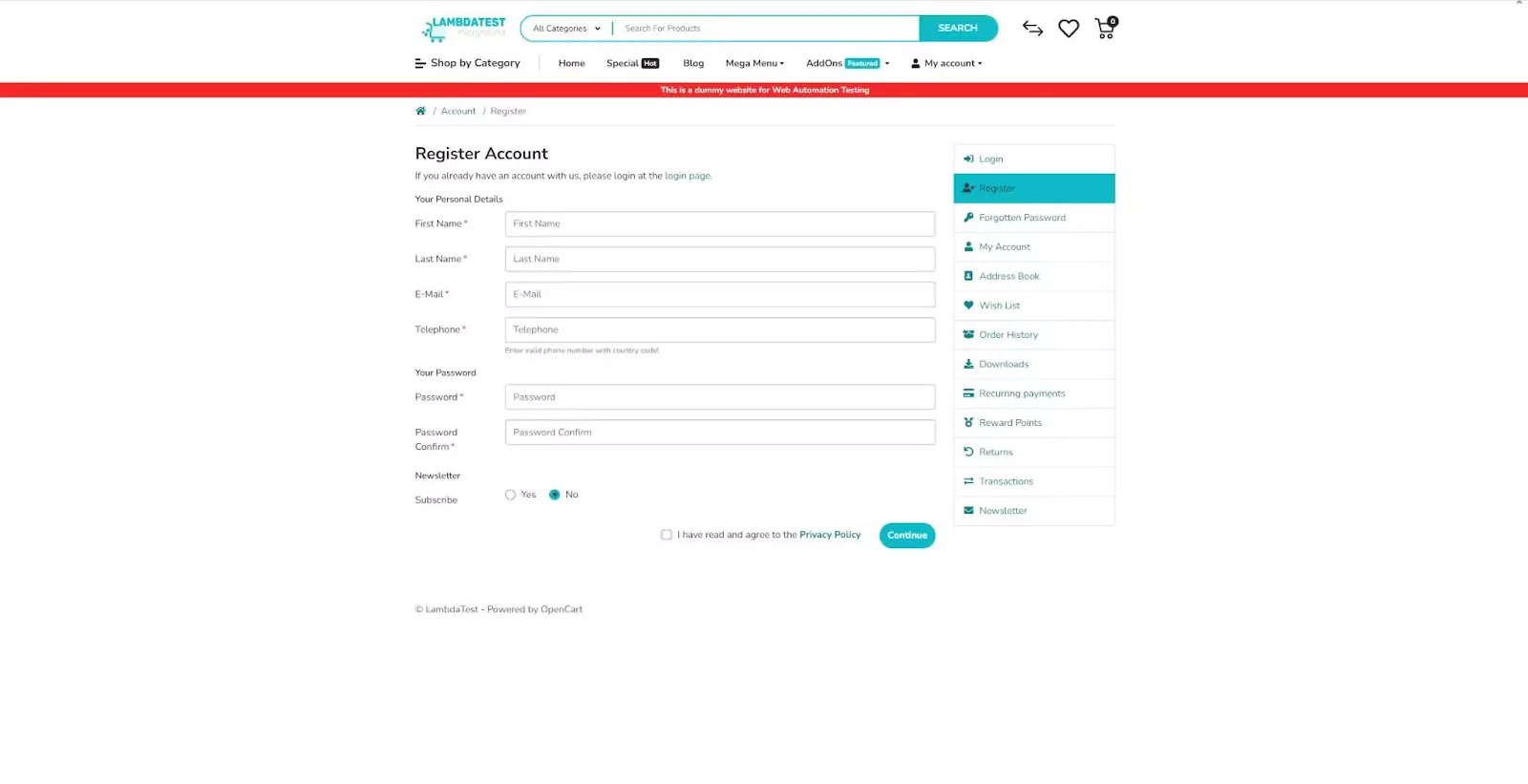

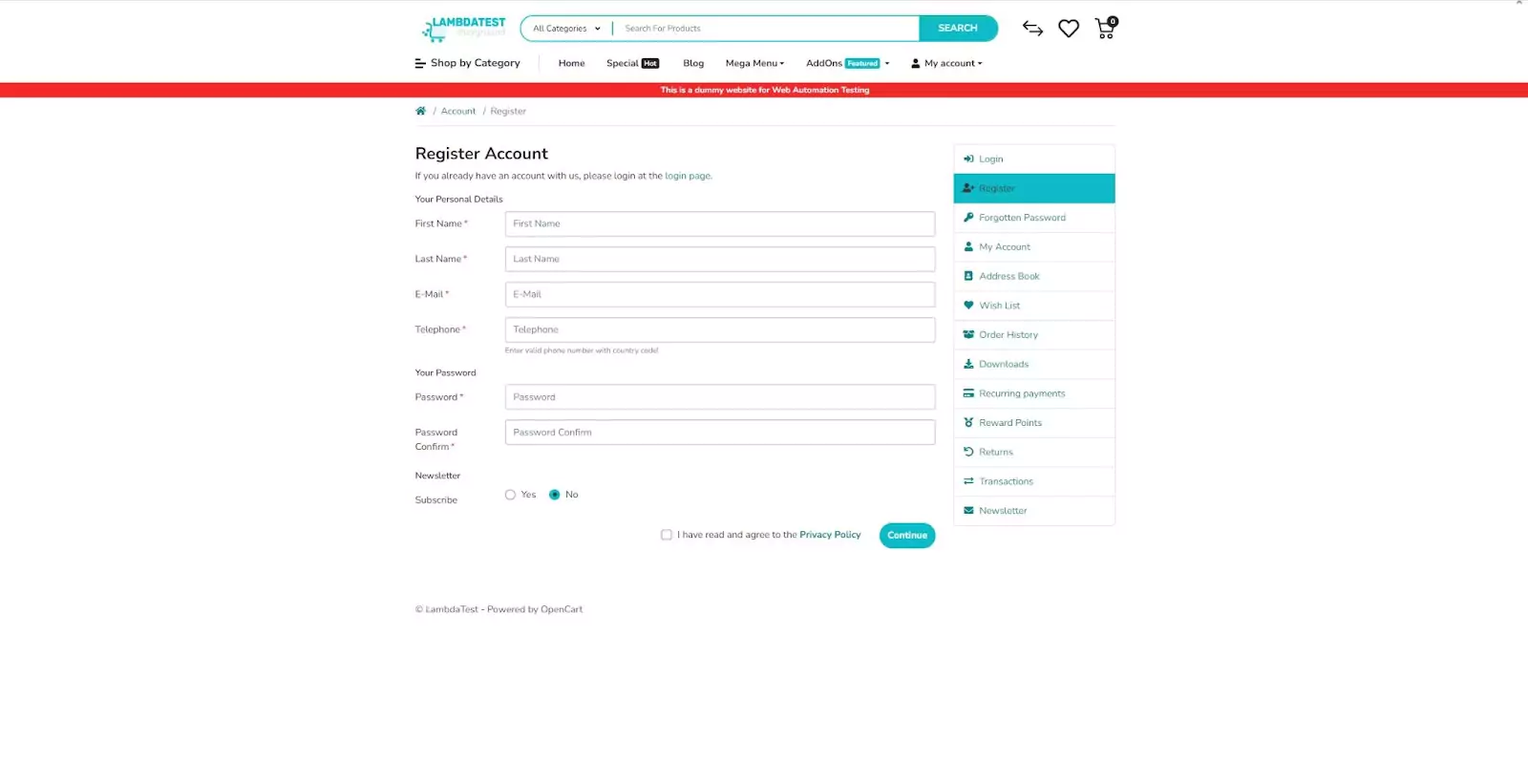

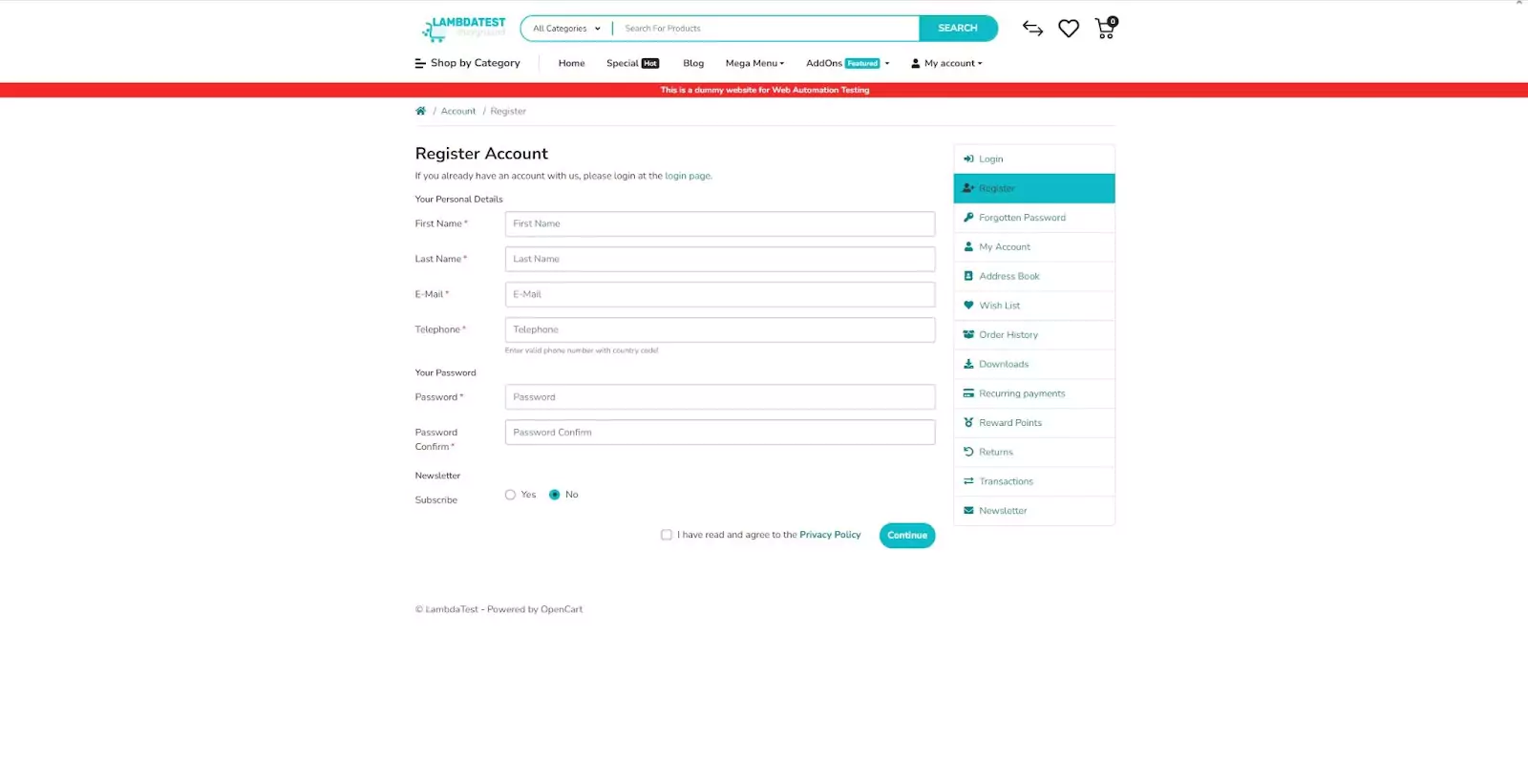

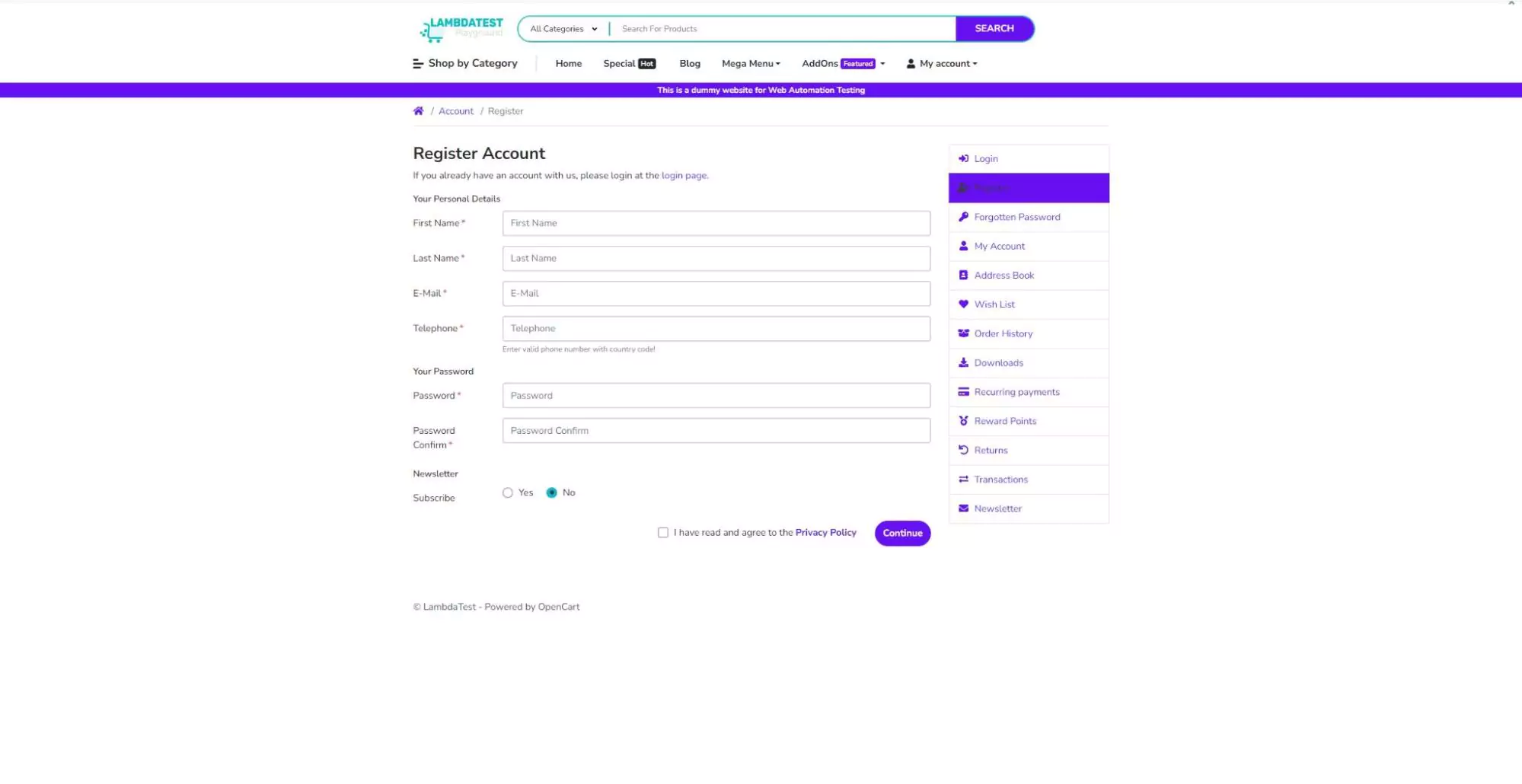

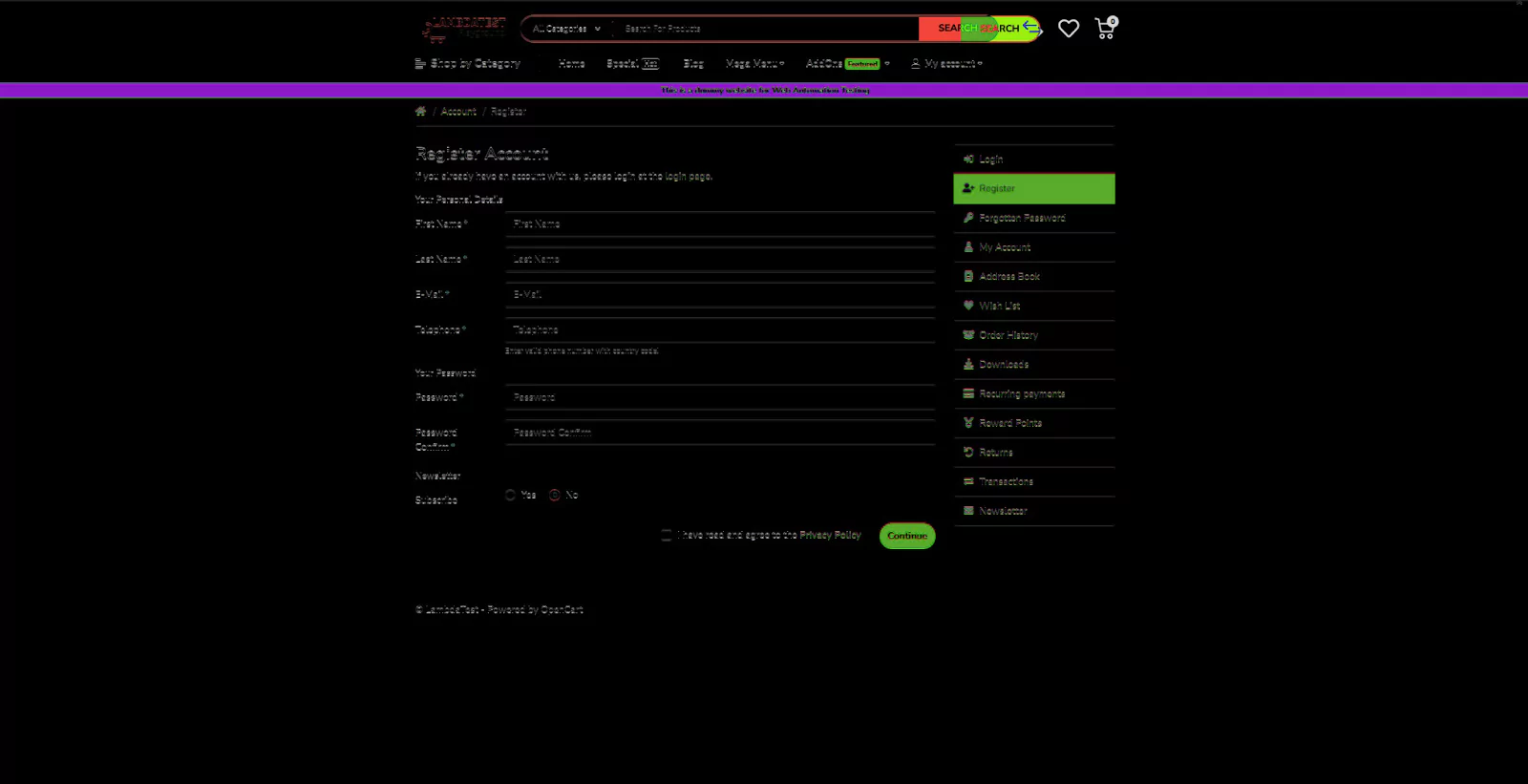

Imagine that you are testing an eCommerce website, like the one provided in the LambdaTest Playground website.

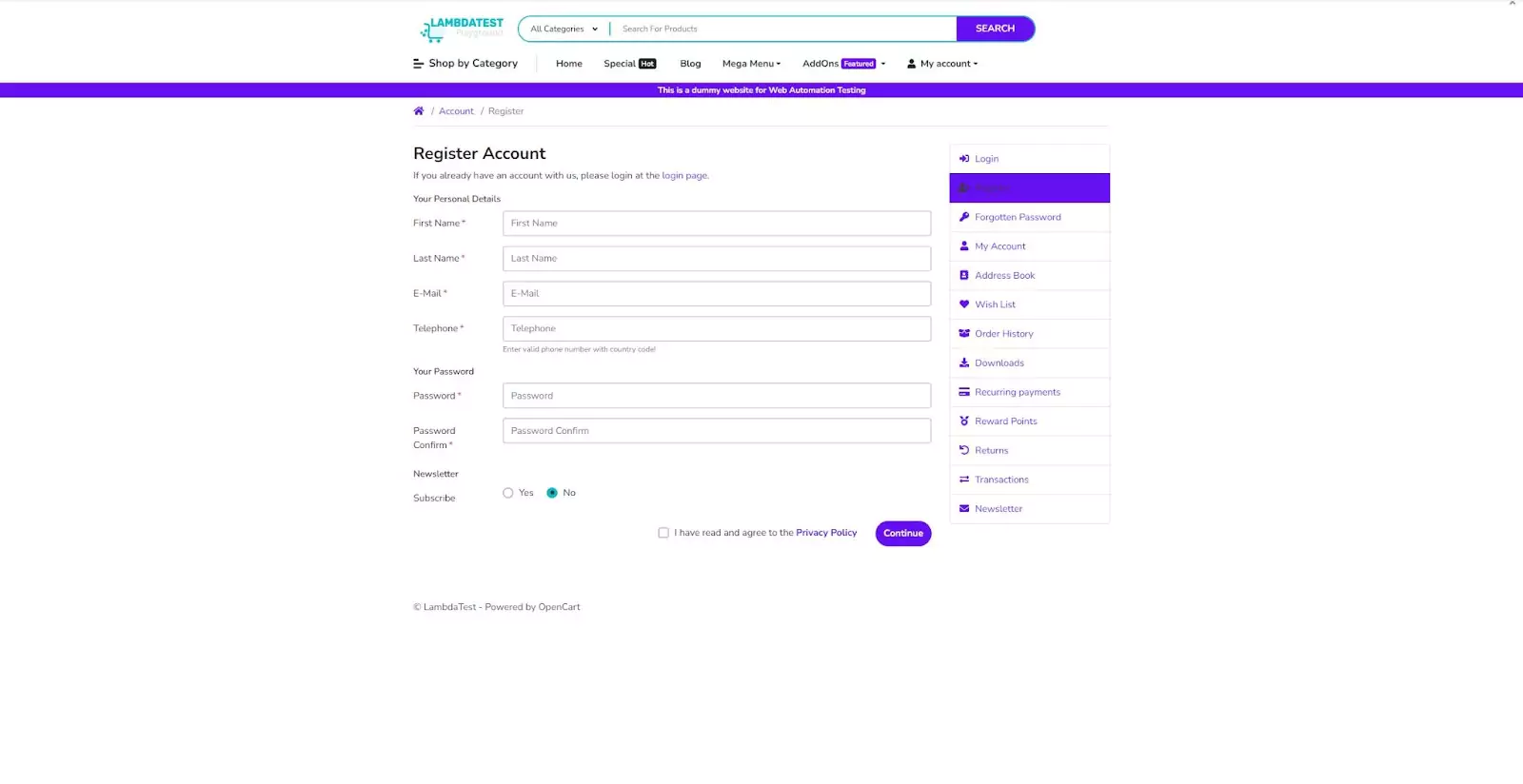

Consider that after adding a new feature or a bug fix, the website's visual is like the screenshot below.

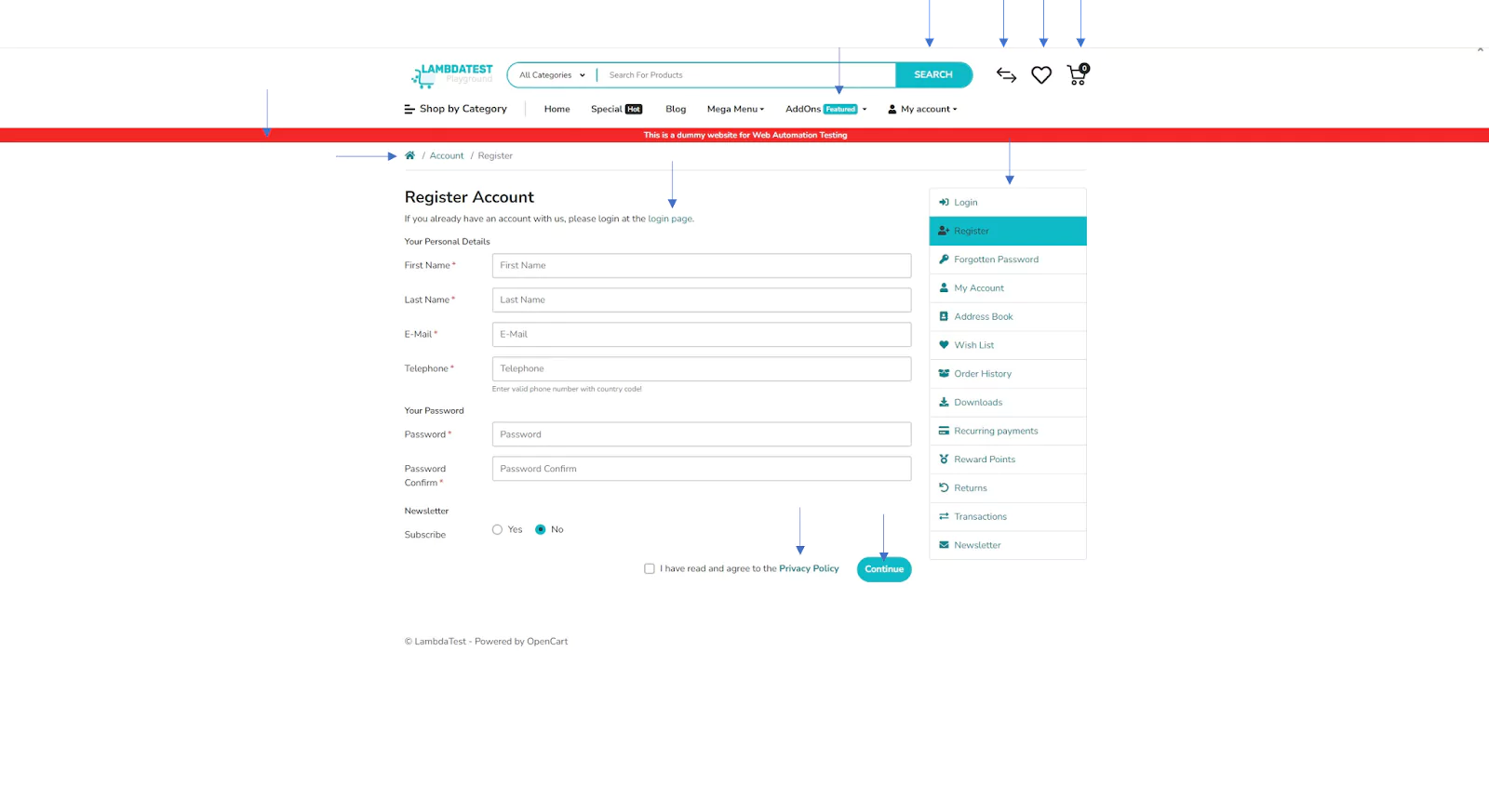

If you run some functional tests, you will likely catch some of the differences, mainly those caused by missing screen components, but others will go unnoticed. Look at this third image to see all the differences highlighted.

Visual testing acts as the gatekeeper, guaranteeing that innovations don't unintentionally disturb the visual appeal and interaction quality of our tech offerings. As they say, "Images resonate deeply." In the tech sphere, a visual misalignment can have loud repercussions, underscoring the need for rigorous visual checks.

Basics of Regression Testing

Regression testing is the pivot of software quality assurance. At its essence, it's re-evaluating software to ensure that new implementations or edits haven’t compromised its existing behavior. Consider it the safety net, catching any discrepancies that may arise post modifications.

At the heart of regression testing are two pivotal goals:

- Guaranteeing that recent modifications, whether bug fixes or updates, haven't introduced fresh issues.

- Validating that these new updates haven't inadvertently reactivated any prior defects.

In simpler terms, it's the process of verifying that post-alteration, the software continues to deliver its intended performance without hitches. Within the Software Development Life Cycle (SDLC), regression testing stands tall:

- Adapting to Change: As digital solutions mature and evolve, they undergo myriad changes like error rectifications and feature additions. Regression testing ensures harmony among these changes.

- Pre-Deployment Checks: It is the final test before a software’s market launch, ascertaining that recent modifications haven't negatively influenced the existing features.

- During Software Upkeep: Especially in the software's maintenance phase, where updates are the norm, regression testing confirms seamless coexistence between old and new elements.

Types of Regression Testing

The broad umbrella of regression testing covers multiple types, each serving a specific purpose in validating software changes.

- Unit Regression Testing: Focused on the smallest part of the software, unit regression testing ensures that a specific application section, such as a function or method, still works correctly after modifications.

- Functional Regression Testing: This type looks at specific software application functions. For instance, if an eCommerce site adds a new payment method, functional regression testing would ensure that this addition hasn’t affected other payment methods.

- Integration Regression Testing: This focuses on the points where different units or functions of software interact. It ensures that the interaction points between software units remain fault-free while integrating new changes.

- Visual Regression Testing: Moving beyond the traditional domain, visual regression testing is a subtype that focuses on ensuring the visual aspects of a software application remain consistent before and after changes. It's less about functionality and more about visual representation, ensuring the user interface looks consistent across different versions.

Importance of Visual Regression Testing

With the rising significance of user experience in today's software landscape, the visual dimension of applications has gained paramount importance.

- Ensuring UI Consistency Across Versions: With frequent software updates, there's always a risk that the user interface might see unintended changes. Visual regression testing ensures that the look and feel of the application remain consistent across various versions, providing users with a consistent experience.

- Detecting Unintended Visual Changes: Sometimes, a backend change can lead to an unforeseen visual anomaly in the front end. Visual regression testing automates detecting such changes, so that even small visual discrepancies don't go unnoticed.

By understanding the foundation of regression testing, developers and testers can ensure that software changes, while aimed at enhancement, don’t backfire by adversely affecting existing functionalities or aesthetics. It's a balance between innovation and consistency, and regression testing is the tool to maintain this balance.

Visual Regression Testing Tools/Frameworks in Python

In software development, visual regression testing plays a pivotal role in maintaining the visual integrity of applications. Python, with its plethora of libraries, offers several tools for this purpose. In this tutorial section of Python visual regression testing, we will explore three such tools: Pillow, PixelMatch, and SeleniumBase.

Pillow

Pillow is an open-source Python Imaging Library that adds image processing capabilities to your Python interpreter. It supports many file formats and is extremely powerful for image opening, manipulation, and saving tasks.

Methods

| Method | Description |
|---|---|
| Image.open() | Opens and identifies the given image file |
| ImageChops.difference() | Computes the absolute pixel-wise difference between two images |
| Image.save() | Saves the image under the given filename |

Benefits

- Extensive file format support

- Robust image processing capabilities

- Wide range of functionalities, including filtering, transforming, and enhancing images

Disadvantages

- Learning curve can be steep for beginners

- May be overkill for simple visual regression tasks

PixelMatch

PixelMatch is a Python library designed for image comparison. It is specifically tuned for comparing PNG images and is known for its accuracy and performance in detecting visual differences.

Methods

| Method | Description |
|---|---|
| pixelmatch() | Compares two images and returns the pixel difference count and a difference image |

Benefits

- Highly accurate in detecting pixel differences

- Optimized for performance

- Easy to integrate and use

Disadvantages

- Limited to PNG images

- Less versatile compared to more comprehensive image processing libraries

SeleniumBase

SeleniumBase is a robust testing framework that integrates Selenium WebDriver for web automation testing. It supports visual testing, allowing testers to perform visual regression tests efficiently using Selenium.


Methods

| Method | Description |
|---|---|
| open() | Opens the specified URL in the browser |
| check_window() | Performs a visual comparison check of the current web page against a baseline |

Benefits

- Provides a comprehensive solution for web testing, including visual testing.

- Integrates seamlessly with Selenium WebDriver.

- Supports multiple browsers and platforms.

Disadvantages

- There is a steeper learning curve for those new to Selenium and web automation.

- Requires additional setup and configuration for web testing.

Writing your First Visual Regression Test in Python

Visual regression testing is a form of software testing that focuses on ensuring that UIs appear consistently across different environments, versions, and screen resolutions. In this section, we will set up and write a first visual regression test in Python, with practical examples using popular libraries like Pillow, PixelMatch, and SeleniumBase.

Installation and Configuration

Before writing a test, ensure Python is installed. Then install the packages used in this tutorial by running the `pip install pillow pixelmatch seleniumbase` command.

Basic Structure of a Test

Visual regression testing is an integral aspect of the software development process, primarily aimed at ensuring the visual consistency of an application. It plays a pivotal role in identifying any discrepancies in the visual appearance of an application across various versions, screen resolutions, and environments. To understand it further, let's dissect the fundamental structure of a visual regression test.

Opening or Loading Images/Screenshots

The initial step in the visual regression testing process is acquiring the images or screenshots to be compared. Typically, this involves opening or loading two distinct images: baseline and test images. The baseline image is a previously approved version of the application's UI, representing the expected appearance. On the other hand, the test image is a screenshot captured from the current state of the application, reflecting any recent changes or modifications.

Comparison of Images

Once the baseline and test images are loaded, the core of the visual regression test unfolds – comparing these images. This step is crucial to determine whether visual differences exist between the two images. Advanced algorithms are employed to meticulously compare each pixel of the baseline image with the corresponding pixel of the test image. This pixel-by-pixel comparison ensures the identification of even the minutest discrepancies in color, layout, text, or any other visual element.
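To make the pixel-by-pixel idea concrete, here is a minimal sketch in plain Python. It assumes images are represented as nested lists of RGB tuples; the `count_mismatches` function and its `tolerance` parameter are hypothetical names introduced for illustration, not part of any library discussed here.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
PixelGrid = List[List[Pixel]]


def count_mismatches(baseline: PixelGrid, test: PixelGrid, tolerance: int = 0) -> int:
    """Count pixels whose largest per-channel difference exceeds tolerance."""
    if len(baseline) != len(test) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, test)
    ):
        raise ValueError("images must have the same dimensions")
    mismatches = 0
    for row_a, row_b in zip(baseline, test):
        for pixel_a, pixel_b in zip(row_a, row_b):
            # Compare each colour channel of the two corresponding pixels.
            delta = max(abs(a - b) for a, b in zip(pixel_a, pixel_b))
            if delta > tolerance:
                mismatches += 1
    return mismatches


white, black, off_white = (255, 255, 255), (0, 0, 0), (250, 250, 250)
baseline = [[white, white], [white, white]]
test = [[white, black], [off_white, white]]

strict = count_mismatches(baseline, test)                 # every change counts
lenient = count_mismatches(baseline, test, tolerance=10)  # small shifts ignored
print(strict, lenient)
```

With a tolerance of zero both changed pixels are reported; a small tolerance lets the near-white pixel pass while still catching the black one.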

Highlighting or Saving Differences

Following the comparison, the test proceeds to the next critical phase – highlighting or saving the differences. If any disparities are detected between the baseline and test images, these are prominently highlighted, making it easier for developers and testers to visualize the changes. This often involves creating a diff image, a composite representation of the baseline and test images, wherein the differences are accentuated for clarity. The diff image serves as a visual guide, quickly and efficiently identifying unintended alterations and facilitating rectification.
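One possible way to highlight differences with Pillow is sketched below. It uses synthetic in-memory images instead of real screenshots, and the output file name is a placeholder; `ImageChops.difference()` and `getbbox()` locate the changed region, and `ImageDraw` outlines it on a copy of the test image.

```python
import tempfile
from pathlib import Path

from PIL import Image, ImageChops, ImageDraw

# Synthetic stand-ins for real screenshots (assumption: same size and mode).
baseline = Image.new("RGB", (100, 60), "white")
test = baseline.copy()
test.paste((255, 0, 0), (20, 10, 40, 30))  # simulate an unintended red patch

# Non-zero pixels in the difference image mark where the two images disagree.
diff = ImageChops.difference(baseline, test)
bbox = diff.getbbox()  # None when the two images are identical

if bbox is not None:
    # Outline the changed region on a copy of the test image.
    highlighted = test.copy()
    ImageDraw.Draw(highlighted).rectangle(bbox, outline=(0, 0, 255), width=2)
    out_path = Path(tempfile.mkdtemp()) / "highlighted_diff.png"
    highlighted.save(out_path)
    print(f"Differences found inside {bbox}, saved to {out_path}")
```

A single bounding box is a coarse summary: when changes are scattered across the page, it grows to enclose all of them.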

Practical Examples

We will now create practical examples for Pillow, PixelMatch, and SeleniumBase libraries. For these examples, we will use three images as a base.

- image1.png

- image2.png (identical to image1.png)

- image3.png (with some differences from image1.png and image2.png)

Example 1: Pillow

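```python
from PIL import Image, ImageChops

# Load the two images to compare
image1 = Image.open('../images/image1.jpg')
image2 = Image.open('../images/image3.jpg')

# Compute the absolute pixel-wise difference between the two images
diff = ImageChops.difference(image1, image2)

# Save the difference image
diff.save("../images/pillowdiff.png")
```

Explanation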

The code begins by importing necessary modules from Pillow. We then open two images using Image.open(). The ImageChops.difference() function computes the difference between the two images. The resulting difference image (diff) is then saved with diff.save().

You can run the above code just by calling the below command:

```bash
python sample.py
```

This is the generated image:

Example 2: PixelMatch

```python
from PIL import Image
from pixelmatch.contrib.PIL import pixelmatch

# Load the two images to compare
image1 = Image.open('../images/image1.jpg')
image2 = Image.open('../images/image2.jpg')

# Blank image onto which the differences will be drawn
img_diff = Image.new("RGBA", image1.size)

# note how there is no need to specify dimensions
mismatch = pixelmatch(image1, image2, img_diff, includeAA=True)

img_diff.save('../images/pixelmatchdiff.png')
```

Explanation

After importing the necessary modules and loading the images, we create a new blank image (img_diff) with the same size as our input images. The pixelmatch() function is then used to compare image1 and image2, with the differences drawn onto img_diff. Any mismatched pixels are then highlighted, and the result is saved.

You can run the above code just by calling the below command:

```bash
python sample.py
```

This is the generated image:

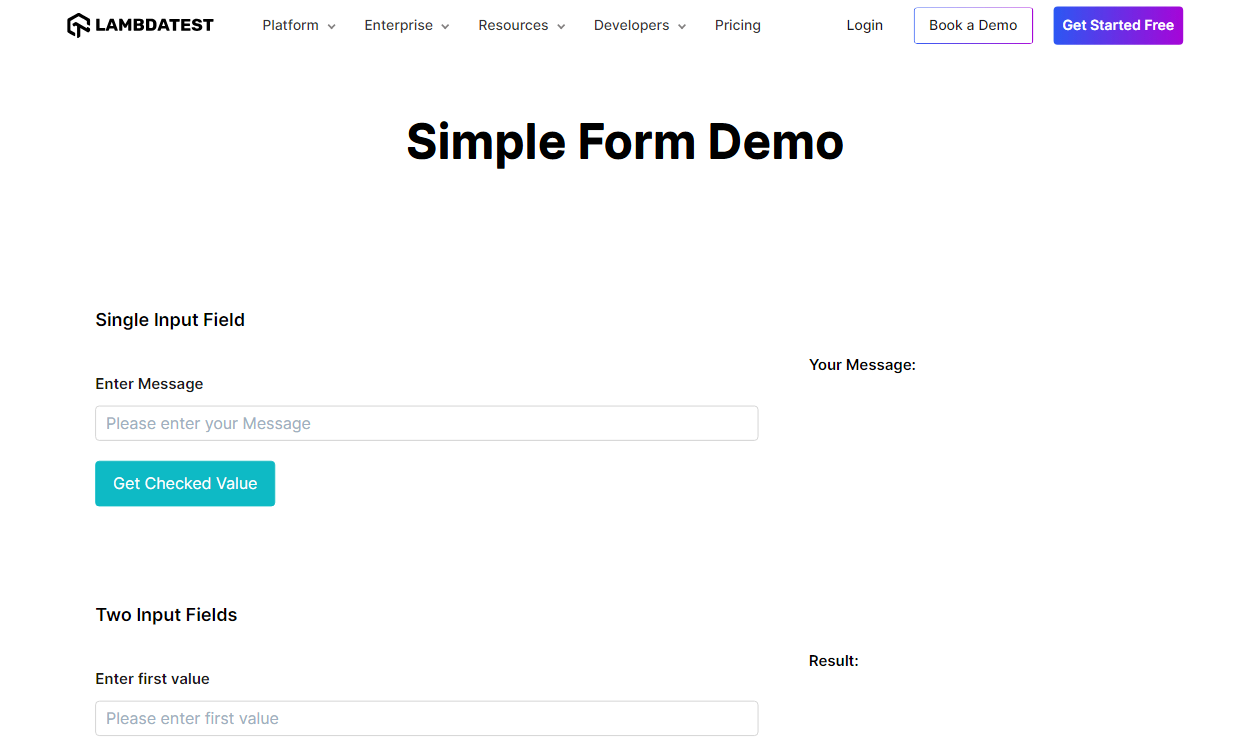

Example 3: SeleniumBase

```python
from seleniumbase import BaseCase
import pytest


class VisualTest(BaseCase):
    def test_visual(self):
        self.open('https://www.lambdatest.com/selenium-playground/simple-form-demo')
        self.check_window(name="main_window")


# This code block ensures that the test runs if this script is executed directly.
if __name__ == "__main__":
    pytest.main()
```

Explanation

The example leverages SeleniumBase for web-based visual testing. Within the VisualTest class, the test_visual method opens a webpage using self.open(). The self.check_window() function then captures the current state of the window for Python visual regression testing. The provided name ("main_window") acts as an identifier for the baseline image. The test will compare the captured image to the baseline and report any discrepancies. Finally, if the script is executed directly, the test will run using pytest.

You can run the above code just by calling the below command:

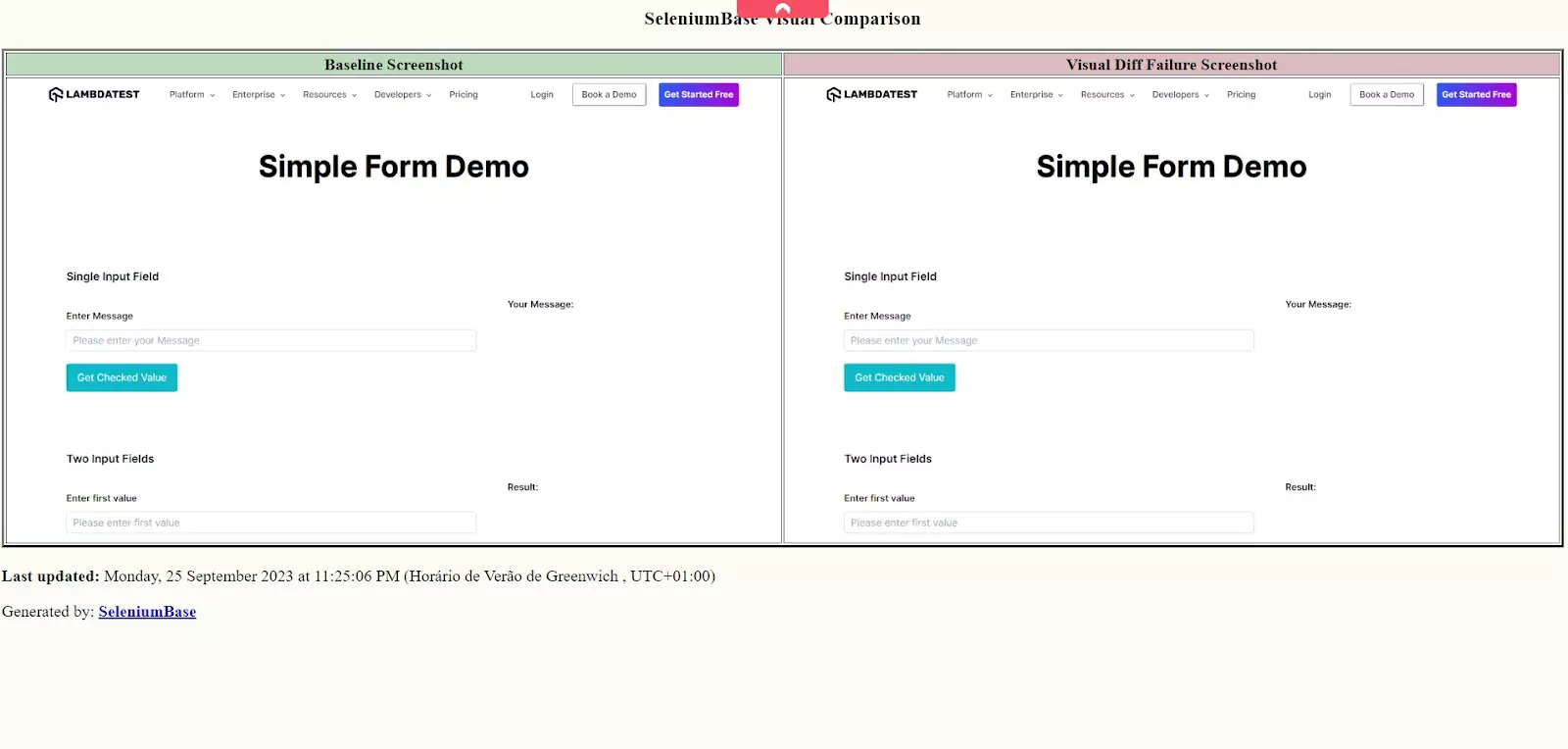

```bash
pytest
```

When running the first time, SeleniumBase generates the below image and sets it as a baseline.

If you rerun the test, SeleniumBase generates another image and compares it with the baseline.

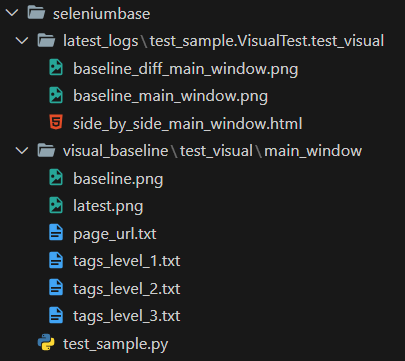

This is the project structure after executing the script twice:

You can also see that it generated a side_by_side_main_window.html file with both images side by side.

Python offers diverse tools for visual regression testing, each with its strengths. Whether you're testing static images or dynamic web pages, understanding the basics and exploring the capabilities of these libraries will ensure you maintain visual consistency throughout your application.

Integrating Python Visual Regression Testing into CI/CD

In today's fast-paced development landscape, CI/CD has emerged as a linchpin for delivering frequent and reliable software releases. By incorporating Python visual regression testing into this pipeline, teams can ensure that UI and design quality remain consistent throughout the software's lifecycle.

Continuous Integration (CI) is the practice of frequently integrating code changes into a shared repository, where automated builds and tests are run. Continuous Deployment (CD) extends this by automatically deploying changes to production environments after testing.

CI/CD plays a central role in test automation, for the following reasons:

- Rapid Feedback: CI/CD provides instant feedback, allowing developers to address and fix issues immediately.

- Consistent Testing Environment: Automating tests within a CI/CD framework ensures they are run consistently, eliminating discrepancies.

- Reduced Human Error: Automation reduces the chances of manual errors during deployment, leading to more reliable releases.

- Faster Time-to-Market: With automated tests providing instant feedback, the overall time from development to deployment is reduced, delivering features to users faster.

Importance of Visual Testing integration with CI/CD

Incorporating Python visual regression testing into a CI/CD pipeline transcends traditional quality assurance. While automated unit and integration tests verify the functionality, visual tests ensure the software's appearance remains consistent and free from defects across iterations. Here's why integrating visual testing with CI/CD is paramount:

- Immediate Feedback Loop: Just as CI provides instant feedback on code integrations, visual testing within the CI/CD pipeline instantly highlights visual discrepancies. This immediacy allows developers to fix UI glitches without delaying the deployment process, maintaining the pace of delivery.

- Consistent User Experience (UX): Frequent releases, while beneficial, can inadvertently introduce visual anomalies. Integrated visual testing ensures that the user experience remains consistent even with rapid deployments, preventing unexpected UI changes from reaching the end users.

- Enhanced Collaboration: By catching visual regressions early in the development cycle, designers, developers, and product managers can collaborate more effectively. They can pinpoint the cause of an issue, whether it's a design choice, a code change, or an overlooked requirement.

- Reduced Manual QA Effort: Running visual tests automatically as part of the CI/CD process diminishes the need for exhaustive manual UI checks. This expedites the testing phase and reduces the likelihood of human oversights.

- Build Confidence: Knowing that visual tests continually check for discrepancies with each integration builds confidence in the release process. Teams can be assured that deployments function as intended and look the part.

- Market Responsiveness: In competitive markets, being first can be a differentiator. By verifying visual quality through integrated testing, businesses can rapidly deploy features, enhancements, or fixes, responding to market demands efficiently.

When Python visual regression testing is woven into CI/CD, it puts shift-left testing into practice: issues are caught earlier and fixed sooner, and the software's visual integrity is maintained, supporting a consistently high-quality user experience with each release.
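As a rough sketch of how a pipeline step might turn visual results into a pass/fail signal, the function below maps per-screenshot mismatch ratios to a process exit code. The name `visual_gate`, the `max_ratio` threshold, and the sample ratios are all assumptions made for illustration; a real pipeline would feed in ratios produced by the comparison tool in use.

```python
from typing import Dict


def visual_gate(mismatch_ratios: Dict[str, float], max_ratio: float = 0.001) -> int:
    """Return 0 when every screenshot is within the threshold, otherwise 1."""
    failures = {
        name: ratio for name, ratio in mismatch_ratios.items() if ratio > max_ratio
    }
    for name, ratio in sorted(failures.items()):
        print(f"FAIL {name}: {ratio:.2%} of pixels changed")
    return 1 if failures else 0


# Hypothetical results: fraction of changed pixels per screenshot
ratios = {"home": 0.0, "checkout": 0.0123}
exit_code = visual_gate(ratios)
print("exit code:", exit_code)

# In a CI job, the script would typically end with sys.exit(exit_code),
# since most CI systems mark a step as failed on a non-zero exit code.
```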

Reporting and Maintenance

Once you've integrated Python visual regression testing into your development pipeline, an essential aspect comes into play: how do you communicate results effectively and maintain the testing suite as the software evolves? Let's explore the intricacies of reporting and the challenges of maintenance.

Generating Reports

Effective communication of test results is vital for swift action and decision-making. That's where reports transform raw test data into insightful visualizations and summaries.

Importance of Actionable Reports

- Clarity: A well-structured report outlines precisely where discrepancies occur.

- Efficiency: Quick insights lead to faster fixes. With clear reports, developers don't waste time interpreting results.

- Stakeholder Communication: Non-technical stakeholders can grasp the quality and state of the software through comprehensive reports, bridging the gap between technical and non-technical members.

Tools and Libraries for Report Generation in Python

- Allure: A flexible, lightweight multi-language test report tool that not only shows a very concise representation of what has been tested but also provides additional information on failed tests, making the analysis process more efficient.

- pytest-html: A plugin for pytest that generates a static HTML report of test results, including visual regression tests.
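To illustrate what such a report boils down to, here is a small standard-library sketch that renders comparison results as an HTML table. It is not how Allure or pytest-html work internally; the `build_report` function and the sample data are hypothetical.

```python
from html import escape
from typing import List, Tuple


def build_report(results: List[Tuple[str, int]]) -> str:
    """Render (screenshot name, mismatched pixel count) pairs as an HTML table."""
    rows = []
    for name, mismatched in results:
        status = "PASS" if mismatched == 0 else "FAIL"
        rows.append(
            f"<tr><td>{escape(name)}</td><td>{mismatched}</td><td>{status}</td></tr>"
        )
    header = "<tr><th>Screenshot</th><th>Mismatched pixels</th><th>Status</th></tr>"
    return "<table>\n" + header + "\n" + "\n".join(rows) + "\n</table>"


# Hypothetical results from a visual test run
report = build_report([("home", 0), ("checkout <v2>", 412)])
print(report)
```

Escaping the screenshot names keeps the markup valid even when a name contains characters such as `<` or `&`.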

Maintenance Challenges

With the evolution of software, the Python visual regression testing suite will also need continuous updating. Maintenance ensures the relevance and effectiveness of tests.

- Baseline Image Updates: Over time, intentional changes to the UI will necessitate updating the baseline images used for comparison in visual regression tests. Automation can be set up to prompt testers or developers to approve intentional changes, updating the baseline images accordingly.

- Handling False Positives: False positives, where the test inaccurately flags a discrepancy, can be a frequent nuisance. Factors like dynamic content, minor rendering differences across browsers, or anti-aliasing can trigger them. Regularly reviewing false positives and refining test strategies or thresholds can mitigate their occurrence.

- Managing a Growing Test Suite: As features are added, the number of visual tests might increase, leading to longer test execution times. Periodically auditing the test suite to remove redundant tests, optimizing test execution, or parallelizing tests can help manage the growth effectively.
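One common way to reduce false positives from dynamic content is to exclude known volatile regions from the comparison. The sketch below assumes grayscale images stored as nested lists and rectangular ignore regions; the `count_changes` function and the `Box` layout are hypothetical, chosen only to show the idea.

```python
from typing import Iterable, List, Tuple

# left, top, right, bottom (right and bottom are exclusive)
Box = Tuple[int, int, int, int]


def count_changes(
    baseline: List[List[int]],
    test: List[List[int]],
    ignore: Iterable[Box] = (),
) -> int:
    """Count differing pixels, skipping any that fall inside an ignored box."""
    boxes = list(ignore)
    changed = 0
    for y, (row_a, row_b) in enumerate(zip(baseline, test)):
        for x, (a, b) in enumerate(zip(row_a, row_b)):
            if a == b:
                continue
            if any(l <= x < r and t <= y < btm for l, t, r, btm in boxes):
                continue  # change lies in a region known to be dynamic
            changed += 1
    return changed


baseline = [[0] * 4 for _ in range(3)]
test = [row[:] for row in baseline]
test[0][3] = 9  # e.g. a timestamp in the top-right corner
test[2][1] = 9  # a genuine regression

raw = count_changes(baseline, test)
masked = count_changes(baseline, test, ignore=[(3, 0, 4, 1)])
print(raw, masked)
```

Masking the timestamp region leaves only the genuine regression to review.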

While Python visual regression testing offers a robust mechanism to maintain UI consistency, it comes with challenges. Effective reporting illuminates the path to action, and vigilant maintenance ensures the longevity and relevance of the testing suite. Properly managed, these tests are invaluable in the Software Development Life Cycle.

Demonstration: Python Visual Regression Testing

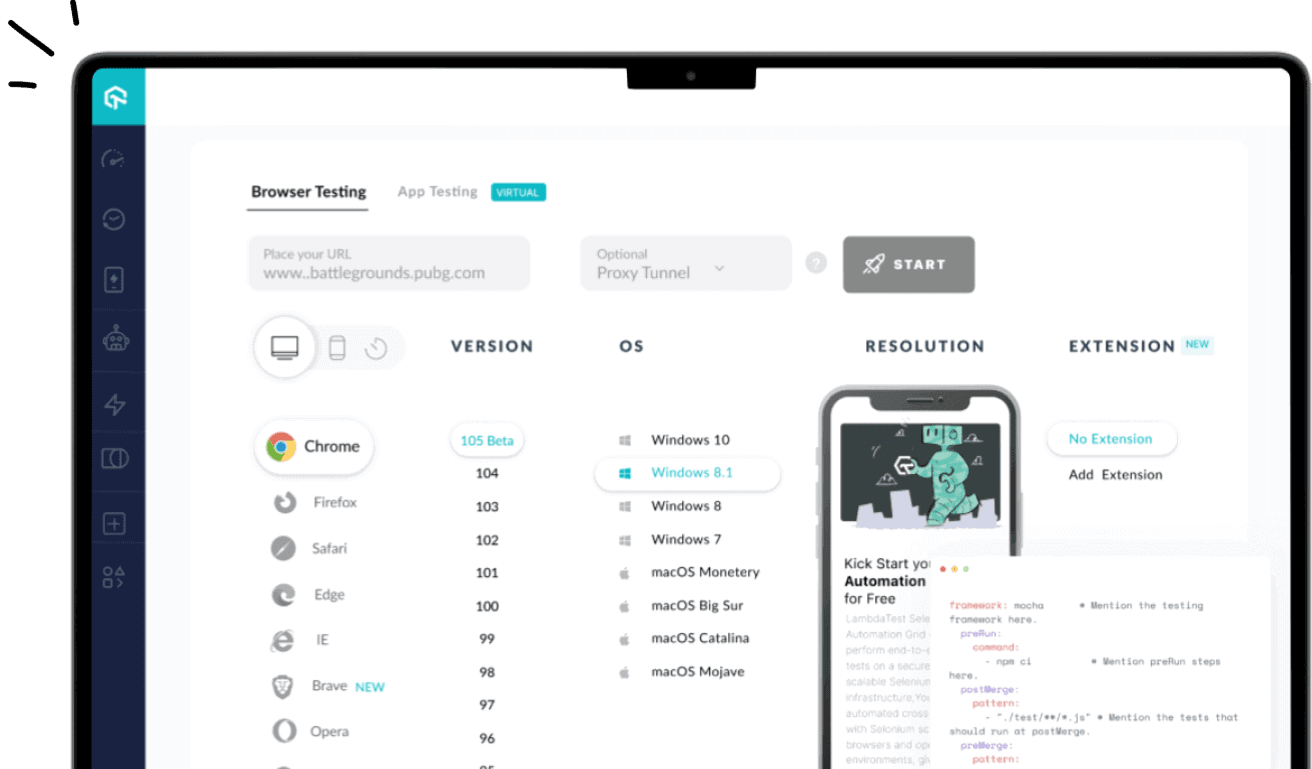

In this section, we will apply some of the knowledge of this tutorial to do Python visual regression testing using cloud platforms like LambdaTest.

LambdaTest is an AI-powered test orchestration and execution platform to execute manual and automation testing at scale. The platform allows you to perform Python visual regression testing using its AI-infused Smart UI platform that brings the power of cloud and AI to your visual regression testing with Python.


To perform Selenium Python testing on the LambdaTest cloud grid, you should use the capabilities to configure the environment where the test will run. In this tutorial on Python visual regression testing, we will run the tests in Windows 11 and Chrome.

Test Scenarios

We will execute the below test scenarios:

Test Scenario 1 - Configuring a test to have an image as a baseline

Test Scenario 2 - Using ignore P2P False Positives

Test Scenario 3 - Using ignore colored areas

Test Scenario 4 - Using bounding boxes

LambdaTest offers a lot of capabilities for Python visual regression testing.

In this tutorial on Python visual regression testing, we will demonstrate the two scenarios mentioned above: Ignore P2P False Positives and Ignore Colored Areas. To have a complete overview of all LambdaTest Capabilities for visual testing, you can access the Advanced Test Settings for SmartUI - Pixel to Pixel Comparison page.

Setting up the Environment

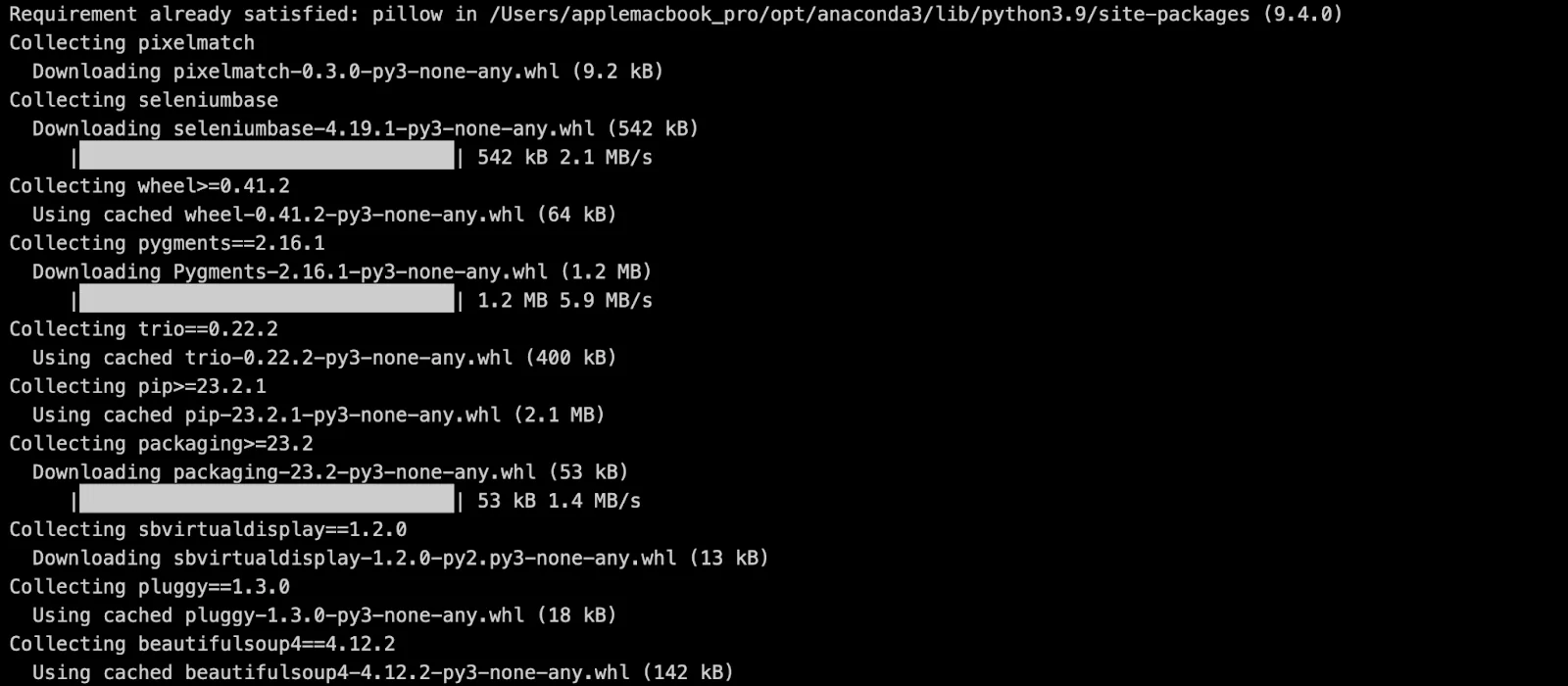

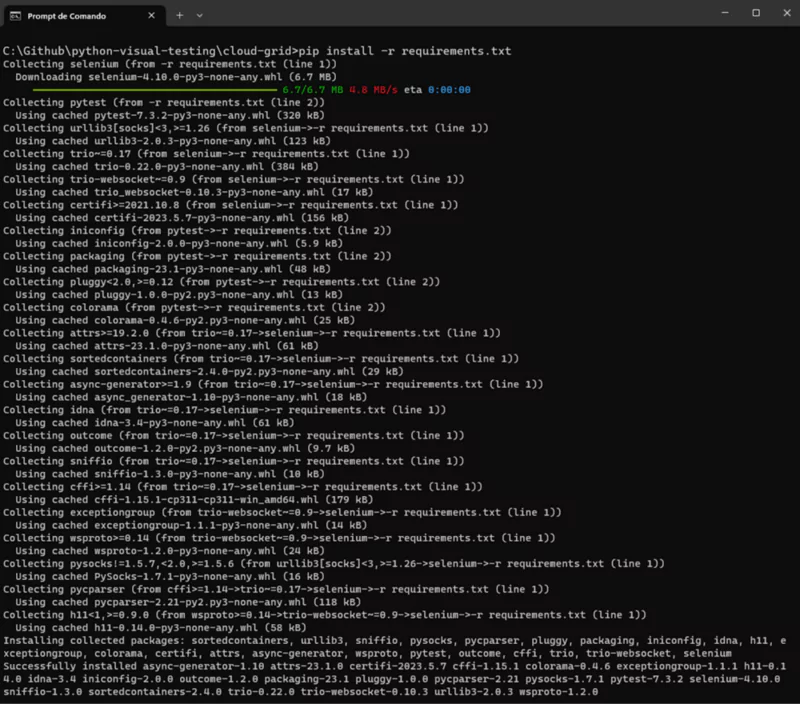

Step 1: Install Selenium, pytest, and other necessary libraries

Once Python is installed, use the Python package manager, pip, to install Selenium and pytest by running the following command:

```bash
pip install -r requirements.txt
```

The requirements.txt file contains the dependencies that we want to install.

After running, you can see the below output:

Step 2: Download and Install Visual Studio Code or your preferred IDE

Step 3: Configure pytest in Visual Studio Code

To finish the configuration, we need to tell Visual Studio Code that pytest will be our test runner. You can do this by following the instructions below:

- Create a folder for your project (in our example, python-screenshots).

- Open the project folder in Visual Studio Code.

- Open the command palette (menu View > Command Palette), and type “Configure Tests”

- Select pytest as the test framework.

- Select the root directory option.

You must also prepare the LambdaTest capabilities code to be inserted in our test script.

You can generate the capabilities code from the LambdaTest Capabilities Generator.

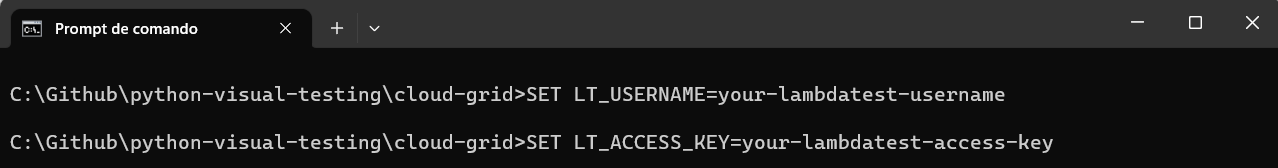

Then, you should get the “Username” and “Access Token” from your account in your LambdaTest Profile Section and set it as an environment variable.
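A small helper can make missing credentials fail early with a readable message. This is a sketch under the assumption that the variables are named LT_USERNAME and LT_ACCESS_KEY, as read by the test script in this tutorial; the `load_credentials` function itself is hypothetical, and the demo passes a plain dictionary instead of the real environment.

```python
import os
from typing import Mapping, Tuple


def load_credentials(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Read the LambdaTest credentials, failing early if either is missing."""
    username = env.get("LT_USERNAME")
    access_key = env.get("LT_ACCESS_KEY")
    missing = [
        name
        for name, value in (("LT_USERNAME", username), ("LT_ACCESS_KEY", access_key))
        if not value
    ]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return username, access_key


# Demo with placeholder values instead of the real environment
user, key = load_credentials({"LT_USERNAME": "demo-user", "LT_ACCESS_KEY": "demo-key"})
print(user)
```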

Scenario 1: Visual Testing Baseline Implementation

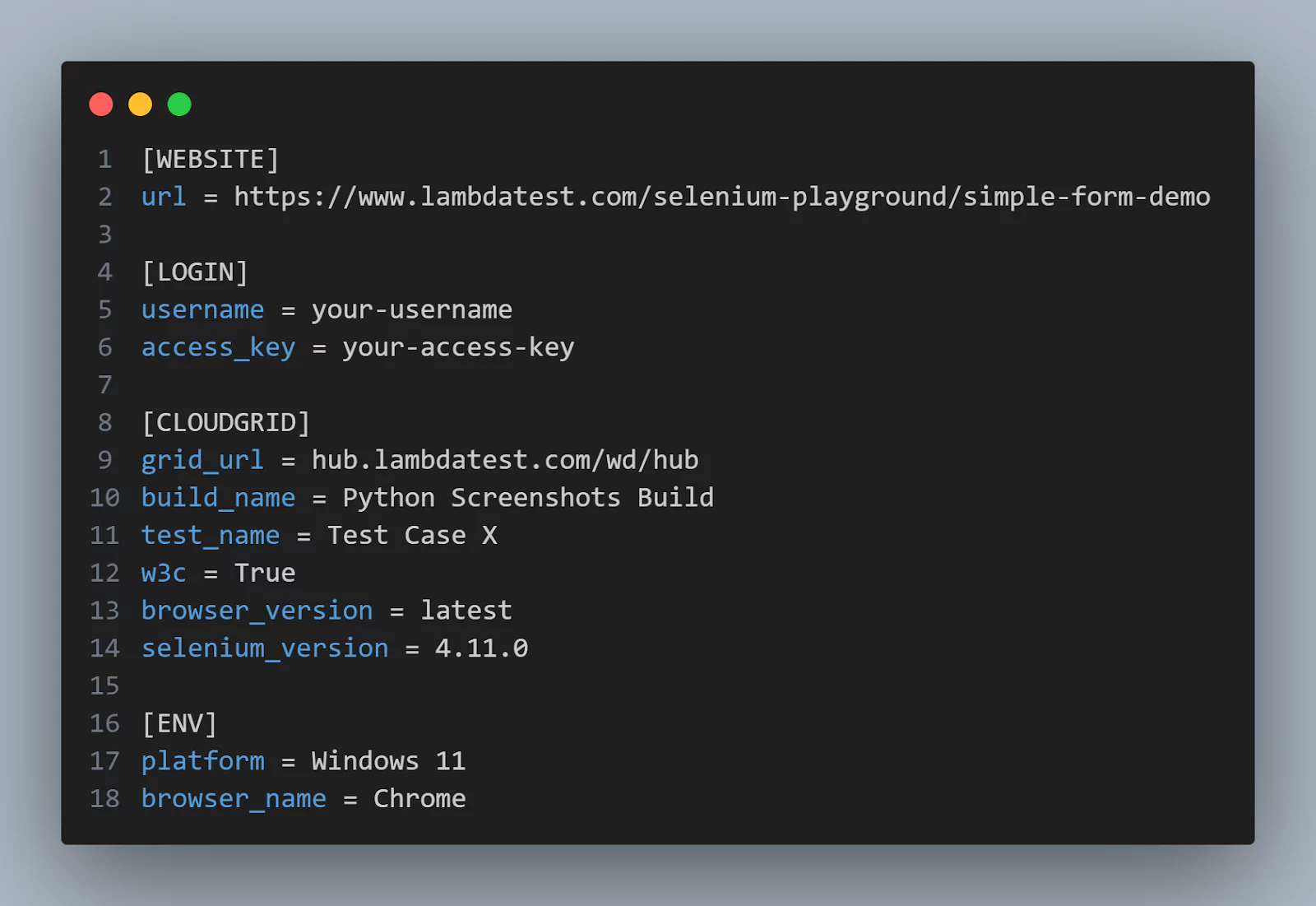

File Name - config.ini

```ini
[WEBSITE]
url = https://www.lambdatest.com/selenium-playground/simple-form-demo

[LOGIN]
username = your-username
access_key = your-access-key

[CLOUDGRID]
grid_url = hub.lambdatest.com/wd/hub
build_name = Python Screenshots Build
test_name = Test Case X
w3c = True
browser_version = latest
selenium_version = 4.13.0

[ENV]
platform = Windows 11
browser_name = Chrome
```

File Name - test_lambdatest_baseline.py

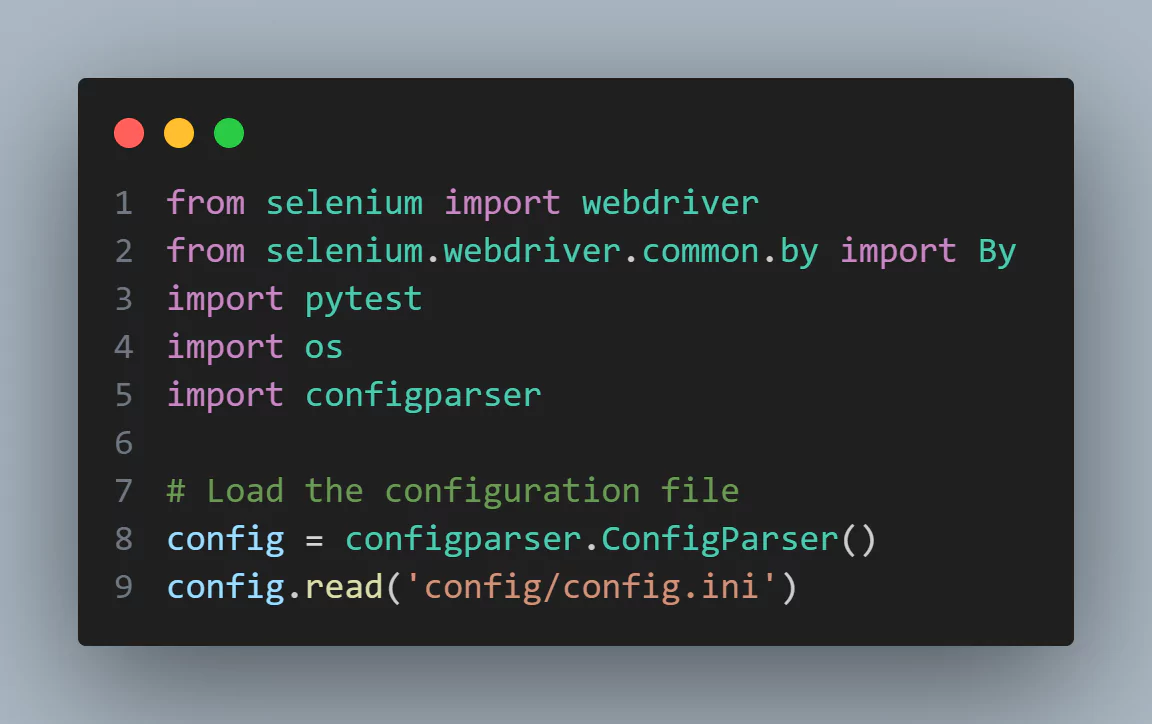

from selenium import webdriver

from selenium.webdriver.common.by import By

import pytest

import os

import configparser

# Load the configuration file

config = configparser.ConfigParser()

config.read('config/config.ini')

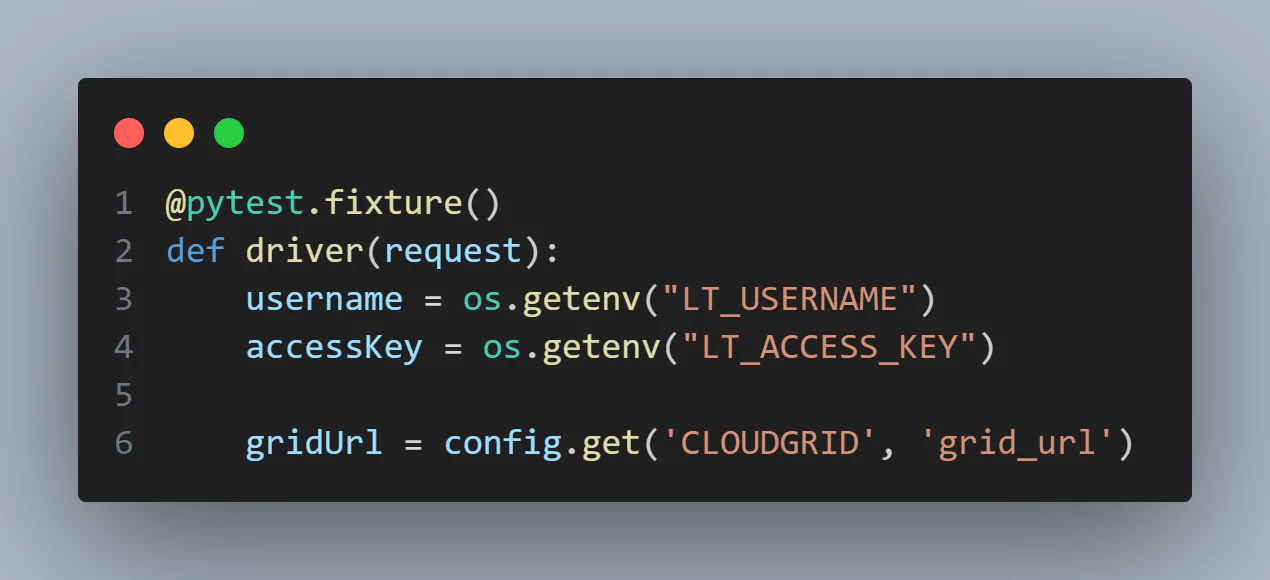

@pytest.fixture()

def driver(request):

username = os.getenv("LT_USERNAME")

accessKey = os.getenv("LT_ACCESS_KEY")

gridUrl = config.get('CLOUDGRID', 'grid_url')

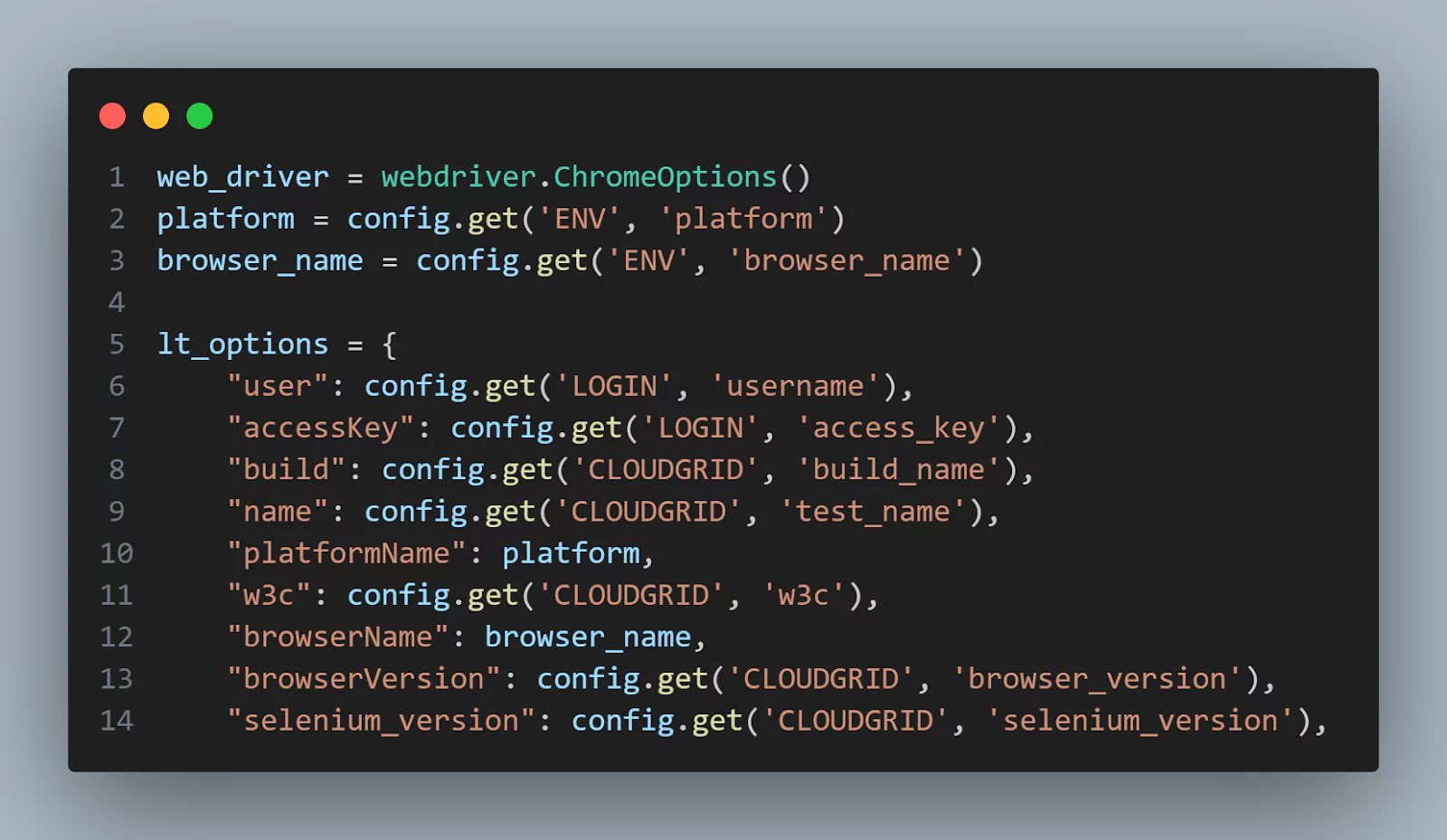

web_driver = webdriver.ChromeOptions()

platform = config.get('ENV', 'platform')

browser_name = config.get('ENV', 'browser_name')

lt_options = {

"user": config.get('LOGIN', 'username'),

"accessKey": config.get('LOGIN', 'access_key'),

"build": config.get('CLOUDGRID', 'build_name'),

"name": config.get('CLOUDGRID', 'test_name'),

"platformName": platform,

"w3c": config.get('CLOUDGRID', 'w3c'),

"browserName": browser_name,

"browserVersion": config.get('CLOUDGRID', 'browser_version'),

"selenium_version": config.get('CLOUDGRID', 'selenium_version'),

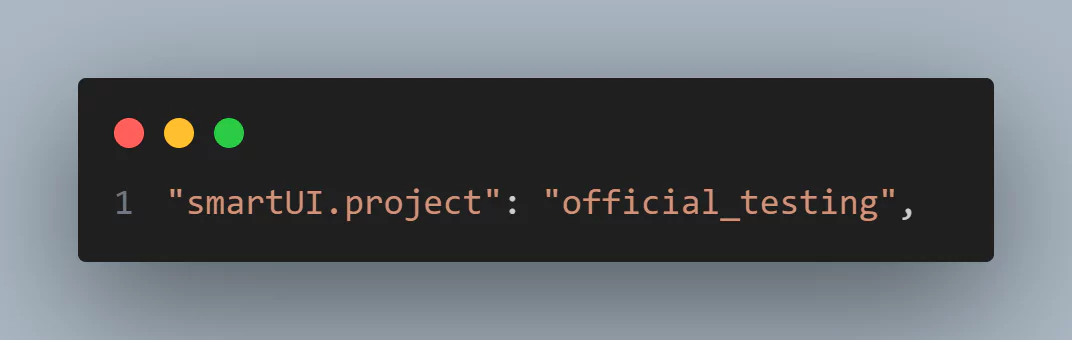

"smartUI.project": "official_testing",

"smartUI.build": "build_1",

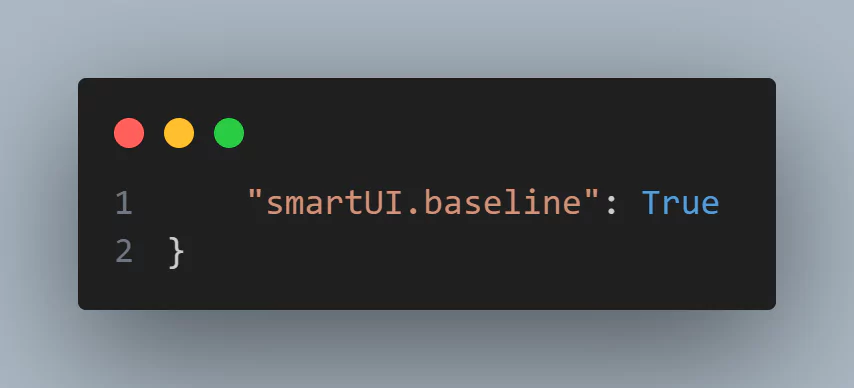

"smartUI.baseline": True

}

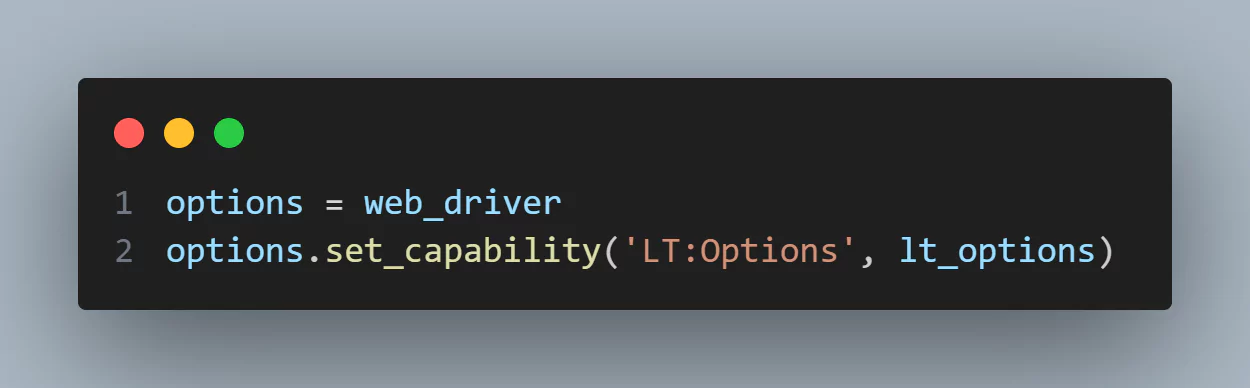

options = web_driver

options.set_capability('LT:Options', lt_options)

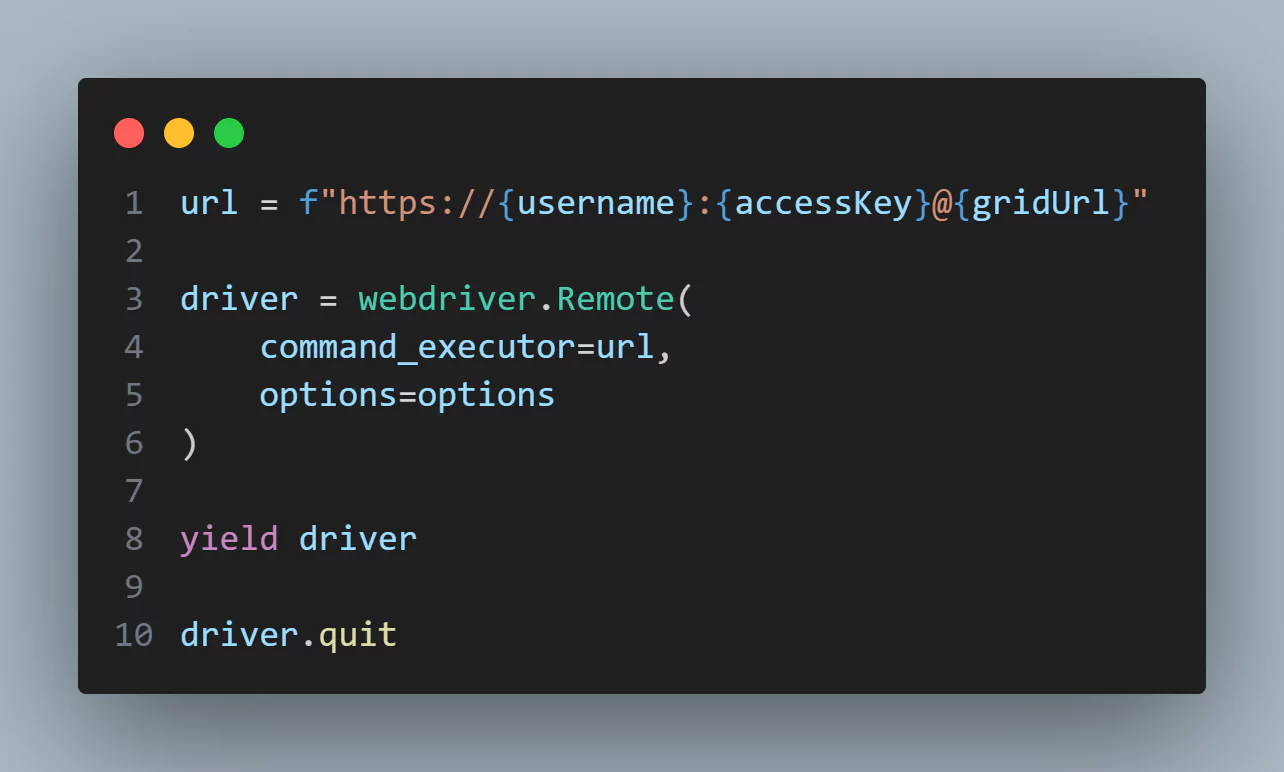

url = f"https://{username}:{accessKey}@{gridUrl}"

driver = webdriver.Remote(

command_executor=url,

options=options

)

yield driver

driver.quit

def test_visual_using_lambdatest(driver):

driver.get(config.get('WEBSITE', 'url'))

driver.execute_script("smartui.takeScreenshot=screenshots/screenshot_initial.jpg")

# Find an input element by its ID and enter text

input_element = driver.find_element(By.ID, "user-message")

input_element.send_keys("This is a visual testing text!")

# # Find an element by its ID and click on it

element = driver.find_element(By.ID, "showInput")

element.click()

# # Find an element by its ID and extract its text

element = driver.find_element(By.ID, "message")

driver.execute_script("smartui.takeScreenshot=screenshots/screenshot_final.jpg")

assert element.text == "This is a visual testing text!"

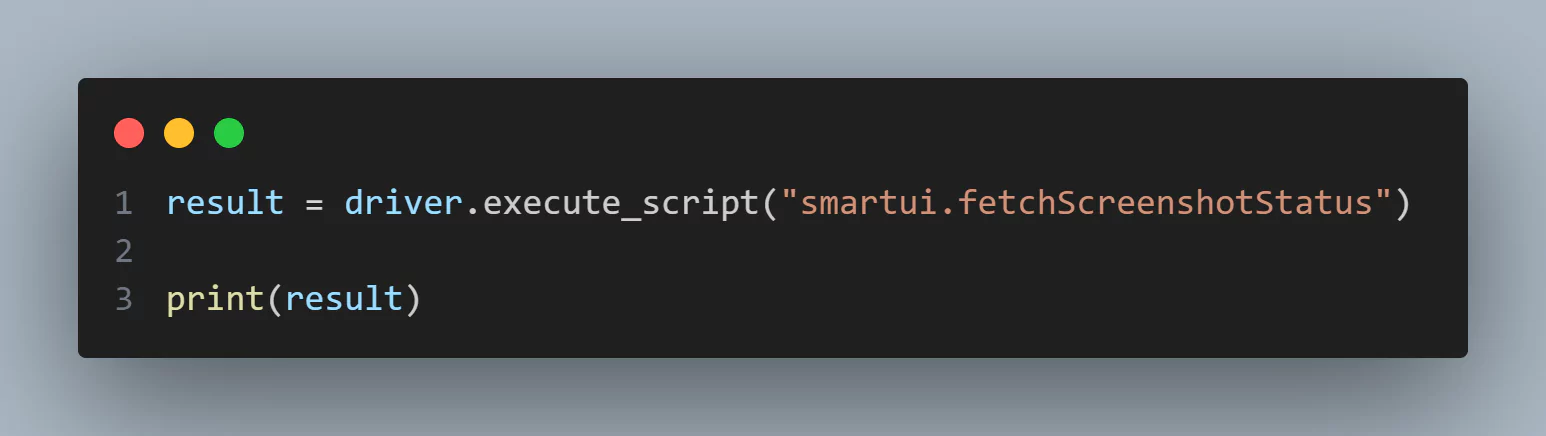

result = driver.execute_script("smartui.fetchScreenshotStatus")

print(result)

Code Walkthrough

In this described scenario, a two-step Python Selenium automation testing script is illustrated. The primary objective of the code is to perform Python visual regression testing using LambdaTest.

The configuration details for the test, such as website URL, login credentials, grid details, and environment settings, are stored in a file named config.ini. This file contains various sections, including WEBSITE, LOGIN, CLOUDGRID, and ENV, which hold the website’s URL, LambdaTest login details, grid configuration, and the environment specifications, such as the platform and browser name.
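The snippet below sketches how `configparser` reads such a file. To stay self-contained it parses an abbreviated copy of the settings from a string with `read_string()` rather than from disk. Note that `get()` always returns text, so a flag such as `w3c` arrives as the string "True" unless it is read with `getboolean()`.

```python
import configparser

# Abbreviated copy of the sections described above, inlined for the demo
SAMPLE = """
[WEBSITE]
url = https://www.lambdatest.com/selenium-playground/simple-form-demo

[CLOUDGRID]
grid_url = hub.lambdatest.com/wd/hub
w3c = True

[ENV]
platform = Windows 11
browser_name = Chrome
"""

config = configparser.ConfigParser()
config.read_string(SAMPLE)

platform = config.get("ENV", "platform")
w3c_text = config.get("CLOUDGRID", "w3c")         # a str
w3c_flag = config.getboolean("CLOUDGRID", "w3c")  # a bool

print(platform, repr(w3c_text), w3c_flag)
```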

The script begins by importing the necessary modules and loading configuration settings from the config.ini file.

The code then defines a pytest fixture called driver, which sets up a Selenium WebDriver for automated browser testing. It first fetches the username and access key from environment variables, then retrieves the URL of the cloud grid where testing will occur (gridUrl) from the configuration file.

A ChromeOptions object (web_driver) is then created. A dictionary, lt_options, is populated with the test settings, including login credentials, build and test names, platform information, and browser details; most of them come from the configuration file.

The included capabilities, specifically "smartUI.project": "official_testing", "smartUI.build": "build_1", and "smartUI.baseline": True, provide additional configurations for the LambdaTest automation testing.

The smartUI.project capability is set to "official_testing", which likely denotes the name or identifier of the testing project currently in progress. This aids in organizing and categorizing the tests within the LambdaTest environment.

The smartUI.build capability is assigned the value "build_1", indicating the specific build version or iteration under test, thus assisting in version tracking and ensuring that the test results correspond to the correct software build.

Lastly, the smartUI.baseline capability is a Boolean set to True, signifying that this test run establishes a baseline. In visual testing, a baseline is a set of reference images or data used to compare with the results of subsequent test runs to identify any discrepancies or visual differences. By setting this capability to True, the script indicates that the screenshots taken during this test should be treated as the reference for future comparisons.

Finally, these options are attached to the ChromeOptions object under the LT:Options key using the set_capability method. This ensures that the WebDriver is fully configured according to the parameters defined in the config file and the environment variables.

With the WebDriver set up, the fixture connects to the LambdaTest Selenium Grid, which allows running tests on cloud-hosted browsers. It then yields the driver, making it available to the test function, and closes it once the test is done.
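
The setup and teardown flow of a yield-style fixture can be sketched without a browser. In this minimal sketch, FakeDriver is a hypothetical stand-in for the remote WebDriver, and the generator is driven by hand in roughly the way pytest drives a yield fixture.

```python
class FakeDriver:
    """Hypothetical stand-in for a Selenium WebDriver, for illustration only."""

    def __init__(self):
        self.closed = False

    def quit(self):
        self.closed = True


def driver_fixture():
    # Setup: everything before `yield` runs before the test body.
    driver = FakeDriver()
    yield driver
    # Teardown: runs after the test finishes. The parentheses matter:
    # a bare `driver.quit` only references the method and never calls it.
    driver.quit()


# Drive the generator by hand, roughly as pytest does for a yield fixture.
gen = driver_fixture()
driver = next(gen)          # setup
assert not driver.closed    # the test body would run here
next(gen, None)             # teardown
print(driver.closed)
```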

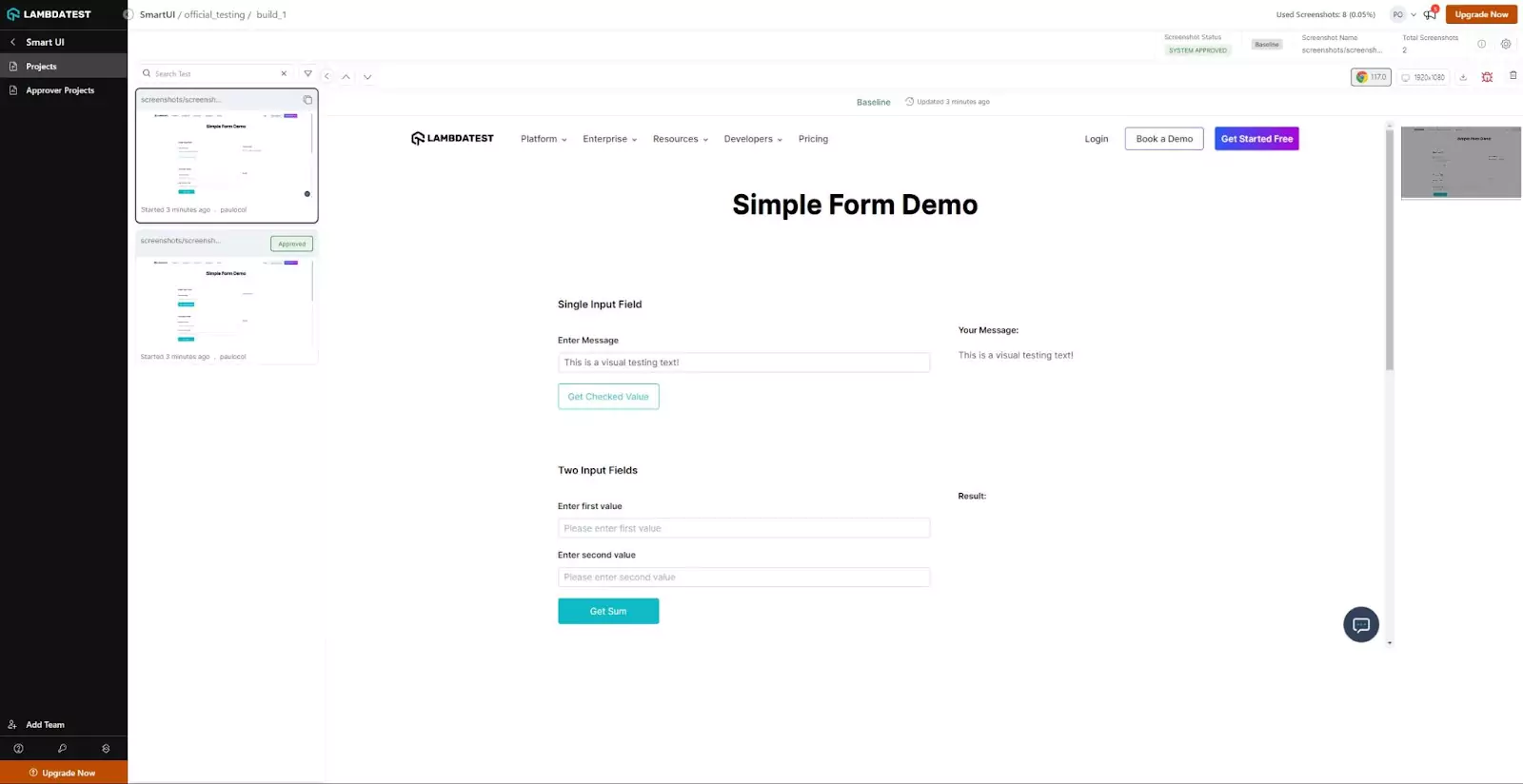

The actual test, test_visual_using_lambdatest, begins by navigating the driver to the specified website URL. It then executes a JavaScript command to take an initial screenshot.

Following this, the script interacts with the web elements on the page: it finds an input field by its ID, enters a text message, and clicks a button to display the message. After these interactions, a final screenshot is taken, and the script asserts that the displayed message is correct.

Finally, the script fetches and prints the screenshot status using another JavaScript command. This way, the visual aspects of the webpage are tested before and after the interaction, ensuring that visual elements are rendered as expected.

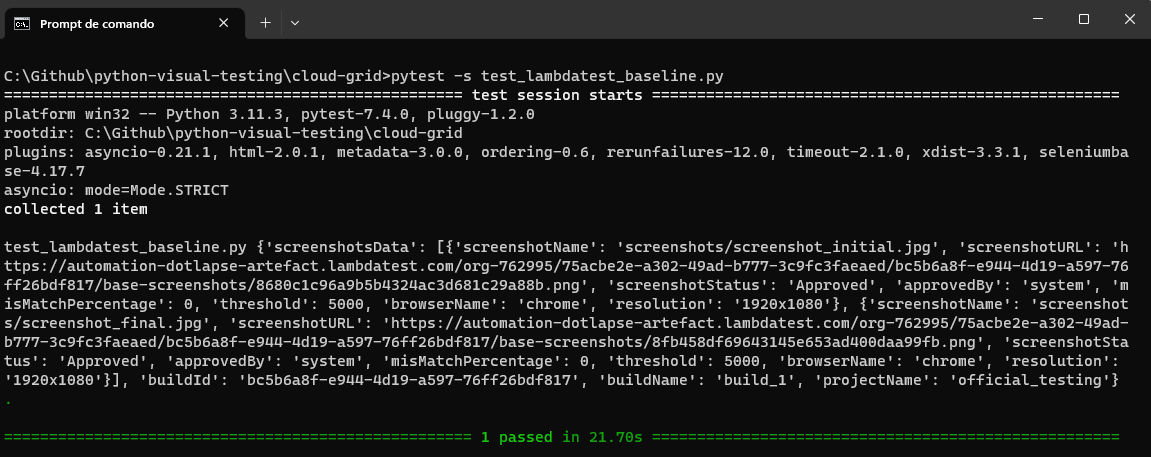

You can run the above code by just calling the below command:

pytest -s test_lambdatest_baseline.py

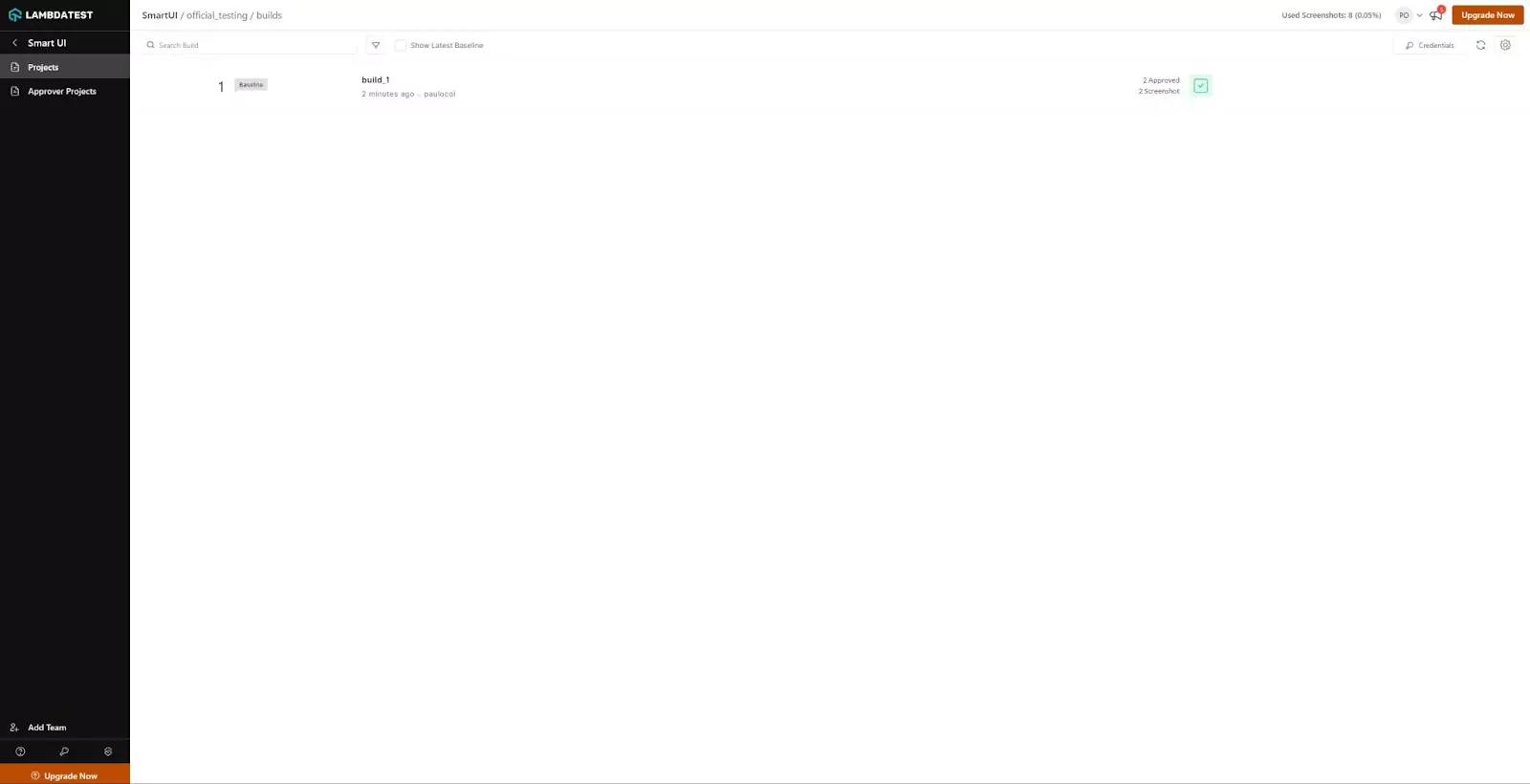

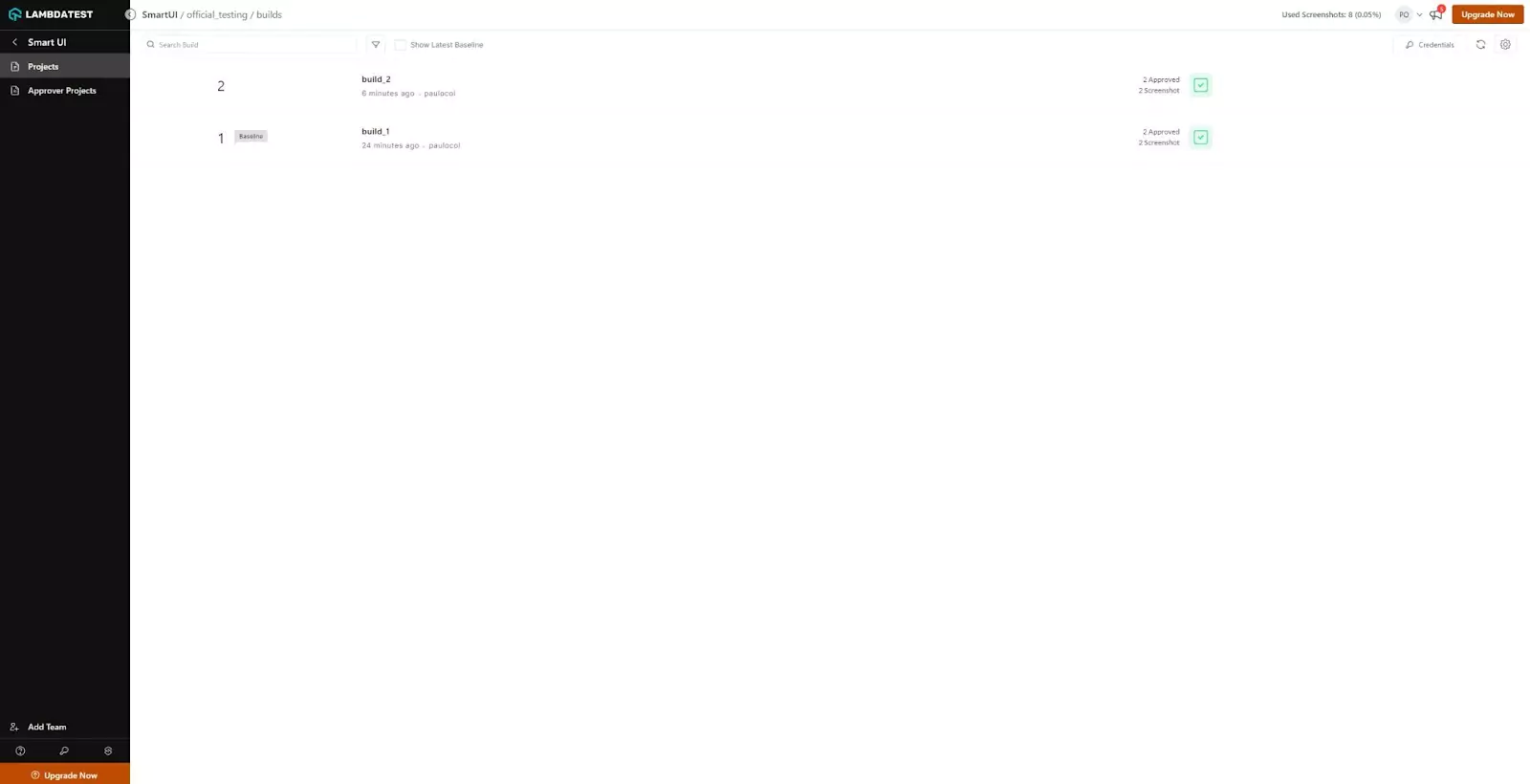

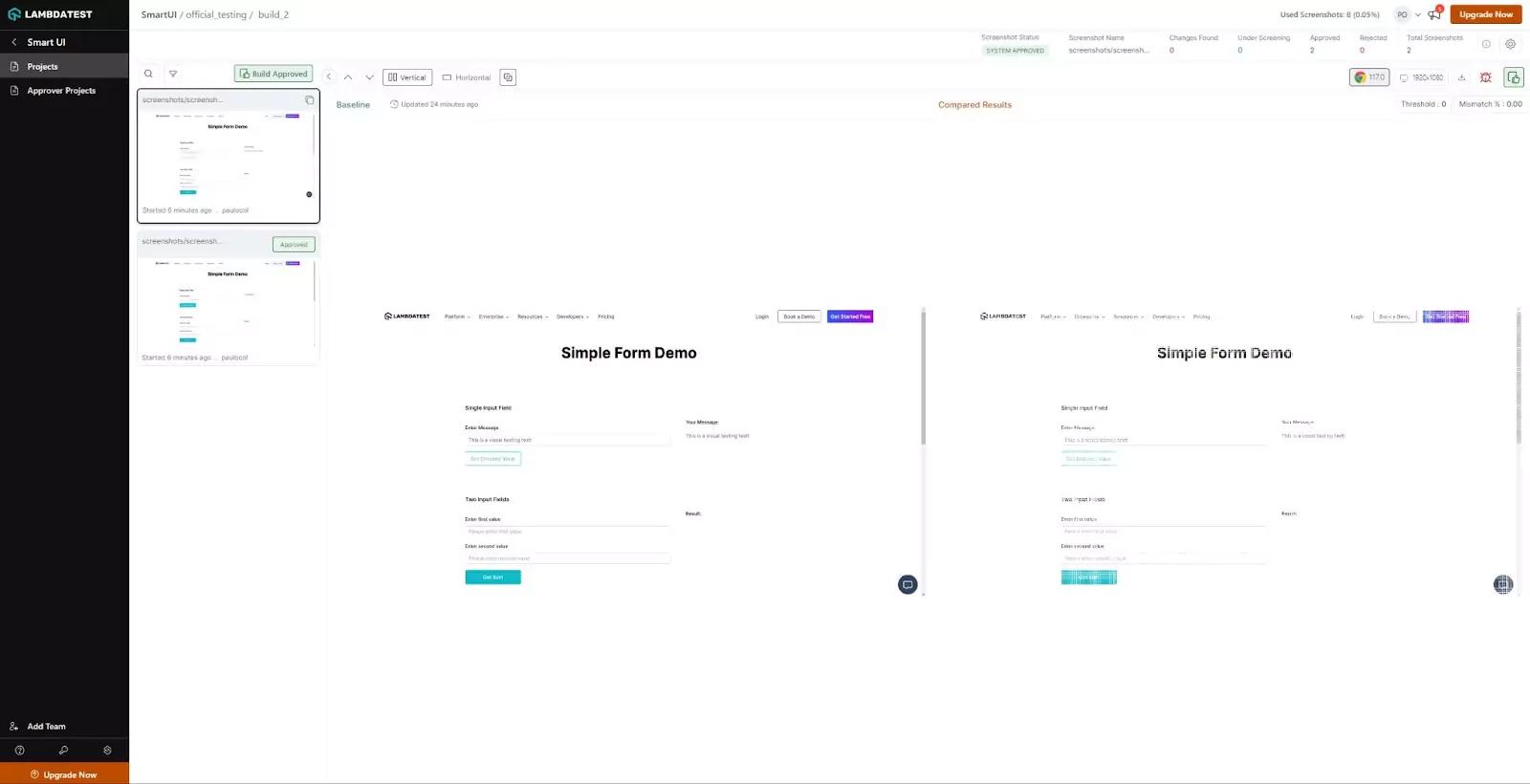

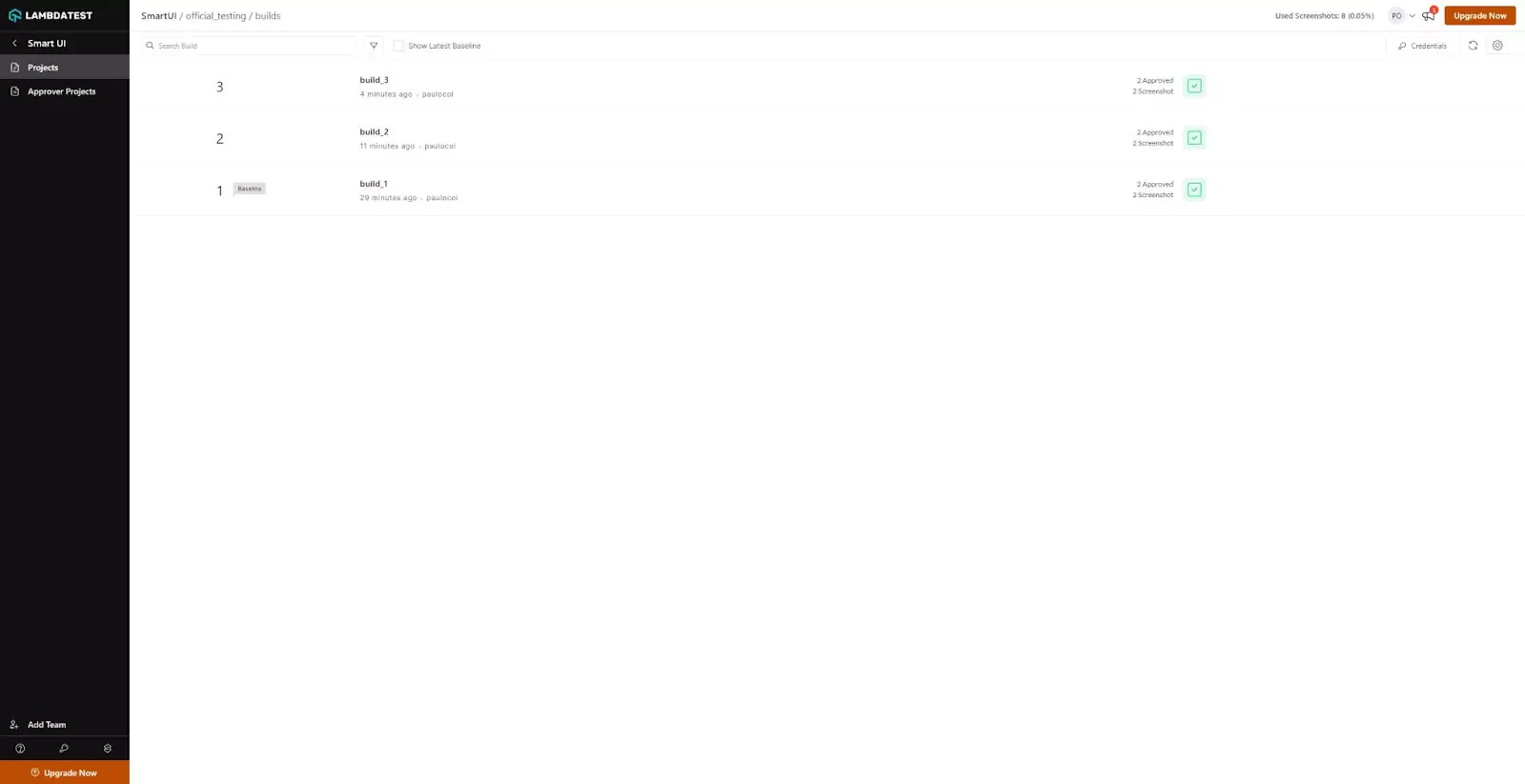

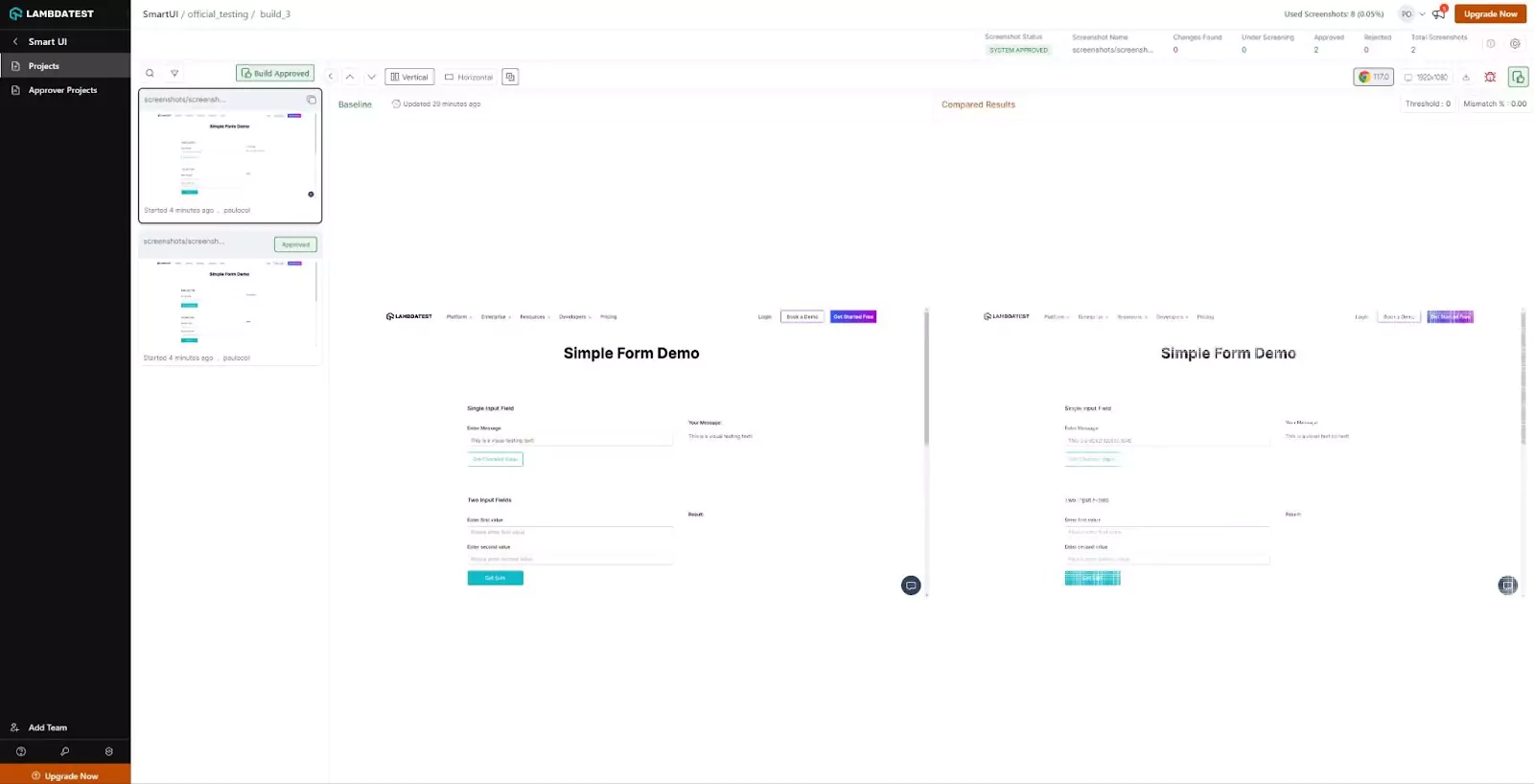

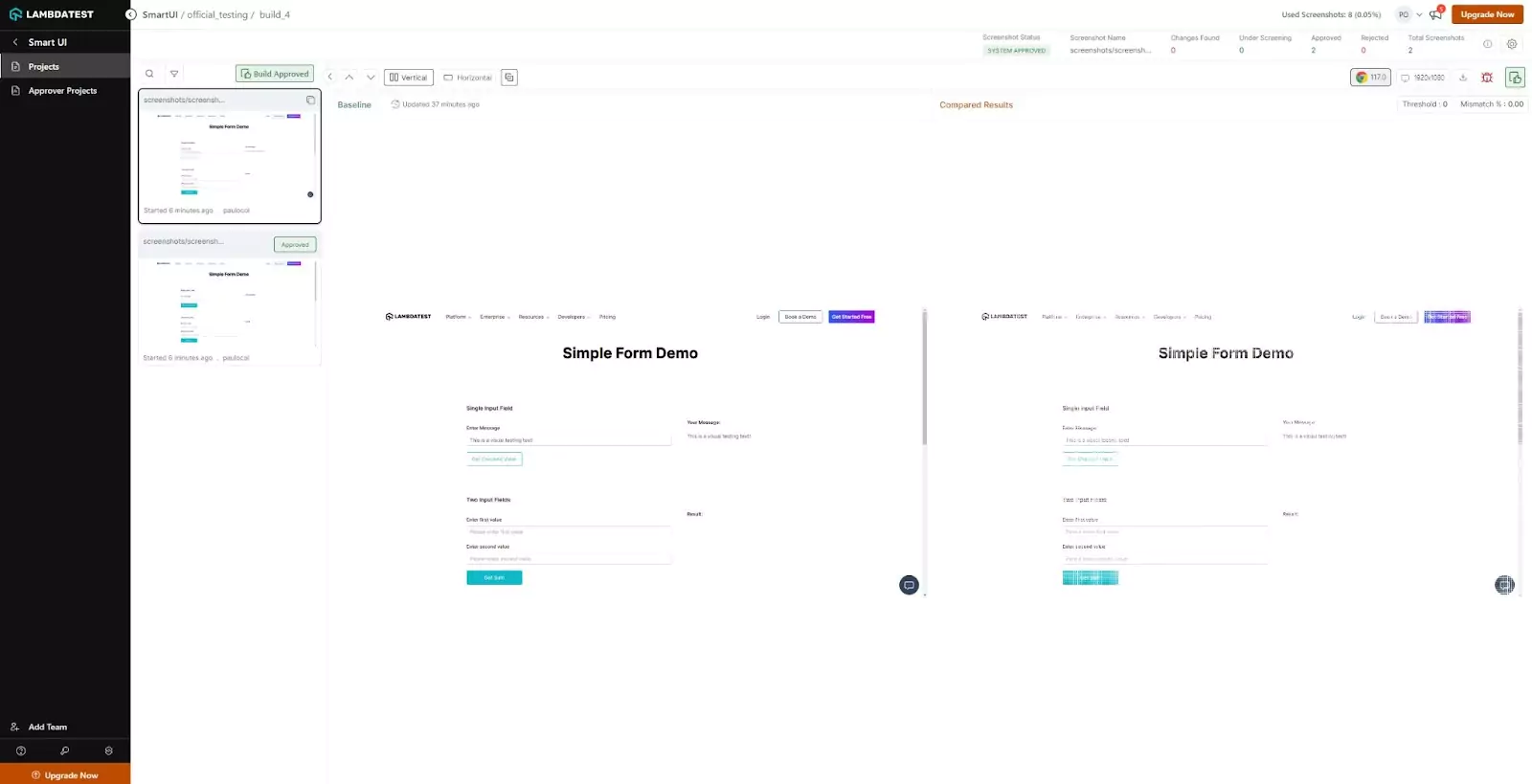

In the LambdaTest Dashboard, you can see the test status and the screenshots taken.

Scenario 2: Visual Testing Ignore P2P Implementation

File Name - test_lambdatest_ignore_p2p.py

from selenium import webdriver

from selenium.webdriver.common.by import By

import pytest

import os

import configparser

# Load the configuration file

config = configparser.ConfigParser()

config.read('config/config.ini')

@pytest.fixture()

def driver(request):

username = os.getenv("LT_USERNAME")

accessKey = os.getenv("LT_ACCESS_KEY")

gridUrl = config.get('CLOUDGRID', 'grid_url')

web_driver = webdriver.ChromeOptions()

platform = config.get('ENV', 'platform')

browser_name = config.get('ENV', 'browser_name')

lt_options = {

"user": config.get('LOGIN', 'username'),

"accessKey": config.get('LOGIN', 'access_key'),

"build": config.get('CLOUDGRID', 'build_name'),

"name": config.get('CLOUDGRID', 'test_name'),

"platformName": platform,

"w3c": config.get('CLOUDGRID', 'w3c'),

"browserName": browser_name,

"browserVersion": config.get('CLOUDGRID', 'browser_version'),

"selenium_version": config.get('CLOUDGRID', 'selenium_version'),

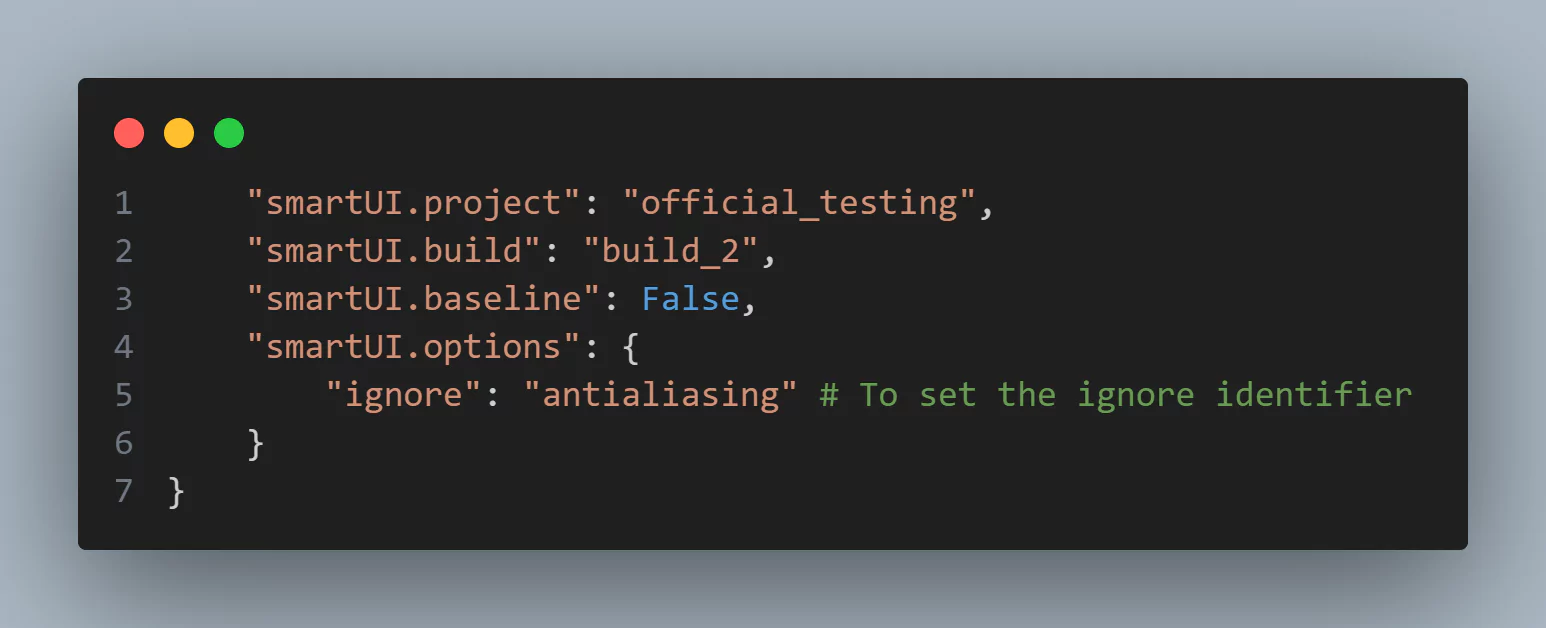

"smartUI.project": "official_testing",

"smartUI.build": "build_2",

"smartUI.baseline": False,

"smartUI.options": {

"ignore": "antialiasing" # To set the ignore identifier

}

}

options = web_driver

options.set_capability('LT:Options', lt_options)

url = f"https://{username}:{accessKey}@{gridUrl}"

driver = webdriver.Remote(

command_executor=url,

options=options

)

yield driver

driver.quit

def test_visual_using_lambdatest(driver):

driver.get(config.get('WEBSITE', 'url'))

driver.execute_script("smartui.takeScreenshot=screenshots/screenshot_initial.jpg")

# Find an input element by its ID and enter text

input_element = driver.find_element(By.ID, "user-message")

input_element.send_keys("This is a visual testing text!")

# # Find an element by its ID and click on it

element = driver.find_element(By.ID, "showInput")

element.click()

# # Find an element by its ID and extract its text

element = driver.find_element(By.ID, "message")

driver.execute_script("smartui.takeScreenshot=screenshots/screenshot_final.jpg")

assert element.text == "This is a visual testing text!"

result = driver.execute_script("smartui.fetchScreenshotStatus")

print(result)Code Walkthrough

This file is almost the same as the previous one, with three changes:

- The smartUI.build capability is now set to build_2.

- The smartUI.baseline capability is now set to False (given that in the previous scenario, we already created the baseline).

- There is one new capability, smartUI.options.ignore, which is set to “antialiasing”.

In the given LambdaTest capability "smartUI.options": {"ignore": "antialiasing"}, the Ignore P2P False Positives feature is leveraged to mitigate the risk of inaccuracies in visual comparisons between the baseline version and the current screenshot. This is essential because when screenshots are captured and compressed to common file formats like .png, .jpg, or .jpeg, there’s a significant likelihood of pixelation enhancement to improve image quality.

However, this enhancement can be misleading and may present discrepancies in visual appearances when compared with the baseline version. The selected option, "antialiasing", specifically addresses the smoothing of edges in digital images, ensuring that such enhancements do not affect the visual testing results, thus reducing the chance of false positives in the comparison output.
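
Since the baseline run and the comparison run differ only in a few smartUI entries, those entries could be produced by one small helper. The function below is a hypothetical convenience and is not part of LambdaTest's API; the key names are the ones used in the scripts in this tutorial.

```python
def smartui_capabilities(project, build, baseline, ignore=None):
    """Build the smartUI-related entries for the LT:Options dictionary.

    Hypothetical helper; key names mirror the scripts in this tutorial.
    """
    caps = {
        "smartUI.project": project,
        "smartUI.build": build,
        "smartUI.baseline": baseline,
    }
    if ignore is not None:
        # Only comparison runs that need it carry the ignore option.
        caps["smartUI.options"] = {"ignore": ignore}
    return caps


baseline_caps = smartui_capabilities("official_testing", "build_1", True)
compare_caps = smartui_capabilities(
    "official_testing", "build_2", False, ignore="antialiasing"
)
print(baseline_caps)
print(compare_caps)
```

The returned dictionary could be merged into lt_options with lt_options.update(...) before calling set_capability.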

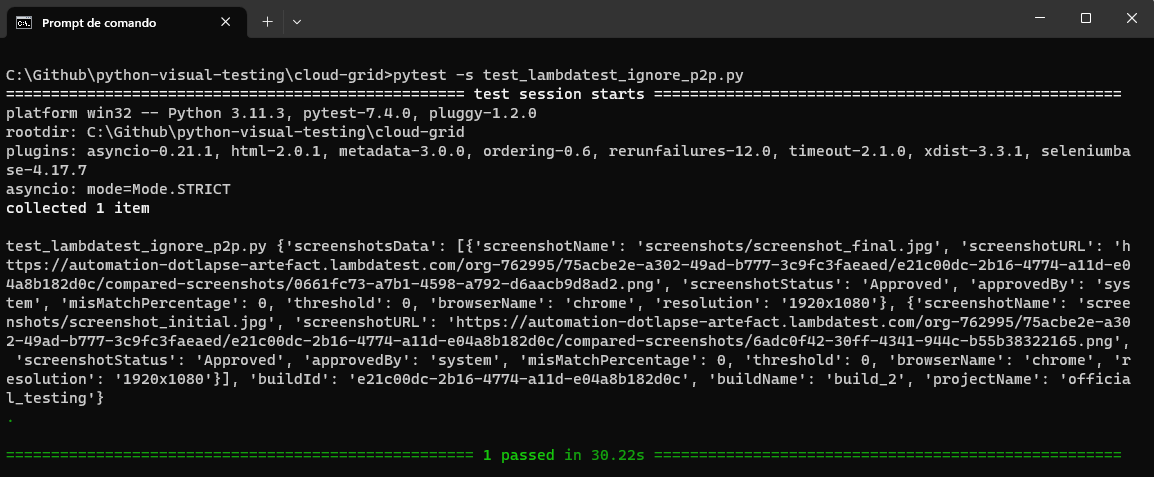

You can run the above code by just calling the below command:

pytest -s test_lambdatest_ignore_p2p.py

In the LambdaTest Dashboard, you can see the test results and the screenshots taken while you performed Python visual regression testing.

Scenario 3: Visual Testing Ignore Colored Areas Implementation

File Name - test_lambdatest_ignore_colored_areas.py

from selenium import webdriver
from selenium.webdriver.common.by import By
import pytest
import os
import configparser

# Load the configuration file
config = configparser.ConfigParser()
config.read('config/config.ini')


@pytest.fixture()
def driver(request):
    # Credentials come from environment variables; the grid URL comes from config.ini
    username = os.getenv("LT_USERNAME")
    accessKey = os.getenv("LT_ACCESS_KEY")
    gridUrl = config.get('CLOUDGRID', 'grid_url')

    web_driver = webdriver.ChromeOptions()
    platform = config.get('ENV', 'platform')
    browser_name = config.get('ENV', 'browser_name')

    # RGBA color of the areas to ignore during comparison
    color = {
        "r": 242,
        "g": 201,
        "b": 76,
        "a": 1
    }

    lt_options = {
        "user": config.get('LOGIN', 'username'),
        "accessKey": config.get('LOGIN', 'access_key'),
        "build": config.get('CLOUDGRID', 'build_name'),
        "name": config.get('CLOUDGRID', 'test_name'),
        "platformName": platform,
        "w3c": config.get('CLOUDGRID', 'w3c'),
        "browserName": browser_name,
        "browserVersion": config.get('CLOUDGRID', 'browser_version'),
        "selenium_version": config.get('CLOUDGRID', 'selenium_version'),
        "smartUI.project": "official_testing",
        "smartUI.build": "build_3",
        "smartUI.baseline": False,
        "ignoreAreasColoredWith": color  # Your ignore-color configuration
    }

    options = web_driver
    options.set_capability('LT:Options', lt_options)

    url = f"https://{username}:{accessKey}@{gridUrl}"

    driver = webdriver.Remote(
        command_executor=url,
        options=options
    )

    yield driver

    # Close the remote browser session once the test has finished
    driver.quit()


def test_visual_using_lambdatest(driver):
    driver.get(config.get('WEBSITE', 'url'))
    driver.execute_script("smartui.takeScreenshot=screenshots/screenshot_initial.jpg")

    # Find an input element by its ID and enter text
    input_element = driver.find_element(By.ID, "user-message")
    input_element.send_keys("This is a visual testing text!")

    # Find an element by its ID and click on it
    element = driver.find_element(By.ID, "showInput")
    element.click()

    # Find an element by its ID and extract its text
    element = driver.find_element(By.ID, "message")
    driver.execute_script("smartui.takeScreenshot=screenshots/screenshot_final.jpg")

    assert element.text == "This is a visual testing text!"

    result = driver.execute_script("smartui.fetchScreenshotStatus")
    print(result)

Code Walkthrough

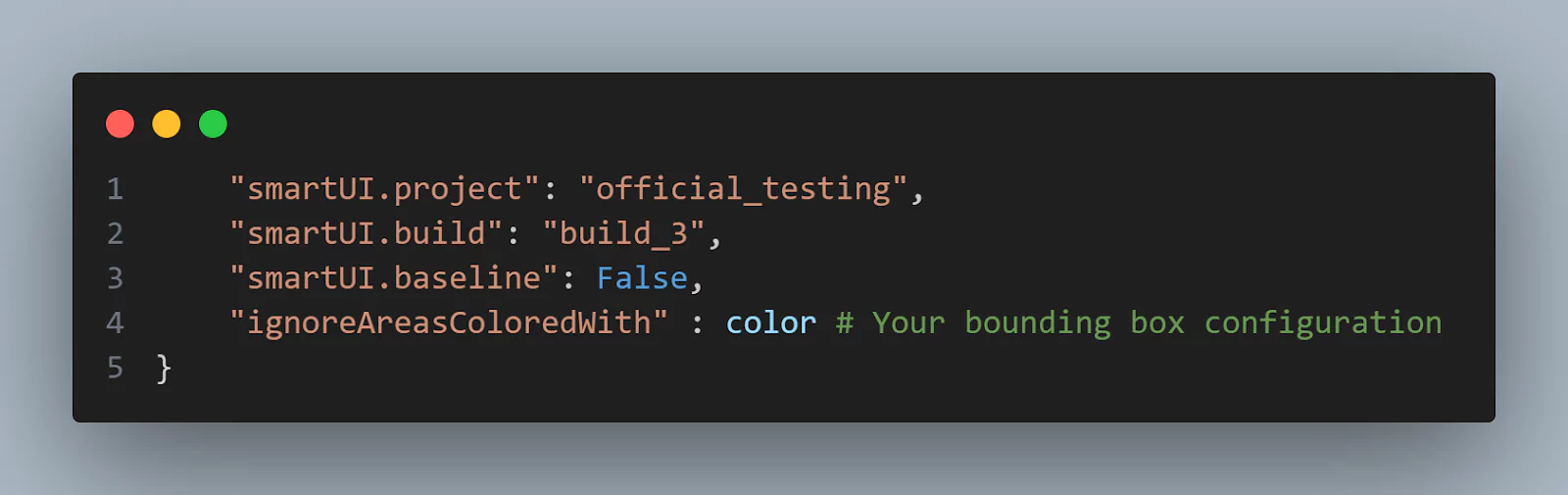

This file is almost the same as the previous one, with three changes:

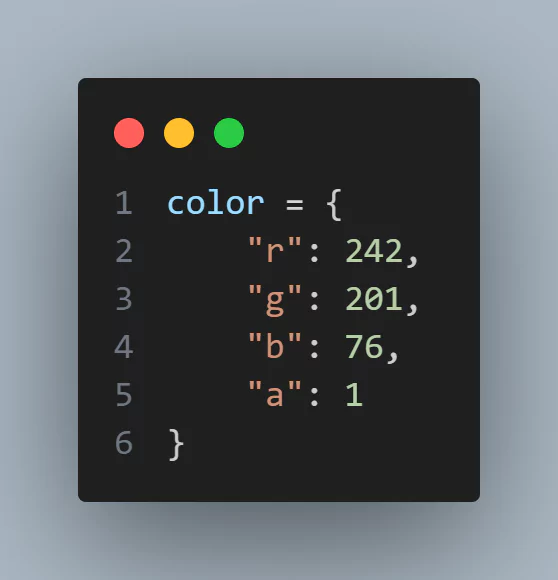

- A color dictionary is created with some RGBA values.

- The smartUI.build capability is now set to build_3.

- There is one new capability, ignoreAreasColoredWith, that is set to the color dictionary.

In the code above, the LambdaTest capability "ignoreAreasColoredWith": color is utilized, where color is a dictionary specifying the RGBA values as {"r": 242, "g": 201, "b": 76, "a": 1}.

This capability enables the Ignore Areas Colored feature, which is designed to omit pixels of a specific color in the baseline image during visual comparison. Any region in the baseline image matching the specified color will be excluded from the comparison view, allowing for more focused and relevant visual testing results.

This is particularly useful in cases where certain colored regions or elements in the image are dynamic or irrelevant to the testing scenario, thus helping reduce false positives and enhancing the accuracy of the visual comparison.
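
To make the idea concrete, here is a small local sketch of a comparison that skips baseline pixels of a given color. It only illustrates the concept on tiny hand-made pixel grids and is not LambdaTest's implementation; the function name and the image representation are assumptions.

```python
def count_differences(baseline, current, ignore_color=None):
    """Count differing pixels, skipping baseline pixels that match ignore_color.

    Images are equal-sized lists of rows; each pixel is an (r, g, b, a) tuple.
    """
    differing = 0
    for base_row, cur_row in zip(baseline, current):
        for base_px, cur_px in zip(base_row, cur_row):
            if ignore_color is not None and base_px == ignore_color:
                continue  # masked region: excluded from the comparison
            if base_px != cur_px:
                differing += 1
    return differing


MASK = (242, 201, 76, 1)      # same RGBA values as the color dictionary
WHITE = (255, 255, 255, 1)
BLACK = (0, 0, 0, 1)

baseline = [[WHITE, MASK], [WHITE, WHITE]]
current = [[WHITE, BLACK], [BLACK, WHITE]]

print(count_differences(baseline, current))                     # no mask applied
print(count_differences(baseline, current, ignore_color=MASK))  # masked pixel skipped
```

With the mask applied, the change underneath the colored region no longer counts, while the change elsewhere is still reported.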

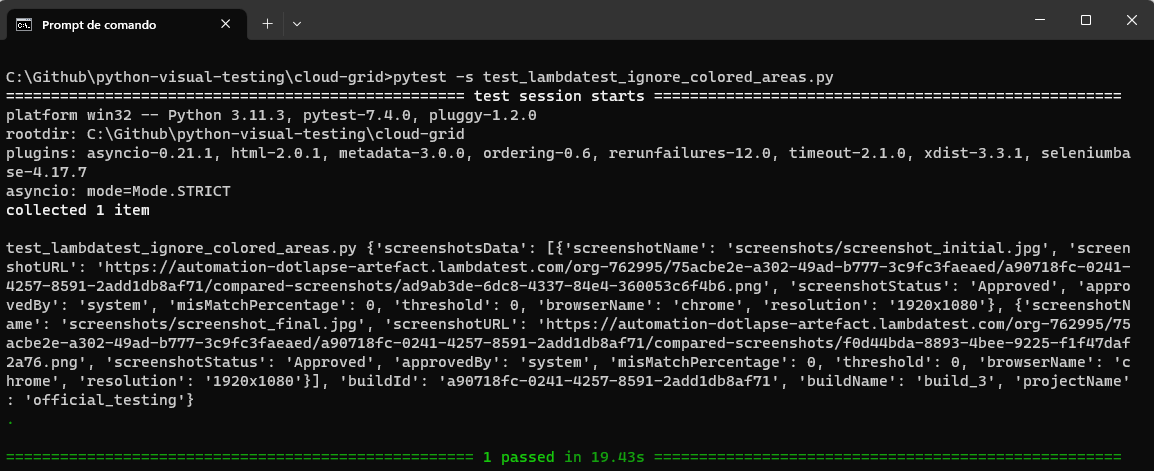

You can run the above code by just calling the below command:

pytest -s test_lambdatest_ignore_colored_areas.py

In the LambdaTest Dashboard, you can see the test status and the screenshots taken.

Scenario 4: Visual Testing Bounding Boxes Implementation

File Name - test_lambdatest_bounding_boxes.py

from selenium import webdriver

from selenium.webdriver.common.by import By

import pytest

import os

import configparser

# Load the configuration file

config = configparser.ConfigParser()

config.read('config/config.ini')

@pytest.fixture()

def driver(request):

username = os.getenv("LT_USERNAME")

accessKey = os.getenv("LT_ACCESS_KEY")

gridUrl = config.get('CLOUDGRID', 'grid_url')

web_driver = webdriver.ChromeOptions()

platform = config.get('ENV', 'platform')

browser_name = config.get('ENV', 'browser_name')

box1 = {

"left": 100,

"top": 500,

"right": 800,

"bottom": 300

}

box2 = {

"left": 800,

"top": 50,

"right": 20,

"bottom": 700

}

lt_options = {

"user": config.get('LOGIN', 'username'),

"accessKey": config.get('LOGIN', 'access_key'),

"build": config.get('CLOUDGRID', 'build_name'),

"name": config.get('CLOUDGRID', 'test_name'),

"platformName": platform,

"w3c": config.get('CLOUDGRID', 'w3c'),

"browserName": browser_name,

"browserVersion": config.get('CLOUDGRID', 'browser_version'),

"selenium_version": config.get('CLOUDGRID', 'selenium_version'),

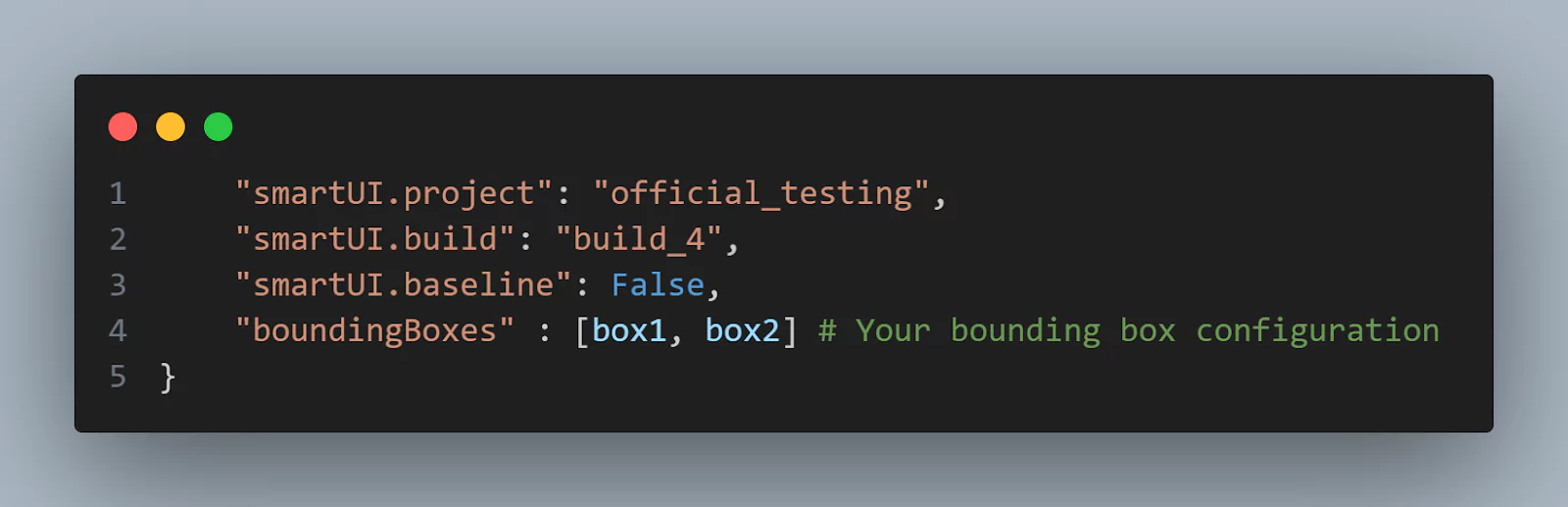

"smartUI.project": "official_testing",

"smartUI.build": "build_4",

"smartUI.baseline": False,

"boundingBoxes" : [box1, box2] # Your bounding box configuration

}

options = web_driver

options.set_capability('LT:Options', lt_options)

url = f"https://{username}:{accessKey}@{gridUrl}"

driver = webdriver.Remote(

command_executor=url,

options=options

)

yield driver

driver.quit

def test_visual_using_lambdatest(driver):

driver.get(config.get('WEBSITE', 'url'))

driver.execute_script("smartui.takeScreenshot=screenshots/screenshot_initial.jpg")

# Find an input element by its ID and enter text

input_element = driver.find_element(By.ID, "user-message")

input_element.send_keys("This is a visual testing text!")

# # Find an element by its ID and click on it

element = driver.find_element(By.ID, "showInput")

element.click()

# # Find an element by its ID and extract its text

element = driver.find_element(By.ID, "message")

driver.execute_script("smartui.takeScreenshot=screenshots/screenshot_final.jpg")

assert element.text == "This is a visual testing text!"

result = driver.execute_script("smartui.fetchScreenshotStatus")

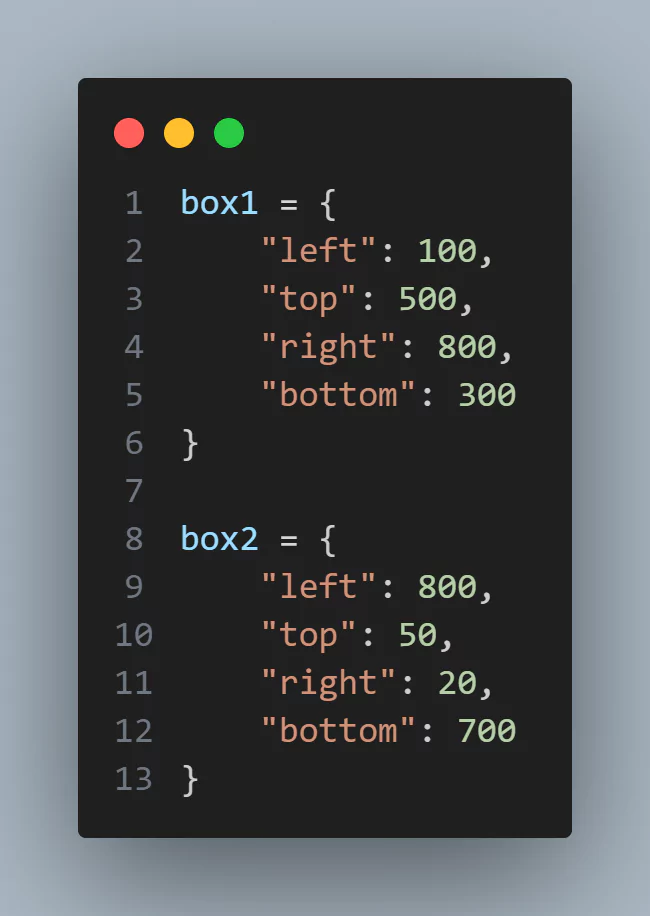

print(result)Code Walkthrough

This file is almost the same as the previous one, with three changes:

- Two box dictionaries are created with some left, top, right, and bottom values set.

- The smartUI.build capability is now set to build_4.

- There is one new capability, boundingBoxes, which is set to the two box dictionaries.

In the code above, two bounding boxes are defined, box1 and box2, each represented by a dictionary specifying the left, top, right, and bottom coordinates. These bounding boxes are then set as a LambdaTest capability through "boundingBoxes": [box1, box2], signifying that only the areas within these bounding boxes will be considered during the visual comparison with the baseline image.

The Bounding Boxes feature is instrumental in isolating specific screenshot areas for comparison, effectively ignoring the rest. This is especially valuable when the goal is to focus on certain elements or sections of a page, ensuring that the visual testing is targeted and precise, facilitating more accurate and meaningful testing outcomes.
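
The effect of restricting a comparison to bounding boxes can be sketched locally as follows. This is an illustration of the concept on a tiny grid and is not how LambdaTest implements the feature. The helper names are hypothetical, and normalizing swapped edges (for example a left value larger than the right value) is an assumption made for this sketch.

```python
def inside_any_box(x, y, boxes):
    """Return True if pixel (x, y) falls inside at least one bounding box."""
    for box in boxes:
        # Assumption: normalize so that swapped edges still describe a rectangle.
        x_min, x_max = sorted((box["left"], box["right"]))
        y_min, y_max = sorted((box["top"], box["bottom"]))
        if x_min <= x <= x_max and y_min <= y <= y_max:
            return True
    return False


def count_differences_in_boxes(baseline, current, boxes):
    """Count differing pixels, considering only pixels inside the boxes."""
    differing = 0
    for y, (base_row, cur_row) in enumerate(zip(baseline, current)):
        for x, (base_px, cur_px) in enumerate(zip(base_row, cur_row)):
            if inside_any_box(x, y, boxes) and base_px != cur_px:
                differing += 1
    return differing


# Tiny 3x3 grayscale "images"; the values and the box are illustrative only.
baseline = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
current = [[9, 0, 0], [0, 9, 0], [0, 0, 9]]
box = {"left": 1, "top": 1, "right": 2, "bottom": 2}

print(count_differences_in_boxes(baseline, current, [box]))
```

The change at the top-left pixel lies outside the box, so only the two changes inside it are counted.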

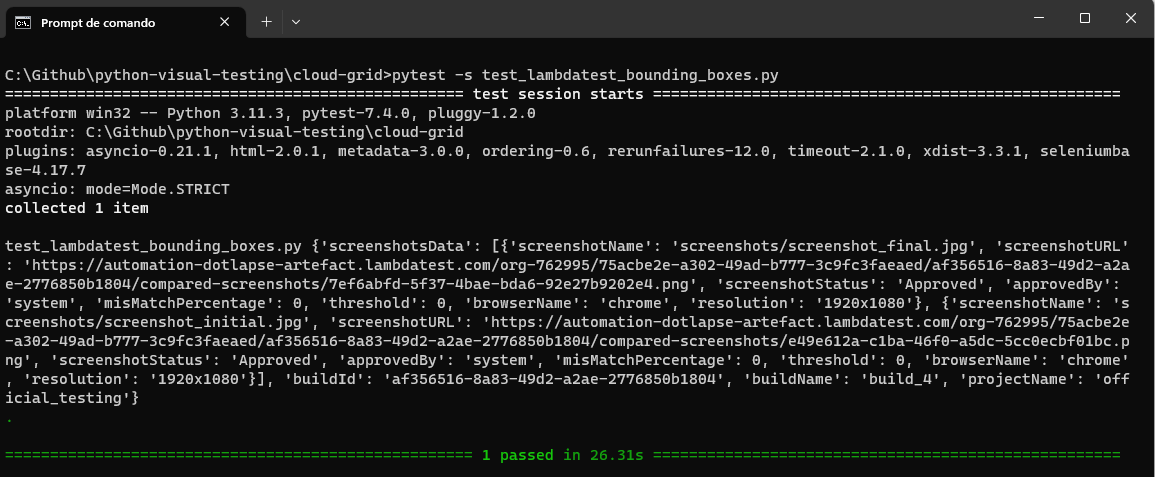

You can run the above code by just calling the below command:

pytest -s test_lambdatest_bounding_boxes.py

In the LambdaTest Dashboard, you can see the test status and the screenshots taken.

Advanced Techniques in Visual Regression Testing

Visual regression testing is pivotal for maintaining visual consistency and user experience across software versions. While basic testing can identify discrepancies, advanced techniques are crucial when dealing with intricate and dynamic elements.

Handling Dynamic Content

Dynamic content such as sliders, videos, animations, and ads can introduce complications to Python visual regression testing.

- Dealing with Sliders, Videos, etc.

- Static State Testing: Freeze sliders or animations at a specific frame or state, ensuring consistent visuals across tests.

- Sequence Testing: Instead of testing a single state, capture a sequence of states to validate the entire animation or transition.

- Using Waits and Timeouts Effectively

- Explicit Waits: Wait for a particular condition to be met (like an element to be displayed) before taking a snapshot.

- Implicit Waits: Set a global timeout during which the driver keeps polling for an element before giving up, giving dynamic elements enough time to load or transition before a snapshot is taken.
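
The static-state and waiting ideas above can be sketched with two small helpers. They rely only on the driver's execute_script method; the helper names, the injected CSS, and the default timeout values are assumptions chosen for illustration.

```python
import time

# CSS that disables animations and transitions on every element.
FREEZE_CSS = (
    "*, *::before, *::after "
    "{ animation: none !important; transition: none !important; }"
)

# JavaScript that appends a <style> tag containing the CSS passed as argument 0.
FREEZE_SCRIPT = (
    "const style = document.createElement('style');"
    "style.textContent = arguments[0];"
    "document.head.appendChild(style);"
)


def freeze_animations(driver):
    """Inject a stylesheet so the page is captured in a static state."""
    driver.execute_script(FREEZE_SCRIPT, FREEZE_CSS)


def wait_for_page_ready(driver, timeout=10.0, poll=0.25):
    """Poll until document.readyState is 'complete' or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if driver.execute_script("return document.readyState") == "complete":
            return True
        time.sleep(poll)
    return False
```

A test would typically call wait_for_page_ready(driver) and then freeze_animations(driver) just before taking a screenshot. For conditions tied to a specific element, Selenium's WebDriverWait combined with expected_conditions is the usual tool for explicit waits.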

Threshold Configurations

The nature of visual testing means that sometimes negligible differences, which are not genuine errors, can be flagged.

- Setting Acceptable Change Limits

- Pixel Threshold: Establish a limit for how many pixels can differ before a test is considered failed.

- Percentage Difference: Determine an acceptable percentage change between images, offering a more scalable threshold method than pixel counts.

- Understanding False Positives and Negatives

- False Positives: These are scenarios where the test flags an error, but there's no genuine issue. A minor rendering difference between browser versions can cause this.

- False Negatives: More dangerous, these occur when a test doesn't flag an error, but there is a genuine issue. Overly generous thresholds or overlooked dynamic content can result in false negatives.
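
The two threshold styles described above can be sketched in a few lines. Images are represented here as plain nested lists so the example stays self-contained; the function names and threshold values are assumptions, and a real project would more likely use an imaging library.

```python
def diff_stats(baseline, current):
    """Return (differing_pixels, percentage) for two equal-sized images."""
    total = 0
    differing = 0
    for base_row, cur_row in zip(baseline, current):
        for base_px, cur_px in zip(base_row, cur_row):
            total += 1
            if base_px != cur_px:
                differing += 1
    percentage = 100.0 * differing / total if total else 0.0
    return differing, percentage


def passes(baseline, current, max_pixels=None, max_percent=None):
    """Apply whichever thresholds are given; with none, any difference fails."""
    differing, percentage = diff_stats(baseline, current)
    if max_pixels is None and max_percent is None:
        return differing == 0
    if max_pixels is not None and differing > max_pixels:
        return False
    if max_percent is not None and percentage > max_percent:
        return False
    return True


baseline = [[0, 0, 0, 0], [0, 0, 0, 0]]
current = [[0, 1, 0, 0], [0, 0, 0, 0]]   # 1 of 8 pixels differs (12.5%)

print(diff_stats(baseline, current))
print(passes(baseline, current, max_pixels=2))       # within the pixel limit
print(passes(baseline, current, max_percent=10.0))   # exceeds the percentage limit
```

The same change passes one threshold and fails the other, which is why the limits need to be chosen with the image size in mind: a limit that is too generous turns real regressions into false negatives.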

Mobile and Responsive Testing

Given the array of devices and resolutions today, testing across different viewports is crucial.

- Emulating Different Devices and Resolutions

- Device Emulation: Use tools that mimic specific device behaviors and characteristics. Many browser developer tools come with device emulation features.

- Viewport Resizing: Adjust the browser size to various resolutions to simulate different devices.

- Dealing with Mobile-Specific Challenges

- Orientation Changes: Mobile devices can switch between portrait and landscape orientations, affecting visual layouts.

- Touch Interactions: Some mobile-specific interactions (like swipes or long-presses) might introduce visual elements (like tooltips) that must be considered in tests.

- Variable Network Conditions: Mobile devices can experience fluctuating network speeds, impacting the loading and appearance of dynamic content.
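
Viewport resizing can be sketched with a loop over a few window sizes. The helper below relies on the WebDriver methods set_window_size, get, and save_screenshot; the viewport names, sizes, and output directory are assumptions chosen for illustration.

```python
# Hypothetical viewport list; names and sizes are illustrative only.
VIEWPORTS = {
    "mobile_portrait": (375, 667),
    "mobile_landscape": (667, 375),
    "tablet": (768, 1024),
    "desktop": (1920, 1080),
}


def capture_viewports(driver, url, output_dir="screenshots"):
    """Load url once per viewport and save one screenshot for each size."""
    saved = []
    for name, (width, height) in VIEWPORTS.items():
        driver.set_window_size(width, height)
        driver.get(url)  # reload so responsive layouts settle at the new size
        path = f"{output_dir}/{name}.png"
        driver.save_screenshot(path)
        saved.append(path)
    return saved
```

Resizing the window only approximates a device: it does not emulate touch input or pixel density, so it complements device emulation rather than replacing it. The output directory is assumed to exist before the function is called.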

By incorporating these advanced techniques, testers can navigate the complexities of modern web environments, ensuring that visual regression tests are thorough, accurate, and relevant to real-world usage scenarios.

Conclusion

Python visual regression testing is an indispensable pillar in software development, ensuring that any alterations in the codebase do not inadvertently introduce visual discrepancies or errors in the application's user interface. Through this detailed exploration, we have journeyed through the foundational concepts, practical implementation, and some of the available tools in the Python ecosystem for conducting visual regression testing.

In closing, Python visual regression testing emerges as a vital practice, safeguarding the visual integrity and user experience of applications in an ever-evolving digital landscape. Armed with the knowledge and practical insights gleaned through this exploration, developers and testers alike are well-equipped to harness the power of Python for crafting robust and effective visual regression tests, thereby contributing to the delivery of high-quality software products.


Author's Profile

Paulo Oliveira

Paulo is a Quality Assurance Engineer with more than 15 years of experience in Software Testing. He loves to automate tests for all kinds of applications (both backend and frontend) in order to improve the team’s workflow, product quality, and customer satisfaction. Although his main roles involved hands-on application testing, he has also worked as a QA Lead, planning and coordinating activities, as well as coaching and contributing to team members’ development. Sharing knowledge and mentoring people to achieve their goals make his eyes shine.

Reviewer's Profile

Himanshu Sheth

Himanshu Sheth is a seasoned technologist and blogger with more than 15 years of diverse working experience. He currently works as the 'Lead Developer Evangelist' and 'Director, Technical Content Marketing' at LambdaTest. He is very active with the startup community in Bengaluru (and down South) and loves interacting with passionate founders on his personal blog (which he has been maintaining for more than 15 years).
