
March 20, 2023

Performance Testing: Its Working With Best Practices

Learn the basics of performance testing and how it helps detect and fix the performance bottlenecks in the software application.

OVERVIEW

Performance testing is a non-functional testing technique that checks how stable, fast, scalable, and responsive a software application is under a specific workload.

Every newly developed software application with multiple features requires testing for reliability, scalability, resource usage, and other factors. A software application with mediocre performance metrics can earn a bad reputation and miss its sales revenue targets.

Performance testing includes validating all quality attributes of the software system, like application output, processing speed, network bandwidth utilization, data transfer speed, maximum parallel users, memory consumption, workload efficiency, and command response times.

What is Performance Testing?

When a tester subjects a software application to a specific workload for resource usage, scalability, reliability, stability, response time, and speed, this process is known as performance or perf testing. The main intention of a performance test is to detect and fix the performance bottlenecks in the software application.

During a performance test, the QA team focuses on the following parameters:

- Stability: whether the software application remains stable as the workload changes.

- Scalability: the maximum user load the software application can handle.

- Speed: whether the application responds quickly.


Importance of Performance Testing

Performance testing is not about verifying the features and functionalities of the software application. Instead, it evaluates the performance of the software application under user load by finding out the scalability, resource usage, reliability, and response time.

The gist of a performance test is not the detection of bugs but the elimination of performance bottlenecks. The testing team shares the results pertinent to the software application’s scalability, stability, and speed with the stakeholders to analyze the results and identify the required improvements to be made in the software applications before sharing them with the end user or customer.

Without performance tests, the software application might suffer from the following issues:

- Poor usability.

- Inconsistent behavior when the software application runs on different operating systems.

- Slower-than-normal speed when multiple users access the software application simultaneously.

Releasing such applications to the market can damage the organization's reputation and cause it to miss its sales targets.

Examples of Performance Testing

Some applications are mission-critical, such as life-saving medical equipment or space launch programs. Passing performance tests gives assurance that they will run for long periods without deviations. Let's understand the stakes with some examples.

Dun & Bradstreet has published the following statistics.

Of the Fortune 500 companies, 59% experience 1.6 hours of downtime per week. Assume that an average Fortune 500 company in 2022 has at least 10,000 employees and pays them 56 USD per hour. For such an organization, the labor portion of the downtime cost adds up to 0.896 million USD per week (10,000 × 56 USD × 1.6 hours), or about 46 million USD per year.
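The labor-cost figures above follow from simple multiplication. The minimal Python sketch below reproduces them from the article's numbers; the 52-weeks-per-year factor is an assumption, since the text gives only the rounded yearly total.

```python
# Figures from the downtime example; WEEKS_PER_YEAR = 52 is an assumption.
EMPLOYEES = 10_000
HOURLY_WAGE_USD = 56
DOWNTIME_HOURS_PER_WEEK = 1.6
WEEKS_PER_YEAR = 52


def downtime_labor_cost(employees: int, hourly_wage: float, hours: float) -> float:
    """Labor cost of downtime: every employee is idle for `hours` at `hourly_wage`."""
    return employees * hourly_wage * hours


weekly = downtime_labor_cost(EMPLOYEES, HOURLY_WAGE_USD, DOWNTIME_HOURS_PER_WEEK)
yearly = weekly * WEEKS_PER_YEAR

print(f"Weekly downtime cost: {weekly:,.0f} USD")  # 896,000 USD
print(f"Yearly downtime cost: {yearly:,.0f} USD")  # 46,592,000 USD (about 46 million)
```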

The third example is an Amazon Web Services (AWS) outage, which caused a loss of 1,100 USD per second for the affected companies.

These three examples illustrate the significance of performance testing.

Benefits of Performance Testing

If the software application works slowly, customers who expect speed will form a bad impression. When they notice that it takes longer than is reasonable to load or stalls during use, they will simply abandon it.

The consequence is that the organization will lose customers to competitors. A software application that cannot deliver a satisfactory performance as per current standards will adversely affect your business.

Therefore, the performance of a software application must be tested and brought to current standards before delivering it to the end user. This is where performance testing provides the following advantages.

- Validation of the basic features

This step consists of measuring the performance of the essential functions of the software application. The results help business leaders make significant decisions, and a robust software foundation enables them to plan their business strategy.

- Measurement of the quality attributes

Measuring speed, precision, and stability during performance testing shows how the software application will handle scaling. When the testing team shares this information with the development team, the developers can decide which changes the software application needs. The development and testing teams can repeat this cycle until they achieve their objectives.

- End-user satisfaction

The first impression your software application makes on customers is very significant. According to research, users of web and mobile applications expect them to load within about two seconds. If your application satisfies users within these two seconds, it is a great achievement for the organization.

- Identification of discrepancies and resolution of issues

If the team identifies some discrepancies, the testing team shares them with the development team. In this manner, they get some buffer time before the release of the software application. The development team resolves these issues during this buffer time.

- Optimization and load capacity

Another benefit of performance testing is better optimization and load capacity. Performance measurements help the organization address volume issues so that the software application remains stable even when the number of users is very high.

Types of Performance Testing

Performance testing includes the following eight types.

- Scalability testing

In scalability testing, the QA team increases the user load on the software application and tests whether the application scales up to withstand it. The results can be used in the planning and design phases of software development, which helps reduce costs and mitigate potential performance issues.

- Volume testing

The software testing team populates a large volume of data in the software application's database to monitor the software application's behavior. They vary the database volume and check the software application's performance under varying volume loads.

A pertinent example is the website of a new college. In its initial years, the website holds a small, manageable volume of data. After five years, however, its database holds an enormous volume of data.

- Spike testing

The QA team generates sudden large spikes in the user load of the software application and checks the reaction of the software application to these spikes.

- Endurance testing

The testing team checks whether the software application can withstand the expected user load for a long time.

- Stress testing

The QA team subjects the software application to extreme workloads to check the behavior of the software application when there is high traffic or high-volume data processing. The final intention of this testing is to determine the breaking point of the software application.

The team finds out the answers to the following questions:

- As the load on the software application increases, up to what load can the application operate without breaking down?

- What information is available about the breakdown of the software application?

- After the software application crashes, can it recover? If yes, under what conditions?

- In what different ways can the system break?

- Which components are the weak points while the software application handles the unexpected load?

- Load testing

The quality assurance team checks the software application's ability to perform under expected user loads. Load testing aims to identify performance bottlenecks before the software application goes live.

The team finds out the answers to the following questions:

- As the load on the software application increases, up to what load does the application behave as expected? Beyond what load does it behave erratically?

- After what volume of data does the software application crash or slow down?

- Are there any network-related issues that need to be addressed?

- Capacity testing

The testing team determines the number of users or transactions the software application can support while maintaining the required performance. The team considers resources such as disk capacity, memory usage, network bandwidth, and processor capacity and modifies them to meet the goals.

The team finds out the answers to the following questions:

- Can the software application bear the future load?

- Can the environment withstand the upcoming increased load?

- If the environment's capacity is inadequate, which additional resources are needed?

- Recovery or Reliability testing

The QA team subjects the software application to abnormal behavior or failure. Then, the team checks whether the software application in such a state can return to its normal state. If the software application returns to its normal state, then the team determines the time required by the software application to change from the abnormal or failed state to the normal state.

An example of this is an online trading site. During peak hours, the site fails and remains in the failed state for two hours, during which users cannot buy or sell shares. After two hours, the application returns to its normal state and users can trade again. In such a case, the team can state that the software application recovers from the abnormal behavior, with a recovery time of two hours.
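The test types above differ mainly in how the load is shaped over time. The following Python sketch expresses four of those shapes (load, spike, endurance, and stress) as functions of elapsed minutes. The 100-user baseline, 10-minute ramp, 5x spike, and 50% stress increments are hypothetical values chosen for illustration; real tools define these profiles through their own configuration, which this sketch does not model.

```python
def target_users(test_type: str, minute: int, expected_users: int = 100) -> int:
    """Return the virtual-user count to apply at `minute` for an illustrative test shape."""
    if test_type == "load":
        # Ramp linearly to the expected load over 10 minutes, then hold it.
        return min(expected_users, expected_users * minute // 10)
    if test_type == "spike":
        # Steady expected load with a sudden 5x burst between minutes 20 and 24.
        return expected_users * 5 if 20 <= minute < 25 else expected_users
    if test_type == "endurance":
        # Hold the expected load flat for the whole (long) run.
        return expected_users
    if test_type == "stress":
        # Add another 50% of the expected load every 5 minutes (no upper bound).
        return expected_users + (expected_users // 2) * (minute // 5)
    raise ValueError(f"unknown test type: {test_type!r}")


for kind in ("load", "spike", "endurance", "stress"):
    profile = [target_users(kind, m) for m in range(0, 31, 5)]
    print(f"{kind:>9}: {profile}")
```

Volume, capacity, scalability, and recovery testing are not modeled here, because they vary data, resources, or failure conditions in ways a single user-count curve does not capture.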

Common Issues in a Software Application’s Performance

One of the most significant attributes of an application is speed. If a software application runs at a slower speed, this application is likely to lose potential users. When performance testing happens, the team can ascertain that the software application is running adequately fast to retain the attention and interest of the user.

In addition to slow speed, other performance problems are related to poor scalability, load time, and response time. The following is a list of the usual performance issues.

- Bottlenecks

These are hurdles in the software application that degrade system performance and reduce throughput under specific loads. Bottlenecks can be caused by hardware issues or coding errors, and a single faulty section of code is often enough to create one.

Common bottlenecks involve disk usage, operating system limitations, network utilization, memory utilization, and CPU utilization. The fix starts with locating the component or section of code that causes the slowdown. The usual remedies are adding hardware or improving slow-running processes.

- Poor scalability

Some software applications cannot accommodate a sufficiently broad range of users or handle the expected number of users; these applications need better scalability. The remedy is to load test the software application. If the load test succeeds, the application can manage the anticipated number of users.

- Poor response time

When a user provides input to the software application, it responds with output after some time. This interval between input and output is the response time. Ideally, the response time should be minimal. If it is long, the user has to wait, and as a result loses interest in interacting with the software application.

- Long load time

Load time is the time an application needs to start. Generally, it should be kept to a few seconds, although for some software applications it is impossible to keep the load time below 60 seconds.
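Response time is usually summarized with percentiles rather than a single average, because a few slow requests can ruin the experience while barely moving the mean. A minimal Python sketch, assuming any Python callable can stand in for a user request: `time_call` measures the full duration of the call (not time to first byte), and `percentile` uses the nearest-rank method. The sample values and the 2-second budget are illustrative assumptions.

```python
import math
import time
from typing import Callable, List


def time_call(action: Callable[[], object]) -> float:
    """Return how long `action` took, in seconds (end-to-end, not time to first byte)."""
    start = time.perf_counter()
    action()
    return time.perf_counter() - start


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample such that at least pct% of samples are <= it."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


# Hypothetical stand-in for a real request: sleep for 10 ms.
measured = [time_call(lambda: time.sleep(0.01)) for _ in range(5)]
print(f"p95 of measured calls: {percentile(measured, 95):.3f} s")

# Illustrative response times (seconds) checked against a 2-second budget.
response_times = [0.12, 0.30, 0.25, 1.80, 0.22]
p95 = percentile(response_times, 95)
print(f"p95 = {p95:.2f} s, within 2 s budget: {p95 <= 2.0}")
```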

Process of Performance testing

The objective of performance testing is the same, but the sequence of steps can differ. Following is the generic sequence of steps to run a performance test.

- Requirement Analysis or Gathering.

- Proof of Concept (PoC) and Tool Selection.

- Performance Test Planning and Designing.

- Create Performance Test Use Cases.

- Create Performance Load Model.

- Performance Test Execution.

- Analysis of Test Results.

  - For every test result, the team assigns a unique and meaningful name.

  - The test result summary includes the following information:

    - The reason for the failure.

    - A comparison of the application's performance in the previous test run with the current one.

    - Any modifications to the test environment.

  - After every test run, the team prepares a result summary with the following information:

    - The goal of the test.

    - The number of virtual users.

    - The scenario summary.

    - The test duration.

    - Graphs.

    - Throughput.

    - The response time.

    - A comparison of graphs.

    - The errors that occurred.

    - Some recommendations.

  - Performance testing involves several test runs before a correct conclusion can be drawn.

- Report.

The QA team holds meetings with the customer to identify and gather technical and business requirements. They collect information about the software application, including software and hardware requirements, test requirements, application usage, functionality, intended users, database, technologies, and architecture.

For the PoC, the team first identifies the critical functionality of the software application and then completes the PoC using the available tools. Which tools are suitable depends on the tool cost, the software application's protocol, the technologies the development team used to build the software application, and the user count.

The testing team creates the scripts for the PoC based on the key functionality of the software application. Then, the team executes the PoC with 10 to 15 virtual users.

The software testing team uses the information from Step 1 and Step 2 to plan and design tests. The test plan consists of information related to the test environment, workload, hardware, etc.

The QA team creates use cases for the key functionality of the software application and shares them with the customer for approval. Once the customer approves the use cases, the testing team starts script development, which begins with recording the steps in the use cases.

For this, the team uses the same performance test tools used to execute the PoC. As the situation requires, the team enhances the scripts with custom functions, parameterization (value substitution), and correlation to handle dynamic values. The team then validates the script with different users.
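Parameterization and correlation can be sketched in plain Python. In this hypothetical example, the recorded request body has been turned into a template, each virtual user gets its own row of test data, and a regular expression captures the session token from each login response instead of replaying the token that was recorded. The template, field names, and `fake_login` helper are all illustrative assumptions, not any tool's API.

```python
import re
from typing import Dict, List

# Recorded request body with its hard-coded values replaced by placeholders (hypothetical).
RECORDED_TEMPLATE = "user={user}&session={session}&item={item}"

# Parameterization: one row of hypothetical test data per virtual user.
TEST_DATA: List[Dict[str, str]] = [
    {"user": "vu001", "item": "17"},
    {"user": "vu002", "item": "99"},
]

# Correlation rule: capture the session token the server issues in each login response.
TOKEN_PATTERN = re.compile(r'name="session" value="([^"]+)"')


def fake_login(user: str) -> str:
    """Hypothetical stand-in for a real login response; each user gets a fresh token."""
    return f'<form><input name="session" value="TOKEN-{user}"></form>'


def extract_session(login_response: str) -> str:
    match = TOKEN_PATTERN.search(login_response)
    if match is None:
        raise ValueError("session token not found; the correlation rule needs updating")
    return match.group(1)


def build_body(row: Dict[str, str], session: str) -> str:
    # Fill the template with this user's data row and the freshly captured token.
    return RECORDED_TEMPLATE.format(session=session, **row)


for row in TEST_DATA:
    token = extract_session(fake_login(row["user"]))
    print(build_body(row, token))
```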

While doing script development, the team simultaneously sets up the test environment, which includes the hardware and the software. The third activity is to handle the metadata (that is, the back end) using the scripts.

The testing team creates the Performance Load Model for test execution. The team's main aim is to validate the performance metrics provided by the client and confirm whether they are met. There are many approaches to creating the Performance Load Model; in most instances, the team uses Little's Law.
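Little's Law relates the average number of items in a system to their arrival rate and the time they spend in it. For a closed load test, it is commonly written as N = X × (R + Z), where N is the number of concurrent virtual users, X the throughput in transactions per second, R the average response time, and Z the think time. A minimal Python sketch with hypothetical targets:

```python
def required_virtual_users(throughput_tps: float, response_time_s: float,
                           think_time_s: float) -> float:
    """Little's Law for a closed workload: N = X * (R + Z)."""
    return throughput_tps * (response_time_s + think_time_s)


def achievable_throughput(users: float, response_time_s: float,
                          think_time_s: float) -> float:
    """The same law rearranged: X = N / (R + Z)."""
    return users / (response_time_s + think_time_s)


# Hypothetical targets: 50 transactions/s, 2 s response time, 8 s think time.
users = required_virtual_users(50, 2, 8)
print(f"Virtual users needed: {users:.0f}")  # 500
print(f"Throughput with 500 users: {achievable_throughput(500, 2, 8):.1f} tps")  # 50.0
```

Rearranging the law, as `achievable_throughput` does, is a quick sanity check that a planned user count can actually generate the throughput the client asked for.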

The QA team designs the performance test scenario in line with the Performance Load Model in the Performance Center or the Controller and increases the test load in increments. For example, if the maximum number of users is 500, the team increases the load gradually (10, 15, 20, 30, 50, 100 users, and so on).
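The stepwise ramp-up can be sketched as a loop that applies each load step, checks the results against thresholds, and stops at the first step that breaks them. In this Python sketch, `run_step` is a hypothetical hook where a real tool would run the step, and `simulated_system` fakes its results. The 2-second p95 and 1% error thresholds, and the steps beyond 100 users, are assumptions.

```python
from typing import Callable, Iterable, Optional, Tuple

# (95th-percentile response time in seconds, error rate between 0 and 1)
StepResult = Tuple[float, float]


def find_degradation_point(
    steps: Iterable[int],
    run_step: Callable[[int], StepResult],
    max_p95_s: float = 2.0,
    max_error_rate: float = 0.01,
) -> Optional[int]:
    """Apply each load step in turn; return the first user count that breaks a threshold."""
    for users in steps:
        p95, error_rate = run_step(users)
        print(f"{users:>4} users: p95={p95:.2f}s errors={error_rate:.1%}")
        if p95 > max_p95_s or error_rate > max_error_rate:
            return users
    return None  # every step stayed within the thresholds


def simulated_system(users: int) -> StepResult:
    # Hypothetical stand-in for a real test run: latency grows with load,
    # and errors start once more than 200 users are active.
    p95 = 0.2 + users * 0.01
    error_rate = 0.0 if users <= 200 else 0.05
    return p95, error_rate


STEPS = [10, 15, 20, 30, 50, 100, 200, 300, 500]  # steps after 100 are hypothetical
print("Degradation starts at:", find_degradation_point(STEPS, simulated_system))
```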

Test results are the team's most significant deliverable. When analyzing them, the team follows the practices listed under the Analysis of Test Results step above.

The testing team simplifies the test results so that they lead to a clear conclusion without deviations. The development team needs to know how the testing team reached its results, along with a comparison of results and detailed analysis information.
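Several of the result-summary items (virtual users, duration, throughput, response time, errors) can be computed directly from raw request samples. A minimal Python sketch, assuming a hypothetical `Sample` record per request; real tools export results in their own formats:

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Sample:
    """One request from a test run (hypothetical record format)."""
    timestamp_s: float      # when the request started, relative to the test start
    response_time_s: float
    ok: bool


def summarize(samples: List[Sample], virtual_users: int, goal: str) -> Dict[str, object]:
    """Condense raw samples into a few headline figures for a result summary."""
    if not samples:
        raise ValueError("no samples to summarize")
    start = min(s.timestamp_s for s in samples)
    end = max(s.timestamp_s + s.response_time_s for s in samples)
    duration = end - start
    if duration <= 0:
        raise ValueError("samples span no time")
    times = sorted(s.response_time_s for s in samples)
    errors = sum(1 for s in samples if not s.ok)
    return {
        "goal": goal,
        "virtual_users": virtual_users,
        "duration_s": round(duration, 2),
        "throughput_rps": round(len(samples) / duration, 2),
        "avg_response_s": round(sum(times) / len(times), 3),
        "max_response_s": times[-1],
        "errors": errors,
    }


run = [Sample(0, 0.5, True), Sample(1, 0.5, True), Sample(2, 1.0, True), Sample(3, 1.0, False)]
print(summarize(run, virtual_users=4, goal="baseline"))
```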

Responsibilities of Performance Testing Team

Following are the roles and responsibilities of a Performance Test Lead and Performance Tester.

Performance Test Lead:

- Procuring the performance requirements.

- Analyzing the performance requirements.

- Drafting the requirements and signing them off.

- Drafting the strategies and signing them off.

- Participating in the reviews of the deliverables.

Performance Tester:

- Developing the performance test scripts for the identified scenarios.

- Conducting the performance tests.

- Submitting the test results.

Key Performance Testing Metrics

During performance testing, the following parameters are monitored.

- Garbage Collection: It involves returning unused memory to the system to increase the application's efficiency.

- Thread Counts: It helps determine the number of running and active threads, which indicates the health of the software application.

- Top Waits: It identifies the longest wait times, which the team can reduce to speed up how quickly data is retrieved from memory.

- Database Locks: It involves monitoring locks on databases and tables so that they can be tuned carefully.

- Rollback Segment: It determines the volume of data that can roll back at a specific time.

- Hits Per Second: During load testing, it provides the number of hits on a web server per second.

- Hit Ratios: It gives the proportion of SQL statements handled by cached data instead of costly input/output operations.

- Maximum Active Sessions: It gives the maximum number of sessions that can be active simultaneously.

- Amount of Connection Pooling: It gives the number of user requests met by pooled connections. The more requests the pool satisfies, the better the performance.

- Throughput Monitoring: It provides the number of requests per second that the network or computer receives.

- Response Time Monitoring: It helps determine the time from the moment a user enters a request until the first character of the response is received.

- Network Bytes Total Per Second: It gives the rate at which bytes are sent and received on the interface, including framing characters.

- Network Output Queue Length: It returns the length of the output packet queue, in packets. A length greater than two indicates a delay, and the testing team must find and remove the bottleneck.

- Disk Queue Length: It provides the average number of read and write requests queued for the selected disk during a sample interval.

- CPU Interrupts Per Second: It gives the average number of hardware interrupts that a processor receives and processes per second.

- Page Faults/Second: It returns the overall rate at which the processor handles page faults.

- Memory Pages/Second: It gives the number of pages that the system reads from or writes to the disk to resolve hard page faults.

- Committed Memory: It provides the amount of virtual memory in use.

- Private Bytes: It gives the number of bytes allocated by a process that cannot be shared with other processes. The private bytes count helps measure memory usage and detect memory leaks.

- Bandwidth: It gives the bits per second used by a network interface.

- Disk Time: It displays the time for which the disk is busy executing read or write requests.

- Memory Use: It gives the amount of physical memory that processes can use on a computer.

- Processor Usage: It displays the time the processor spends executing non-idle threads.
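Several of these metrics are ratios or rates derived from raw counters. The following Python sketch computes a few of them from one hypothetical sample interval. The `CounterSnapshot` field names and values are invented for illustration, and the two-packet threshold comes from the Network Output Queue Length entry above.

```python
from dataclasses import dataclass


@dataclass
class CounterSnapshot:
    """Raw counters collected over one sample interval (hypothetical field names)."""
    interval_s: float
    web_hits: int               # requests received by the web server
    sql_statements: int         # SQL statements executed
    sql_from_cache: int         # statements served from cache without disk I/O
    requests_needing_db: int    # requests that needed a database connection
    served_by_pool: int         # of those, requests served by a pooled connection
    output_queue_length: int    # network output queue length, in packets


def derived_metrics(c: CounterSnapshot) -> dict:
    return {
        "hits_per_second": c.web_hits / c.interval_s,
        "cache_hit_ratio": c.sql_from_cache / c.sql_statements if c.sql_statements else 0.0,
        "pool_hit_ratio": (c.served_by_pool / c.requests_needing_db
                           if c.requests_needing_db else 0.0),
        # A queue longer than two packets is treated as a sign of network delay.
        "network_delay_suspected": c.output_queue_length > 2,
    }


snapshot = CounterSnapshot(60, 3_000, 10_000, 9_200, 2_500, 2_450, 3)
for name, value in derived_metrics(snapshot).items():
    print(f"{name}: {value}")
```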

Performance Testing Tools

For tool selection, you should consider various factors such as platform support, hardware requirements, license cost, and the supported protocol. Some well-known performance test tools are the following.

- HP LoadRunner

This tool has a virtual user generator that simulates the actions of live human users. It can simulate hundreds of thousands of users, so it can subject the software application to real-life loads and reveal how the application behaves under them. It is one of the most popular performance testing tools on the market.

- JMeter

JMeter is an open-source tool for performance and load testing. It analyzes and measures the performance of web applications and web services.

- LoadNinja

LoadNinja is a cloud-based load testing tool. The QA team records load tests and instantly plays them back without complex dynamic correlation, then runs them in real browsers. As a result, the tool reduces load testing time and improves test coverage.

Best practices for Performance Testing

Implementing performance testing using best practices for all the stages (Planning, Development, Execution, and Analysis) assures this endeavor will be successful. Let us understand the best practices for each step.

Planning

- The team should identify the most common workflows (that is, business scenarios) to test. For an existing software application, the team should refer to the server logs and pinpoint the most frequently accessed scenarios. For a new software application, the team should hold discussions with the project management teams to understand the major business flows.

- The team should devise a plan for the load test that encompasses an entire gamut of workflows starting from light usage, proceeding to medium usage, and ending with peak usage.

- The team has to execute several cycles of the load test. With this in mind, it should do two things. The first is to come up with a framework wherein the team can use the same scripts repeatedly. The second is to have a backup of the scripts.

- The team should decide on the duration of the test, such as an hour, eight hours, one day, or one week. Longer tests typically expose major defects such as memory leaks and OS bugs.

- Some organizations use an Application Performance Monitoring (APM) tool. If so, the team can include it in the test runs, which makes it easier to find performance issues along with their root causes.
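Long runs expose memory leaks as a steady upward trend in memory counters such as private bytes. One simple way to flag that trend, sketched here in Python under assumed values, is to fit a least-squares line to the samples and compare its slope with a growth budget. The sample readings and the 5 MB-per-hour threshold are hypothetical.

```python
from typing import Sequence


def slope_per_hour(hours: Sequence[float], values: Sequence[float]) -> float:
    """Least-squares slope of values against time (value units per hour)."""
    n = len(hours)
    if n != len(values) or n < 2:
        raise ValueError("need at least two paired samples")
    mean_t = sum(hours) / n
    mean_v = sum(values) / n
    cov = sum((t - mean_t) * (v - mean_v) for t, v in zip(hours, values))
    var = sum((t - mean_t) ** 2 for t in hours)
    if var == 0:
        raise ValueError("timestamps must not all be equal")
    return cov / var


def leak_suspected(hours: Sequence[float], private_mb: Sequence[float],
                   max_growth_mb_per_hour: float = 5.0) -> bool:
    """Flag a possible leak when memory grows faster than the allowed budget."""
    return slope_per_hour(hours, private_mb) > max_growth_mb_per_hour


# Hypothetical private-bytes samples (MB) taken every 2 hours of an 8-hour run.
hours = [0, 2, 4, 6, 8]
steady = [410, 412, 409, 411, 410]
growing = [410, 450, 492, 530, 571]
print("steady run leak suspected:", leak_suspected(hours, steady))
print("growing run leak suspected:", leak_suspected(hours, growing))
```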

Development

- When the testing team is doing script development (that is, recording), the team should coin meaningful transaction names. They should base these names on the business flow names written in the plan.

- The team must never record requests to third-party applications. If such requests are recorded by mistake, the team should filter them out during script enhancement.

- The team uses the tool's autocorrelation feature to correlate dynamic values. However, autocorrelation cannot handle all such values, so to prevent errors the team should correlate the remaining values manually.

- The team must design the performance tests so that they can hit not only the Cache Server but also the software application’s back end.
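Filtering third-party requests out of a recording amounts to keeping only requests to hosts that belong to the application under test. A minimal Python sketch, where the recorded URLs and the `APP_HOSTS` allowlist are hypothetical:

```python
from typing import List, Set
from urllib.parse import urlparse

# Hypothetical URLs captured while recording a product-search business flow.
RECORDED: List[str] = [
    "https://shop.example.com/search?q=shoes",
    "https://www.google-analytics.com/collect?v=1",
    "https://cdn.example.com/app.js",
    "https://fonts.googleapis.com/css?family=Roboto",
    "https://shop.example.com/api/cart",
]

# Hosts that belong to the application under test (an assumption for this sketch).
APP_HOSTS: Set[str] = {"shop.example.com", "cdn.example.com"}


def keep_first_party(urls: List[str], app_hosts: Set[str]) -> List[str]:
    """Drop requests to hosts outside the application, such as analytics or font services."""
    return [u for u in urls if urlparse(u).hostname in app_hosts]


for url in keep_first_party(RECORDED, APP_HOSTS):
    print(url)
```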

Execution

- In performance testing, the ultimate aim is to simulate a realistic load on the software application. Therefore, the team should run the tests in an environment identical to production in every respect, including firewalls, load balancers, and Secure Sockets Layer (SSL), among others.

- For successful performance testing, the crux is to use a workload identical to the software application's real-life workload. For an existing software application, the team should check the server logs to understand the realistic workload. If the software application is new, the team should discuss this with the business team.

- At times, teams conduct performance tests in an environment half the size of production. Conclusions drawn from such tests can be wrong. The reliable approach is to run performance tests in an environment that is identical or nearly identical in size to production.

- When the team conducts long-run tests, they should check the run intermittently and frequently. By this method, the team can ensure that the performance testing progresses smoothly.
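Deriving a realistic workload from server logs can start with counting which pages users request most often. This Python sketch assumes logs in the Common Log Format and uses made-up sample lines; it ignores query strings so that, for example, all searches count as one workflow.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Matches the request part of a Common Log Format line, e.g. "GET /path HTTP/1.1".
REQUEST_RE = re.compile(r'"(?:GET|POST|PUT|DELETE|PATCH) (\S+) HTTP/[\d.]+"')


def workload_mix(log_lines: Iterable[str], top: int = 3) -> List[Tuple[str, float]]:
    """Return the most requested paths and their share of all parsed requests."""
    counts: Counter = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match:
            path = match.group(1).split("?", 1)[0]  # ignore query strings
            counts[path] += 1
    total = sum(counts.values())
    return [(path, n / total) for path, n in counts.most_common(top)] if total else []


SAMPLE_LOG = [
    '10.0.0.1 - - [01/Jan/2024:10:00:01 +0000] "GET /search?q=tv HTTP/1.1" 200 512',
    '10.0.0.2 - - [01/Jan/2024:10:00:02 +0000] "GET /search?q=phone HTTP/1.1" 200 498',
    '10.0.0.3 - - [01/Jan/2024:10:00:03 +0000] "POST /cart HTTP/1.1" 201 64',
    '10.0.0.1 - - [01/Jan/2024:10:00:05 +0000] "GET /product/42 HTTP/1.1" 200 2048',
    '10.0.0.4 - - [01/Jan/2024:10:00:06 +0000] "GET /search?q=tv HTTP/1.1" 200 512',
]

for path, share in workload_mix(SAMPLE_LOG):
    print(f"{path:<12} {share:.0%}")
```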

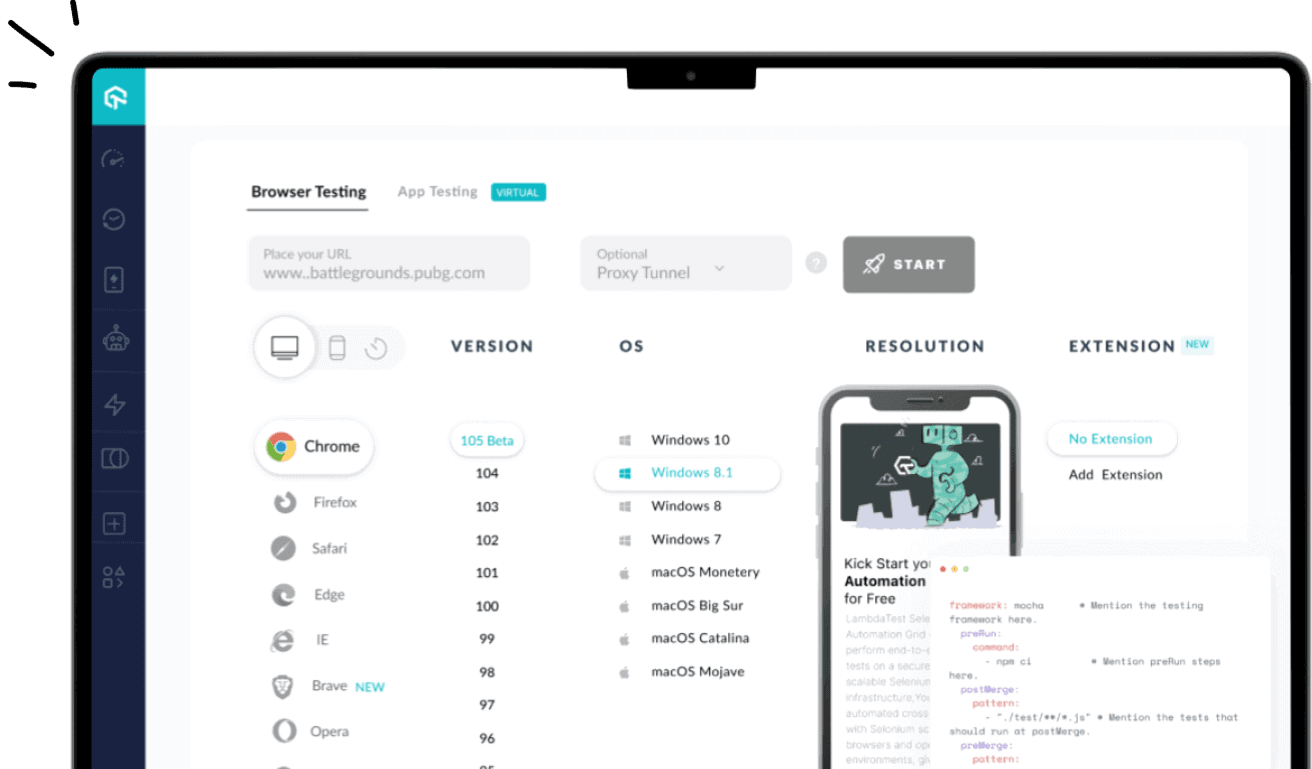

To test software applications in real user conditions, the QA team can use a real device cloud without the hassle of maintaining an in-house device lab. This keeps results representative of real usage, and thorough testing helps keep major bugs from reaching production, so the software application can provide a better user experience.


Analysis

- Initially, the team should monitor a small number of counters. When the team comes across a bottleneck, it should add more counters related to that bottleneck. Analyzing this way makes it easier to pinpoint the issue.

- A software application can fail for many reasons: it may respond slowly, fail the validation logic, respond with an error code, or not respond to a request at all. The team must consider all such reasons before drawing a conclusion.
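The failure reasons listed above can be applied mechanically to each request before drawing conclusions. A Python sketch, assuming a hypothetical `Result` record, a 2-second response budget, and a validation rule that the response body must contain an expected string:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Result:
    """Outcome of one request (hypothetical record format)."""
    status: Optional[int]        # None when no response arrived
    elapsed_s: Optional[float]   # None when no response arrived
    body: str = ""


def classify(result: Result, sla_s: float = 2.0, must_contain: str = "") -> str:
    """Label a request with the first failure reason that applies, or 'ok'."""
    if result.status is None:
        return "no response"
    if result.status >= 400:
        return f"error code {result.status}"
    if must_contain and must_contain not in result.body:
        return "failed validation"
    if result.elapsed_s is not None and result.elapsed_s > sla_s:
        return "too slow"
    return "ok"


checks = [
    Result(200, 0.4, "Order confirmed"),
    Result(200, 3.1, "Order confirmed"),
    Result(200, 0.5, "Something went wrong"),
    Result(503, 0.2),
    Result(None, None),
]
for r in checks:
    print(classify(r, must_contain="Order confirmed"))
```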

Conclusion

Performance testing is a crucial aspect of software development that ensures the application's stability, scalability, and reliability. In this comprehensive guide, we have covered all the important aspects of performance testing, including its definition, types, and best practices. With the help of the right tools and methodologies, performance testing can be an efficient and effective way to ensure your software application's stability, scalability, and reliability.


Author's Profile

Irshad Ahamed

Irshad Ahamed is an optimistic and versatile software professional and technical writer with around four years of working experience in various companies. He strives to deliver excellence at work, applying his expertise and skills wherever they are needed, and adapts to changing technology by upgrading the skills his profession demands.

Reviewer's Profile

Salman Khan

Salman works as a Digital Marketing Manager at LambdaTest. With over four years in the software testing domain, he brings a wealth of experience to his role of reviewing blogs, learning hubs, product updates, and documentation write-ups. Holding a Master's degree (M.Tech) in Computer Science, Salman's expertise extends to various areas including web development, software testing (including automation testing and mobile app testing), CSS, and more.
