- 16 Aug 2023

Big Data Testing Tutorial: Definition, Implementation and Best Practices

Master Big Data testing with our concise tutorial. Learn strategies, tools & best practices for seamless data quality assurance.

OVERVIEW

In the digital age, we are producing an immense amount of data every second. This data comes from various sources, including social media, sensors, machines, and more. This exponential growth in data has given rise to the concept of "Big Data," which refers to the vast and complex datasets that traditional data processing applications struggle to handle. As businesses and organizations rely more on Big Data for decision-making, it becomes crucial to ensure the quality and accuracy of this data. This is where Big Data Testing comes into play.

What is Big Data Testing?

Big Data Testing is a specialized form of software testing that focuses on validating the accuracy, completeness, performance, and reliability of large and complex datasets and the associated processing pipelines within a Big Data environment.

Importance of Big Data Testing

- Reliability and Accuracy: Testing Big Data is crucial for verifying the reliability and accuracy of insights derived from it. Faulty data can result in misleading conclusions and detrimental decisions.

- Avoiding Incorrect Conclusions: Inaccurate or inconsistent data can lead to incorrect conclusions, posing significant risks to businesses. Thorough testing helps prevent such situations.

- Impact on Decision-Making: Faulty insights can lead to misguided decisions that impact business strategies, operations, and outcomes. Effective testing mitigates this risk.

- Anomaly Detection: Big Data testing helps uncover anomalies, outliers, and unexpected patterns within the data. Identifying these anomalies is essential for maintaining data quality.

- Error and Inconsistency Identification: Testing assists in detecting errors and inconsistencies present in the dataset. Addressing these issues ensures the data's integrity and reliability.

- Informed Decision-Making: Organizations rely on accurate information to make informed decisions. Testing ensures that data-driven decisions are based on dependable insights.

- Business Consequences: Incorrect decisions due to flawed data can lead to financial losses, reputational damage, and missed opportunities. Thorough testing mitigates these negative outcomes.

- Data Quality Assurance: Testing processes enhance the overall quality of Big Data by identifying and rectifying issues that compromise the data's quality and usefulness.

- Enhancing Data Governance: Effective testing contributes to a robust data governance framework. Organizations can establish standards for data quality and ensure compliance with data regulations.

- Long-Term Viability: Consistently testing Big Data supports its long-term viability by maintaining its accuracy and relevance over time.

- Cross-Verification: Testing provides a means to cross-verify insights derived from different data sources, ensuring consistency and accuracy across datasets.

- Continuous Improvement: Regular testing fosters a culture of continuous improvement, encouraging organizations to refine data collection, processing, and analysis methods.

- Risk Mitigation: Through thorough testing, organizations can proactively identify and mitigate risks associated with data inaccuracies, safeguarding against potential pitfalls.

- Optimizing Resource Allocation: Reliable insights allow businesses to allocate resources effectively, focusing on areas that provide the most value based on accurate data.

How to Perform Testing for Hadoop Applications

In big data testing, the test environment itself has to be up to the job. It should be able to process data volumes comparable to what a production setup handles. Production clusters can be large (30 to 40 nodes is a typical example), with data distributed across the nodes, and each node must meet certain minimum configuration requirements. Clusters can run on-premises or in the cloud. Effective big data testing needs a similar environment, with comparable node configuration parameters.

An important aspect of the test environment is scalability. This characteristic allows for a thorough examination of application performance as the resources increase in number. The insights gained from this scalability analysis can subsequently contribute to defining the Service Level Agreements (SLAs) for the application.
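To turn a scalability run into numbers that can inform an SLA, one option is to compute speedup and parallel efficiency from measured job runtimes at several cluster sizes. The Python sketch below assumes you have already timed the same job on each cluster size. The function name `scaling_report` and the runtimes in the example are hypothetical placeholders, not benchmarks.

```python
from typing import Dict


def scaling_report(runtimes: Dict[int, float]) -> Dict[int, Dict[str, float]]:
    """Compute speedup and parallel efficiency relative to the smallest cluster.

    runtimes maps node count -> measured runtime (seconds) of the same job.
    speedup(N)    = T(base) / T(N)
    efficiency(N) = speedup(N) / (N / base_nodes)   (1.0 means perfect scaling)
    """
    base_nodes = min(runtimes)
    base_time = runtimes[base_nodes]
    report: Dict[int, Dict[str, float]] = {}
    for nodes in sorted(runtimes):
        speedup = base_time / runtimes[nodes]
        efficiency = speedup / (nodes / base_nodes)
        report[nodes] = {"speedup": speedup, "efficiency": efficiency}
    return report


if __name__ == "__main__":
    # Hypothetical runtimes (seconds) for one job on 10, 20 and 40 nodes.
    measured = {10: 1200.0, 20: 650.0, 40: 400.0}
    for nodes, row in scaling_report(measured).items():
        print(f"{nodes:>3} nodes: speedup {row['speedup']:.2f}, "
              f"efficiency {row['efficiency']:.0%}")
```

Efficiency that drops well below 1.0 as nodes are added often points to a bottleneck (for example, a heavy shuffle or skewed keys) that is worth investigating before committing to an SLA.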

Testing a Hadoop application can be broken down into three stages:

Stage 1: Validation of Data Staging

The first stage, often called the pre-Hadoop stage, focuses on process validation. The key steps are:

- Validate data from diverse sources such as RDBMS, weblogs, and social media to ensure accurate data ingestion.

- Conduct a comparative analysis between source data and data loaded into the Hadoop system to ascertain consistency.

- Verify the accurate extraction and loading of data into the designated HDFS location.

- Leverage tools like Talend and Datameer for data staging validation.
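A simple way to automate the source-versus-HDFS comparison is to reconcile record counts together with an order-independent checksum, so that data loaded in a different order still matches. The sketch below assumes both sides have already been exported as text lines with identical serialization (for example, from the RDBMS and via `hdfs dfs -cat`); the helper names `fingerprint` and `compare_staging` are hypothetical.

```python
import hashlib
from typing import Iterable, Tuple

MOD = 2 ** 256  # digests are summed modulo 2^256


def fingerprint(records: Iterable[str]) -> Tuple[int, str]:
    """Return (record count, order-independent digest) for a collection of records.

    Each record is hashed with SHA-256 and the digests are added modulo 2^256.
    Addition (rather than XOR) keeps duplicate records from cancelling out.
    """
    count = 0
    total = 0
    for rec in records:
        digest = hashlib.sha256(rec.encode("utf-8")).digest()
        total = (total + int.from_bytes(digest, "big")) % MOD
        count += 1
    return count, format(total, "064x")


def compare_staging(source: Iterable[str], staged: Iterable[str]) -> bool:
    """True when source and staged data have the same count and content."""
    src_count, src_digest = fingerprint(source)
    dst_count, dst_digest = fingerprint(staged)
    if (src_count, src_digest) != (dst_count, dst_digest):
        print(f"MISMATCH: source={src_count} rows, staged={dst_count} rows")
        return False
    return True


if __name__ == "__main__":
    source_rows = ["1,alice,2023-08-01", "2,bob,2023-08-02"]
    staged_rows = ["2,bob,2023-08-02", "1,alice,2023-08-01"]  # same data, new order
    print(compare_staging(source_rows, staged_rows))
```

A checksum mismatch only says that something differs; a keyed, row-level comparison is then needed to locate the affected records.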

Stage 2: "MapReduce" Validation

The second stage involves "MapReduce" validation. During this phase, the Big Data tester ensures the validation of business logic on individual nodes and subsequently across multiple nodes. The focus is on confirming the following:

- Correct functioning of the MapReduce process.

- Implementation of data aggregation or segregation rules.

- Generation of key-value pairs.

- Validation of data after the MapReduce process.
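The checks above can be rehearsed on a small input before the job runs on the cluster: run the mapper and reducer on a single simulated node, then again across several simulated partitions, and confirm that both produce the same expected key-value pairs. This is a plain-Python simulation, not the Hadoop API, and the word-count `mapper` and `reducer` are hypothetical stand-ins for your business logic.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def mapper(line: str) -> Iterator[Tuple[str, int]]:
    """Example business logic: emit (word, 1) for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def reducer(key: str, values: List[int]) -> Tuple[str, int]:
    """Aggregate all values emitted for one key."""
    return key, sum(values)


def run_local(lines: Iterable[str]) -> Dict[str, int]:
    """Simulate map -> shuffle (group by key) -> reduce on a single node."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for line in lines:
        for key, value in mapper(line):
            grouped[key].append(value)
    return dict(reducer(k, grouped[k]) for k in sorted(grouped))


def run_partitioned(lines: List[str], partitions: int) -> Dict[str, int]:
    """Split input across simulated nodes, reduce per node, then merge.

    The result matches run_local only if the reducer can be applied to
    partial results (as sum can), which is what this check confirms.
    """
    merged: Dict[str, List[int]] = defaultdict(list)
    for p in range(partitions):
        for key, value in run_local(lines[p::partitions]).items():
            merged[key].append(value)
    return dict(reducer(k, merged[k]) for k in sorted(merged))
```

For example, with `lines = ["big data", "Big testing", "data data"]`, both `run_local(lines)` and `run_partitioned(lines, 2)` should return `{"big": 2, "data": 3, "testing": 1}`.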

Stage 3: Output Validation Phase

The final stage of Hadoop testing revolves around output validation. At this point, the generated output data files are poised for transfer to an Enterprise Data Warehouse (EDW) or another designated system, based on requirements. The activities encompass:

- Scrutinizing the correct application of transformation rules.

- Ensuring data integrity and successful data loading into the target system.

- Safeguarding against data corruption by comparing target data with the HDFS file system data.
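The comparison between HDFS output and the target system can be expressed as a keyed reconciliation that reports missing, unexpected, and changed rows. This sketch assumes both sides fit in memory as dictionaries keyed by a business key; for large tables the same logic would run per partition or be pushed down into SQL or HiveQL. The function name `reconcile` is hypothetical.

```python
from typing import Dict, List, Tuple

Row = Tuple  # one row as a tuple of column values


def reconcile(hdfs_rows: Dict[str, Row],
              target_rows: Dict[str, Row]) -> Dict[str, List[str]]:
    """Compare HDFS output with rows loaded into the target system (e.g. an EDW).

    Returns the keys that are missing from the target, unexpected in the
    target, or present in both with different values (possible corruption).
    """
    hdfs_keys, target_keys = set(hdfs_rows), set(target_rows)
    return {
        "missing_in_target": sorted(hdfs_keys - target_keys),
        "unexpected_in_target": sorted(target_keys - hdfs_keys),
        "mismatched": sorted(
            k for k in hdfs_keys & target_keys if hdfs_rows[k] != target_rows[k]
        ),
    }


if __name__ == "__main__":
    hdfs = {"c1": ("alice", 120), "c2": ("bob", 75), "c3": ("carol", 30)}
    edw = {"c1": ("alice", 120), "c2": ("bob", 57), "c4": ("dave", 10)}
    print(reconcile(hdfs, edw))
```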

By meticulously navigating through these stages, a comprehensive testing framework for Hadoop applications can be established, ensuring the reliability and robustness of your big data solutions.

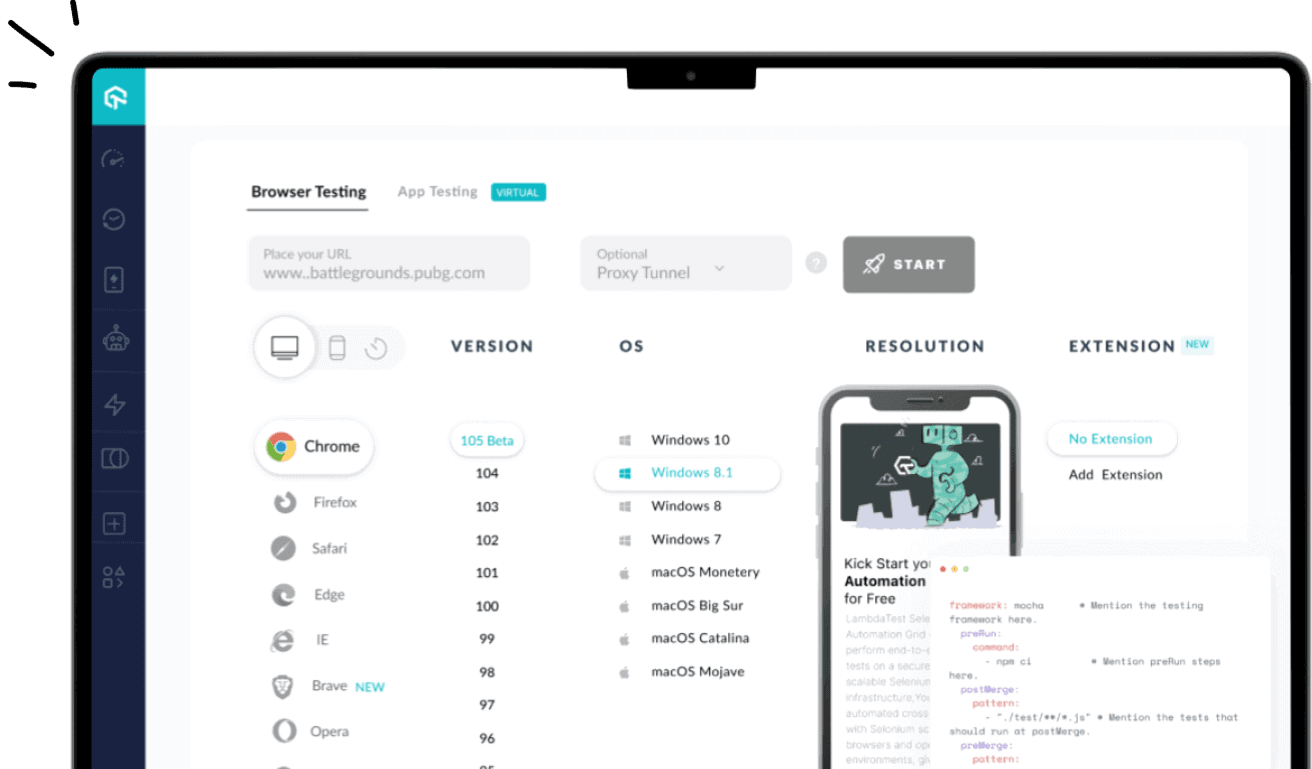

Traditional and Big Data Testing

| Characteristic | Traditional Database Testing | Big Data Testing |
|---|---|---|
| Data | Works with structured data | Works with both structured and unstructured data |
| Testing approach | Well-defined and time-tested | Requires focused R&D effort |
| Testing strategy | "Sampling" or "exhaustive verification" using automation | A "sampling" strategy is challenging to apply |
| Infrastructure | Regular environment, since file sizes are limited | Special environment (e.g., HDFS) due to large data sizes |
| Validation tools | Excel-based macros or UI automation tools | A range of tools, from MapReduce to HiveQL |
| Tool skills | Basic operating knowledge, minimal training | Specific skills and training required; tool features still evolving |

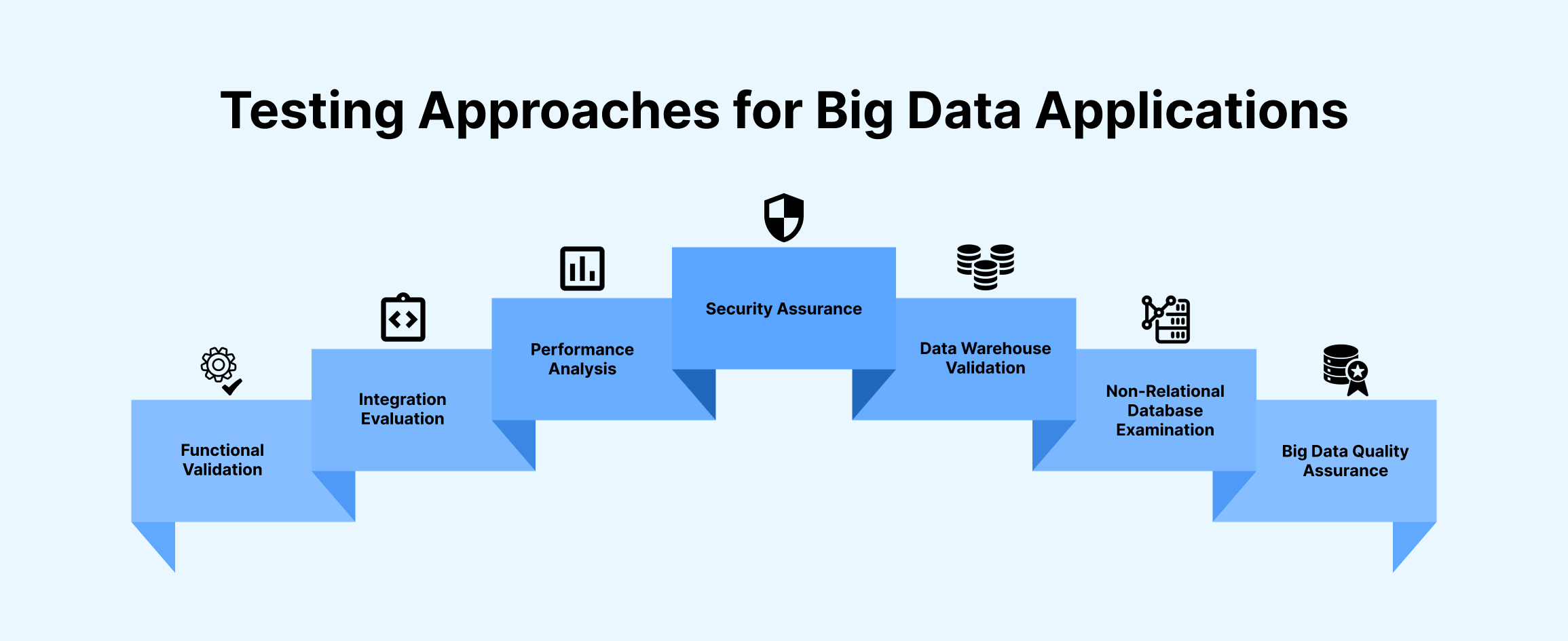

Testing Approaches for Big Data Applications

Functional Validation

Testing a comprehensive big data application that comprises both operational and analytical components necessitates a meticulous approach to functional validation, particularly at the API level. To begin, it is essential to verify the functionality of each individual element within the big data app.

For instance, in the case of a big data operational solution built on an event-driven architecture, a test engineer will methodically assess the output and behavior of each constituent component (such as data streaming tools, event libraries, and stream processing frameworks) by inputting test events. Following this, an end-to-end functional assessment ensures the seamless coordination of the entire application.
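A component-level functional test of this kind feeds hand-crafted test events into one processing step and asserts on its output, including boundary values and malformed input. In the sketch below, `Event`, `Alert`, and `high_value_alerts` are a hypothetical stream-processing step written in plain Python; a real test would drive the actual streaming framework's operator with the same kind of events.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class Event:
    user_id: Optional[str]
    amount: float


@dataclass(frozen=True)
class Alert:
    user_id: str
    amount: float


def high_value_alerts(events: Iterable[Event], threshold: float = 1000.0) -> List[Alert]:
    """Hypothetical processing step: drop malformed events and emit an
    alert for every order at or above the threshold."""
    alerts: List[Alert] = []
    for e in events:
        if e.user_id is None or e.amount < 0:
            continue  # malformed event: discard
        if e.amount >= threshold:
            alerts.append(Alert(e.user_id, e.amount))
    return alerts


def test_high_value_alerts() -> None:
    test_events = [
        Event("u1", 50.0),     # below threshold: no alert
        Event("u2", 1500.0),   # above threshold: alert
        Event(None, 9999.0),   # missing user id: must be dropped
        Event("u3", -5.0),     # negative amount: must be dropped
        Event("u4", 1000.0),   # boundary value: alert
    ]
    assert high_value_alerts(test_events) == [Alert("u2", 1500.0), Alert("u4", 1000.0)]


if __name__ == "__main__":
    test_high_value_alerts()
    print("component test passed")
```

Once each component passes tests like this, the end-to-end assessment can replay the same events through the full pipeline and compare the final output.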

Integration Evaluation

Integration testing confirms that the big data application communicates smoothly with third-party software, and that components within and across the application's segments work together as intended.

The verification process also involves confirming the compatibility of diverse technologies employed. Tailored to the specific architecture and technological stack of the application, integration testing assesses, for instance, the interaction among technologies from the Hadoop family, such as HDFS, YARN, MapReduce, and other pertinent tools.

Performance Analysis

Maintaining consistent and robust performance within a big data application demands comprehensive performance analysis. This involves multiple facets:

- Evaluating actual access latency and determining the maximum data and processing capacities.

- Gauging response times from various geographical locations, considering potential variations in network throughput.

- Assessing the application's resilience against stress load and load spikes.

- Validating the application's efficient resource utilization.

- Tracing the application's scalability potential.

- Conducting tests under load to ensure the core functionality remains intact.
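Several of these facets (latency, behaviour under concurrent load, tail response times) can be measured with a small load driver that records per-request latency and reports percentiles. This is a minimal sketch, assuming the operation under test can be wrapped in a Python callable; `load_test` is a hypothetical helper and `fake_query` merely sleeps in place of a real query.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def timed(fn: Callable[[], object]) -> float:
    """Run fn once and return its wall-clock latency in milliseconds."""
    start = time.perf_counter()
    fn()
    return (time.perf_counter() - start) * 1000.0


def load_test(fn: Callable[[], object], requests: int, concurrency: int) -> Dict[str, float]:
    """Issue `requests` calls using `concurrency` worker threads and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies: List[float] = list(pool.map(lambda _: timed(fn), range(requests)))
    # 99 cut points (p1..p99); "inclusive" avoids extrapolating past the data.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "p50_ms": statistics.median(latencies),
        "p95_ms": cuts[94],
        "p99_ms": cuts[98],
        "max_ms": max(latencies),
    }


if __name__ == "__main__":
    def fake_query() -> None:
        time.sleep(0.01)  # placeholder for a real call to the system under test

    for workers in (1, 8, 32):
        print(workers, load_test(fake_query, requests=100, concurrency=workers))
```

Threads suit I/O-bound calls such as queries to a remote cluster; to generate heavier load or test from several geographic locations, a dedicated load-testing tool running on multiple machines is the more realistic choice.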

Security Assurance

Ensuring the security of vast volumes of sensitive data mandates rigorous security testing protocols. To this end, security test engineers should undertake the following:

- Validate encryption standards for data at rest and in transit.

- Verify data isolation and redundancy parameters.

- Identify architectural vulnerabilities that may compromise data security.

- Scrutinize the configuration of role-based access control rules.

- At a heightened security level, engage cybersecurity professionals to perform comprehensive application and network security scans, along with penetration testing.
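Reviewing role-based access control rules can be partly automated by diffing the grants actually configured on the cluster against an approved policy. The sketch assumes the grants have already been exported from whatever authorization layer the cluster uses into a simple role-to-permissions mapping; that export step, the `Policy` structure, and `audit_rbac` are hypothetical.

```python
from typing import Dict, Set, Tuple

# Hypothetical policy format: role -> set of (dataset, action) pairs.
Policy = Dict[str, Set[Tuple[str, str]]]


def audit_rbac(expected: Policy, actual: Policy) -> Dict[str, Set[Tuple[str, str, str]]]:
    """Diff configured grants against the approved policy.

    'excess' grants violate least privilege; 'missing' grants break
    functionality. Findings are reported as (role, dataset, action).
    """
    excess: Set[Tuple[str, str, str]] = set()
    missing: Set[Tuple[str, str, str]] = set()
    for role in expected.keys() | actual.keys():
        exp = expected.get(role, set())
        act = actual.get(role, set())
        excess |= {(role, d, a) for d, a in act - exp}
        missing |= {(role, d, a) for d, a in exp - act}
    return {"excess": excess, "missing": missing}


if __name__ == "__main__":
    approved = {"analyst": {("sales", "read")},
                "etl": {("sales", "read"), ("sales", "write")}}
    configured = {"analyst": {("sales", "read"), ("customers_pii", "read")},
                  "etl": {("sales", "read")}}
    print(audit_rbac(approved, configured))
```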

Data Warehouse Validation

Testing the efficacy of a big data warehouse involves multiple facets, including accurate interpretation of SQL queries, validation of business rules and transformation logic embedded within DWH columns and rows, and the assurance of data integrity within online analytical processing (OLAP) cubes. Additionally, the smooth functioning of OLAP operations, such as roll-up, drill-down, slicing and dicing, and pivot, is verified through BI testing as an integral part of DWH testing.
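One concrete OLAP integrity check is that a roll-up matches its detail data: recompute the aggregate from the fact rows and compare it cell by cell with what the cube reports. The sketch below assumes daily revenue facts keyed by region and a monthly cube exposed as a dictionary; the schema and the names `roll_up_by_month` and `check_roll_up` are hypothetical.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List, Tuple

Cell = Tuple[str, str]  # (YYYY-MM, region)


def roll_up_by_month(daily: List[Tuple[date, str, float]]) -> Dict[Cell, float]:
    """Aggregate (day, region, revenue) facts into (month, region) totals."""
    totals: Dict[Cell, float] = defaultdict(float)
    for day, region, revenue in daily:
        totals[(day.strftime("%Y-%m"), region)] += revenue
    return dict(totals)


def check_roll_up(daily: List[Tuple[date, str, float]],
                  monthly_cube: Dict[Cell, float],
                  tol: float = 0.01) -> List[Tuple[Cell, float, float]]:
    """Return (cell, expected, reported) for every cube cell that disagrees
    with the roll-up recomputed from the detail rows."""
    expected = roll_up_by_month(daily)
    problems = []
    for cell in expected.keys() | monthly_cube.keys():
        exp = expected.get(cell, 0.0)
        got = monthly_cube.get(cell, 0.0)
        if abs(exp - got) > tol:
            problems.append((cell, exp, got))
    return sorted(problems)
```

The same pattern applies to drill-down (the detail rows must sum to the parent cell) and to slices (filtering the facts first, then comparing with the sliced cube).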

Non-Relational Database Examination

Examination of the database's query handling capabilities is central to testing non-relational databases. Moreover, prudent assessment of database configuration parameters that may impact application performance, as well as the efficacy of the data backup and restoration process, is recommended.

Big Data Quality Assurance

Given the inherent complexity of big data applications, achieving absolute consistency, accuracy, auditability, orderliness, and uniqueness across the entire data ecosystem is impractical. However, big data testers and data engineers should strive for satisfactory quality on several challenging fronts:

- Validation of batch and stream data ingestion processes.

- Rigorous evaluation of the ETL (extract, transform, load) process.

- Assurance of reliable data handling within analytics, transactional applications, and analytics middleware.
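For batch or stream ingestion, "satisfactory quality" is easier to enforce when it is expressed as rules that are counted per batch (or per micro-batch) and compared with agreed thresholds. The sketch below covers completeness, uniqueness, and validity; the record layout, the `email` column, and `quality_report` are hypothetical, and the email pattern is deliberately simple.

```python
import re
from typing import Dict, List, Optional

Record = Dict[str, Optional[str]]

# Deliberately simple validity rule for a hypothetical "email" column.
EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def quality_report(batch: List[Record], key: str, required: List[str]) -> Dict[str, int]:
    """Count completeness, uniqueness and validity violations in one batch."""
    seen = set()
    report = {"missing_required": 0, "duplicate_keys": 0, "invalid_email": 0}
    for rec in batch:
        # Completeness: every required column is present and non-empty.
        if any(rec.get(col) in (None, "") for col in required):
            report["missing_required"] += 1
        # Uniqueness: the business key must not repeat within the batch.
        k = rec.get(key)
        if k in seen:
            report["duplicate_keys"] += 1
        seen.add(k)
        # Validity: values that are present must match the expected format.
        email = rec.get("email")
        if email and not EMAIL.match(email):
            report["invalid_email"] += 1
    return report


if __name__ == "__main__":
    batch = [
        {"id": "1", "name": "alice", "email": "alice@example.com"},
        {"id": "2", "name": "", "email": "bob@example"},
        {"id": "1", "name": "carol", "email": None},
    ]
    print(quality_report(batch, key="id", required=["id", "name"]))
```

Uniqueness here is checked only within one batch; duplicates across batches need a lookup against keys that were already loaded.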

Challenges in Big Data Testing

Big data testing presents a range of challenges that must be addressed through a comprehensive testing approach.

1. Test Data Complexity

The exponential surge in data growth over recent years has created a monumental challenge. Enormous volumes of data are generated daily and stored in expansive data centers or data marts. Consider the telecom industry, which generates vast call logs daily for enhancing customer experiences and competitive market positioning. Similarly, for testing purposes, the test data must mirror production data while encompassing all logically valid fields. However, generating such test data is a formidable task. Moreover, the test data must be substantial enough to thoroughly validate the functionality of the big data application.

2. Optimized Testing Environment

The efficacy of data processing significantly hinges on the testing environment performance. Efficient environment setup yields enhanced performance and expeditious data processing outcomes. Distributed computing, employed for processing big data, distributes data across a network of nodes. Therefore, the testing environment must encompass numerous nodes with well-distributed data. Furthermore, meticulous monitoring of these nodes is imperative to achieve peak performance with optimal CPU and memory utilization. Both aspects, the distribution of nodes and their vigilant monitoring, must be encompassed within the testing approach.

3. Unwavering Performance

Performance stands as a pivotal requirement for any big data application, motivating enterprises to gravitate towards NoSQL technologies. These technologies excel at managing and processing vast data within minimal timeframes. Processing extensive datasets swiftly is a cornerstone of big data testing. This challenge entails real-time monitoring of cluster nodes during execution, coupled with diligent time tracking for each iteration of execution. Achieving consistent and remarkable performance remains a testing priority.

By addressing these challenges with a strategic testing approach, the world of big data applications can be navigated with confidence, yielding reliable and high-performing results.

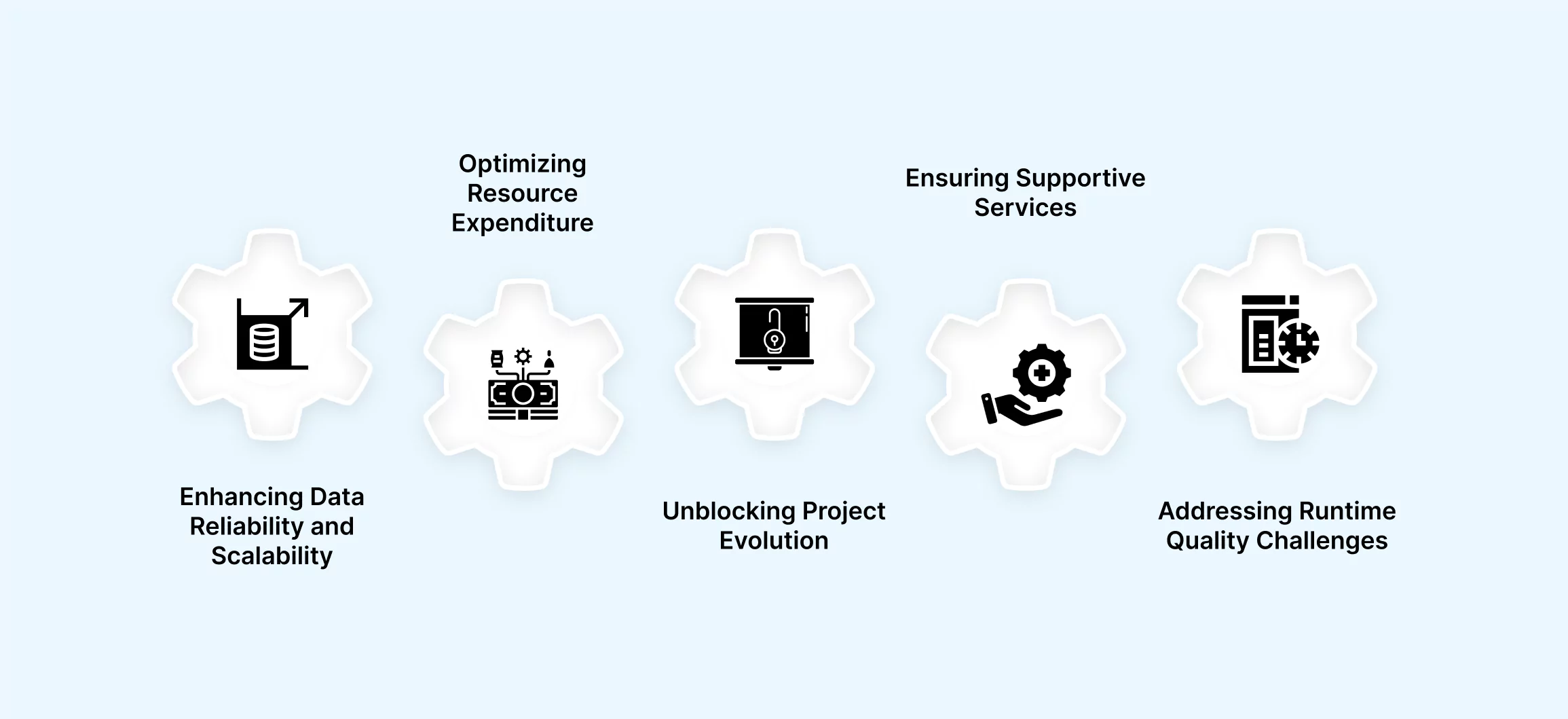

Big Data Challenges in Hadoop-Delta Lake Migration

Organizations decide to migrate away from Hadoop for several key reasons:

- Enhancing Data Reliability and Scalability: One of the foremost motivations for migration from the Hadoop ecosystem lies in the pursuit of improved data reliability and scalability. Enterprises seek advanced solutions that can provide more dependable data management and effortlessly accommodate growing data volumes.

- Optimizing Resource Expenditure: The commitment of time and resources in maintaining and operating a Hadoop environment often leads organizations to assess the cost-effectiveness of their choices. Migration presents an opportunity to streamline resource allocation and mitigate potential inefficiencies.

- Unblocking Project Evolution: Migration also serves as a means to unlock stalled projects. The constraints of the existing Hadoop infrastructure might hinder the progress of initiatives. By migrating, organizations can remove these obstacles and reignite project momentum.

- Ensuring Supportive Services: As technology landscapes evolve, ensuring access to comprehensive and adaptable support services becomes pivotal. Organizations consider migration to platforms that offer responsive and up-to-date support, allowing them to address challenges effectively.

- Addressing Runtime Quality Challenges: The occurrence of runtime quality issues can impede operations and decision-making processes. Migrating to alternative solutions can provide the opportunity to rectify such issues, leading to smoother operations and better-informed strategic choices.

These factors collectively contribute to the rationale behind migration from Hadoop. Organizations strive for solutions that offer enhanced reliability, cost-effectiveness, project agility, support infrastructure, and runtime quality – all of which facilitate their growth and success in a dynamic business landscape.

Big Data Challenges in Cloud Security Governance

Cloud security governance poses several serious challenges when it comes to managing large data sets. Cloud governance features help address these challenges across the following key dimensions:

- Performance Optimization and Management: A primary concern lies in the efficient performance management of intricate data ecosystems within the cloud infrastructure. Employing Cloud Governance allows for strategic monitoring and enhancement of data performance, ensuring that operations remain streamlined and responsive.

- Governance and Control Enhancement: The task of maintaining effective governance and control over expansive data reservoirs becomes paramount. Cloud Governance features offer a framework that enables precise oversight, regulatory compliance, and the enforcement of standardized practices throughout the cloud environment.

- Holistic Cost Management: The financial intricacies associated with managing vast data volumes in the cloud necessitate a comprehensive approach to cost management. Through Cloud Governance, organizations can implement refined strategies for budget allocation, resource optimization, and cost containment, thus achieving economic equilibrium.

- Mitigating Security Imperatives: Addressing security concerns within the cloud paradigm constitutes a critical challenge. Cloud Governance features assume a pivotal role in upholding data integrity, confidentiality, and availability. By integrating robust security measures and proactive monitoring, potential vulnerabilities are systematically identified and mitigated.

Navigating these multifaceted challenges within cloud security governance necessitates a strategic embrace of Cloud Governance features. This facilitates the orchestration of a harmonious and secure coexistence between intricate data landscapes and the dynamic cloud environment.

Key Considerations for Effective Big Data Testing

1. Test Environment Setup

Creating an appropriate test environment that mimics the production environment is crucial for accurate testing results.

2. Data Sampling Techniques

As testing the entire dataset might be impractical, using effective data sampling techniques can help in selecting representative subsets for testing.
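Reservoir sampling (Algorithm R) is one standard way to draw a uniform random sample of fixed size from a dataset too large to hold in memory, in a single pass. The sketch below is a generic Python implementation rather than a feature of any particular big data tool; `reservoir_sample` is an illustrative name.

```python
import random
from typing import Iterable, List, Optional, TypeVar

T = TypeVar("T")


def reservoir_sample(stream: Iterable[T], k: int, seed: Optional[int] = None) -> List[T]:
    """Uniformly sample k items from a stream of unknown length (Algorithm R).

    Memory use is O(k), so the full dataset never has to fit in memory.
    A fixed seed makes the sample reproducible between test runs.
    """
    rng = random.Random(seed)
    reservoir: List[T] = []
    for i, item in enumerate(stream):
        if i < k:
            reservoir.append(item)      # fill the reservoir first
        else:
            j = rng.randint(0, i)       # inclusive: i + 1 possible values
            if j < k:                   # keep item with probability k / (i + 1)
                reservoir[j] = item
    return reservoir
```

Sampling can miss rare defects, so it works best alongside exhaustive checks that are cheap at scale, such as record counts and checksums; for skewed data, sampling each stratum (for example, each region or source system) separately keeps small groups represented.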

3. Test Data Generation

Generating synthetic test data that closely resembles real-world scenarios can help in conducting comprehensive tests.
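Taking the telecom call-log example from earlier, a synthetic generator can produce realistic-looking records from a fixed seed (so failures are reproducible) and deliberately inject edge cases. The record layout, the phone-number format, the distribution parameters, and `generate_calls` below are all hypothetical choices for illustration.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterator


@dataclass
class CallRecord:
    caller: str
    callee: str
    start: datetime
    duration_s: int
    call_type: str


def generate_calls(n: int, seed: int = 0, edge_case_rate: float = 0.05) -> Iterator[CallRecord]:
    """Yield n synthetic call-detail records, reproducible for a given seed.

    A fraction of records are deliberate edge cases (zero-length calls and
    calls that cross midnight) so the pipeline's handling of them is exercised.
    """
    rng = random.Random(seed)
    base = datetime(2023, 8, 1)
    for _ in range(n):
        caller = f"+1555{rng.randint(0, 9_999_999):07d}"
        callee = f"+1555{rng.randint(0, 9_999_999):07d}"
        start = base + timedelta(seconds=rng.randint(0, 86_399))
        duration = int(rng.expovariate(1 / 180))  # mean of roughly 3 minutes
        if rng.random() < edge_case_rate:
            if rng.random() < 0.5:
                duration = 0                                     # dropped call
            else:
                start = base + timedelta(hours=23, minutes=59)   # crosses midnight
                duration = max(duration, 120)
        call_type = rng.choice(["local", "long_distance", "international"])
        yield CallRecord(caller, callee, start, duration, call_type)


if __name__ == "__main__":
    for record in generate_calls(3, seed=42):
        print(record)
```

Because the output is a generator, volumes large enough to exercise the application can be streamed to files without holding them in memory.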

4. Data Validation and Verification

Validating and verifying the accuracy and consistency of the data is vital to ensure its trustworthiness.

5. Performance Testing

Evaluating the performance of Big Data processing applications under different loads and conditions helps identify bottlenecks and optimize performance.

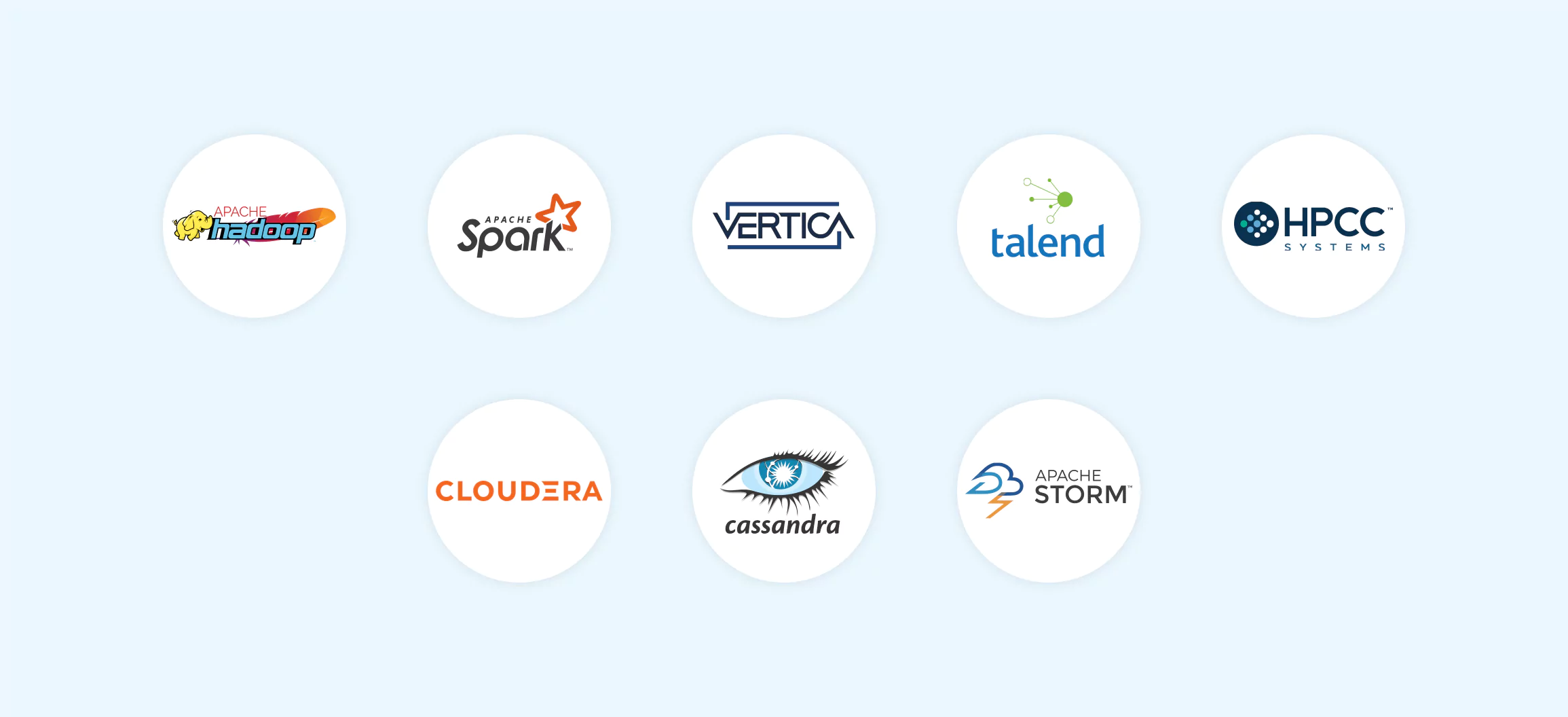

Popular Big Data Testing Tools

1. Apache Hadoop

An open-source framework that enables the storage and processing of vast datasets across distributed systems.

2. Apache Spark

A fast, general-purpose cluster computing engine for large-scale batch processing, with streaming support for near-real-time workloads.

3. HP Vertica

Engineered as a columnar database management system, HP Vertica excels in rapid querying and analytical tasks within the realm of Big Data.

4. Talend

A comprehensive integration platform that equips data management with Big Data testing and quality tools.

5. HPCC (High-Performance Computing Cluster)

A scalable supercomputing platform for Big Data testing, supporting data parallelism and offering high performance. Requires familiarity with C++ and ECL programming languages.

6. Cloudera

An enterprise data platform that bundles Apache Hadoop, Impala, and Spark, providing an environment for testing these technologies at enterprise scale. Known for its easy implementation, robust security, and seamless data handling.

7. Cassandra

Apache Cassandra is a widely used, open-source distributed NoSQL database for managing large volumes of data on commodity servers. It features automated replication, scalability, and fault tolerance.

8. Storm

A versatile open source tool for real-time processing of unstructured data, compatible with various programming languages. Known for its scalability, fault tolerance, and wide range of applications.

The Role of AI in Big Data Testing

The integration of artificial intelligence and machine learning holds a pivotal role in the automation of intricate testing procedures, the identification of irregularities, and the enhancement of overall testing efficacy. This symbiotic relationship between AI and big data testing not only streamlines operations but also empowers the testing framework to detect complex patterns and deviations that might otherwise go unnoticed. The utilization of AI-driven algorithms allows for more robust and comprehensive testing, thereby raising the bar for the quality assurance of vast datasets.

Benefits of Big Data Testing

Case study after case study, companies report advantages from formulating a comprehensive big data testing strategy. This is because big data testing is designed to identify high-quality, accurate, and intact data. Applications can only be enhanced once the data collected from various sources and channels has been verified to behave as expected.

What further gains can your team anticipate through the adoption of big data testing? Here are several benefits that merit consideration:

- Data Precision: Every organization aspires to have accurate data for business planning, forecasting, and decision-making. In any big data application, this data must be validated to confirm its accuracy. The validation process includes:

  - Ensuring an error-free data ingestion process.

  - Confirming that complete and accurate data is loaded onto the big data framework.

  - Validating that data is processed correctly according to the prescribed logic.

  - Verifying that data output in data access tools is accurate and meets the stated requirements.

- Economical Data Storage: Every big data application relies on multiple machines that store data ingested from diverse servers into the big data framework. Storage costs money, so it is vital to validate that ingested data is stored correctly across nodes in accordance with configuration settings such as the data replication factor and the data block size. Data that lacks proper structure or is in poor condition needs more storage; once such data is tested and restructured, its storage footprint shrinks, making it more cost-effective to store.

- Informed Decision-Making and Business Strategy: Accurate data serves as the bedrock for pivotal business decisions. When accurate data reaches capable hands, it transforms into a valuable asset. This facilitates the analysis of diverse risks, ensuring that only data germane to the decision-making process assumes significance. Consequently, it emerges as an invaluable tool for facilitating sound decision-making.

- Timely Availability of Relevant Data: The architecture of a big data framework comprises numerous components, any of which can impede data loading or processing performance. Irrespective of data accuracy, its utility is compromised if it isn't accessible when needed. Applications subjected to load testing, involving varying data volumes and types, adeptly manage extensive data volumes and provide timely access to information as required.

- Deficit Reduction and Profit Amplification: Inadequate big data proves detrimental to business operations, as identifying the source and location of errors becomes challenging. In contrast, accurate data elevates overall business operations, including the decision-making process. Testing such data segregates valuable data from unstructured or subpar data, bolstering customer service quality and augmenting business revenue.
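The storage point about replication factor and block size comes down to simple arithmetic. This sketch assumes the Hadoop defaults of a 128 MB block size (`dfs.blocksize`, 134,217,728 bytes) and a replication factor of 3 (`dfs.replication`); clusters often override both, so substitute your configured values. It ignores checksum files and NameNode metadata, and `hdfs_footprint` is a hypothetical helper.

```python
from typing import Dict

MiB = 1024 ** 2


def hdfs_footprint(file_size_bytes: int,
                   block_size_bytes: int = 128 * MiB,
                   replication: int = 3) -> Dict[str, int]:
    """Estimate block count and raw disk usage for one file in HDFS.

    A file is split into fixed-size blocks; the last block only occupies its
    actual size on disk, so raw usage is file size times replication.
    """
    blocks = -(-file_size_bytes // block_size_bytes)  # ceiling division
    return {
        "blocks": blocks,
        "block_replicas": blocks * replication,
        "raw_bytes": file_size_bytes * replication,
    }


if __name__ == "__main__":
    print(hdfs_footprint(300 * MiB))
```

Under these defaults, a 300 MiB file occupies 3 blocks (128 + 128 + 44 MiB), 9 block replicas, and 900 MiB of raw disk, so every gigabyte removed by cleaning or restructuring data saves roughly three gigabytes of cluster storage. To compare the estimate with what the cluster actually stored, `hdfs fsck <path> -files -blocks -locations` lists the blocks and replica locations for a path.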

Best Practices for Successful Big Data Testing

In the realm of managing and ensuring the quality of extensive datasets, adhering to the finest practices for successful big data testing is paramount. These strategies not only validate the accuracy and reliability of your data but also enhance the overall performance of your data-driven systems.

- Comprehensive Data Verification: Thoroughly examining the data at each stage of its life cycle is essential. This involves scrutinizing its integrity, consistency, and conformity to established standards. By identifying and rectifying anomalies early on, you can prevent erroneous outcomes down the line.

- Realistic Test Environments: Simulating real-world scenarios in your testing environment is crucial. This approach mimics actual usage conditions and helps uncover potential bottlenecks, failures, or inefficiencies that might not be apparent in controlled settings.

- Scalability Assessment: Evaluating your system's scalability is fundamental, as big data applications are designed to handle immense volumes of information. Rigorous testing ensures that your system maintains optimal performance as the data load increases.

- Performance Benchmarking: Establishing performance baselines aids in measuring the efficiency of your big data solution. Through continuous monitoring and comparisons, you can identify deviations and optimize resource allocation for enhanced overall performance.

- Data Security Evaluation: As big data often includes sensitive information, assessing data security measures is crucial. Implementing encryption, access controls, and data masking techniques safeguards the confidentiality and privacy of your data.

- Automation for Efficiency: Embracing automation tools and frameworks streamlines the testing process. Automation not only reduces human error but also accelerates the testing cycle, allowing your team to focus on in-depth analysis.

- Robust Data Validation: Employing rigorous validation methodologies ensures that your big data is accurate and reliable. This involves cross-referencing data from multiple sources and validating it against predefined rules and expectations.

- Collaborative Testing Approach: Encouraging collaboration between development, testing, and operations teams fosters a holistic approach to big data testing. Effective communication and shared insights lead to faster issue resolution and continuous improvement.

- Failover and Recovery Testing: Verifying the failover and recovery mechanisms of your big data systems is essential to maintain uninterrupted service in case of failures. Rigorous testing ensures seamless transitions and minimal data loss.

- Documentation and Reporting: Thorough documentation of testing processes, methodologies, and outcomes is indispensable. Clear and concise reporting facilitates informed decision-making and enables efficient tracking of improvements over time.

By embracing these best practices, you can navigate the complexities of big data testing with confidence. Your meticulous approach will not only ensure data accuracy and reliability but also optimize the performance and resilience of your data-driven endeavors.

Conclusion

Big Data testing is like a crucial puzzle piece when it comes to handling the massive amount of data flooding our digital world. Think of it as a key tool to make sure all this data is accurate and safe. Imagine you're building a strong bridge – without the right materials and checks, the bridge might collapse. Similarly, without proper testing, the data might lead us astray.

By using the right methods and tools, organizations can make smart choices based on this data and get ahead in the business world. Just like a detective finding clues, Big Data testing helps uncover important information. This way, companies not only understand what's happening in the market but also get an edge over their competitors. So, in the end, Big Data testing is like a guide that helps us navigate this new data-driven world successfully.

Author's Profile

Anupam Pal Singh

Anupam Pal Singh is a Product Specialist at LambdaTest, bringing a year of dedicated expertise to the team. With a passion for driving innovation in testing and automation, he is committed to ensuring seamless browser compatibility. His professional journey reflects a deep understanding of quality assurance and product development. He actively contributes insights through blogs and webinars. With a strong foundation in the field, he continues to elevate LambdaTest's impact in the world of testing.

Reviewer's Profile

Harshit Paul

Harshit is currently the Director of Product Marketing at LambdaTest. His professional experience spans over 7 years, with more than 5 years of experience with LambdaTest as a product specialist and 2 years at Wipro Technologies as a certified Salesforce developer. During his career, he has been actively contributing blogs, webinars as a subject expert around Selenium, browser compatibility, automation testing, DevOps, continuous testing, and more.
